Question: Neural networks

Neural networks

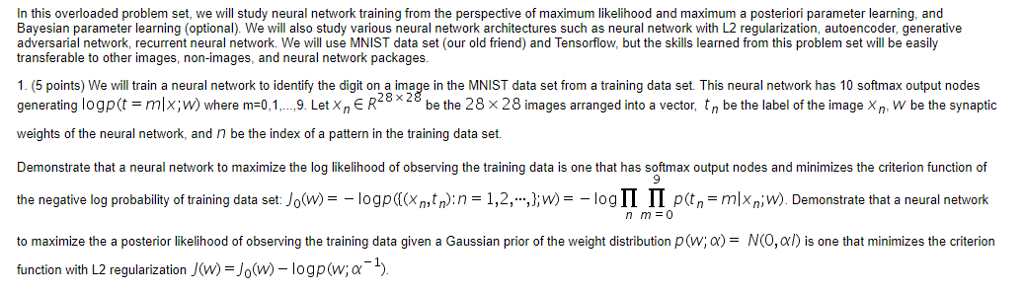

In this overloaded problem set, we will study neural network training from the perspective of maximum likelihood and maximum a posteriori parameter learning, and Bayesian parameter learning (optional). We will also study various neural network architectures, such as a neural network with L2 regularization, an autoencoder, a generative adversarial network, and a recurrent neural network. We will use the MNIST data set (our old friend) and TensorFlow, but the skills learned from this problem set will be easily transferable to other images, non-image data, and other neural network packages.

1. (5 points) We will train a neural network to identify the digit on an image in the MNIST data set from a training data set. This neural network has 10 softmax output nodes generating $\log p(t = m \mid x; w)$, where $m = 0, 1, \ldots, 9$. Let $x_n \in \mathbb{R}^{28 \times 28}$ be the $28 \times 28$ images arranged into a vector, $t_n$ be the label of the image $x_n$, $w$ be the synaptic weights of the neural network, and $n$ be the index of a pattern in the training data set. Demonstrate that a neural network to maximize the log likelihood of observing the training data is one that has softmax output nodes and minimizes the criterion function of the negative log probability of the training data set:

$$J_0(w) = -\log p\big(\{(x_n, t_n) : n = 1, 2, \ldots\}; w\big) = -\log \prod_{n} \prod_{m=0}^{9} p(t_n = m \mid x_n; w)^{\mathbb{1}(t_n = m)}$$

Demonstrate that a neural network to maximize the a posteriori likelihood of observing the training data given a Gaussian prior of the weight distribution, $p(w; \alpha^{-1}) = \mathcal{N}(0, \alpha^{-1} I)$, is one that minimizes the criterion function with L2 regularization:

$$J(w) = J_0(w) - \log p(w; \alpha^{-1})$$
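A sketch of the argument, under three assumptions that the problem statement does not spell out: the training patterns are independent, the inputs $x_n$ do not depend on $w$, and the prior is isotropic with precision $\alpha$ (the symbol $\alpha$ and the logit notation $a_m$ are introduced here for illustration). Softmax outputs, $p(t = m \mid x; w) = e^{a_m} / \sum_k e^{a_k}$, are non-negative and sum to one, so they define a valid categorical likelihood. The indicator exponent keeps one factor per pattern, giving $J_0(w) = -\sum_n \log p(t_n \mid x_n; w)$, the cross-entropy loss. Since $-\log$ is monotonically decreasing, maximizing the likelihood is the same as minimizing $J_0$. For the MAP case, Bayes' rule gives $-\log p(w \mid \text{data}) = J_0(w) - \log p(w; \alpha^{-1}) + \text{const}$, and for the Gaussian prior $-\log p(w; \alpha^{-1}) = \frac{\alpha}{2}\lVert w \rVert^2 + \text{const}$, which is the L2 penalty. The short Python check that follows illustrates both identities on made-up numbers. The function names are hypothetical and only the standard library is used, not TensorFlow.

```python
import math


def log_softmax(logits):
    """Return log p(t = m | x; w) for every class m, given the output logits."""
    peak = max(logits)  # subtract the max so exp() cannot overflow
    log_norm = peak + math.log(sum(math.exp(a - peak) for a in logits))
    return [a - log_norm for a in logits]


def j0(batch_logits, labels):
    """Negative log likelihood J0(w): sum over patterns of -log p(t_n | x_n; w)."""
    return -sum(log_softmax(logits)[t] for logits, t in zip(batch_logits, labels))


def neg_log_gaussian_prior(weights, alpha):
    """-log N(w; 0, alpha^{-1} I) for a flat list of weights (alpha is assumed notation)."""
    d = len(weights)
    quadratic = 0.5 * alpha * sum(w * w for w in weights)  # the L2 penalty
    constant = -0.5 * d * math.log(alpha / (2.0 * math.pi))  # independent of w
    return quadratic + constant


def j_map(batch_logits, labels, weights, alpha):
    """MAP criterion J(w) = J0(w) - log p(w; alpha^{-1})."""
    return j0(batch_logits, labels) + neg_log_gaussian_prior(weights, alpha)


# Made-up toy values: two patterns, three classes, four weights.
toy_logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
toy_labels = [0, 2]
toy_weights = [0.3, -1.2, 0.7, 0.0]

print("J0   :", j0(toy_logits, toy_labels))
print("J_MAP:", j_map(toy_logits, toy_labels, toy_weights, alpha=0.1))
```

Because the normalization constant of the prior does not depend on $w$, comparing `j_map` at two weight settings with the same logits leaves only the difference of the $\frac{\alpha}{2}\lVert w \rVert^2$ terms, which is why the MAP criterion is usually written as $J_0(w)$ plus an L2 penalty.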
