Question: nn Figure 2 subprocess 3. clustering Figure 3. Merging data sets For better understanding of the data set, we used operator Correlation matrix in order

nn

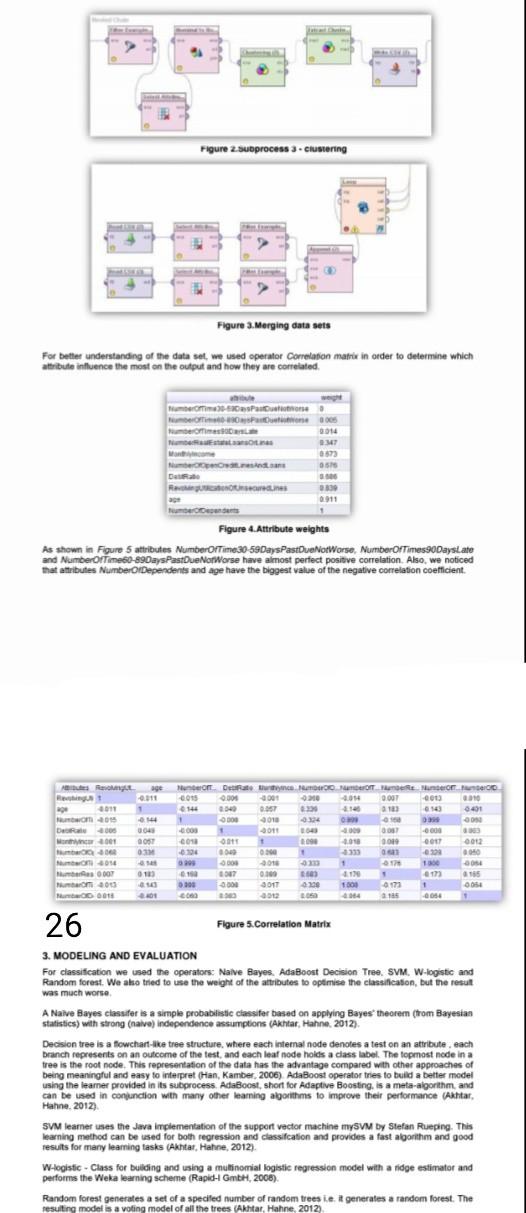

Figure 2 subprocess 3. clustering Figure 3. Merging data sets For better understanding of the data set, we used operator Correlation matrix in order to determine which attribute influence the most on the output and how they are correlated w Osalone One 305 mer 2014 Not 17 M NO 051 De Races 300 0911 DO Figure 4.Attribute weights As shown in Figure 5 attributes NumberOfTime 30 59 Days PastDueNotWorse, NumberOfTimes90Dayslate and NumberOfTime60-89Days PastueNoWorse have almost perfect positive correlation. Also, we noticed that attributes NumberOlDependents and age have the biggest value of the negative correlation coefficient 2010 6400 age Not Deathrync Of Me 2011 2015 9.00 2001 090 2014 0.007 2013 2011 146 0.067 2015 4 + 300 010 DR400 004 1 2011 104 -0.00305 M001 0051 e01 109 900 001 000 6134 0.30 100 om 1014 200 3000 2011 + 1 10 1907 0.100 10 mtaram 400 000 300 2017 6300 1300 073 1 MODO 2.01 co . 20 ES 4.064 2185 -0.084 2013 040 0 0054 26 Figure 5. Correlation Matrix 3. MODELING AND EVALUATION For classification we used the operators: Nalve Bayes. AdaBoost Decision Tree. SVM, W-logistic and Random forest. We also tried to use the weight of the attributes to optimise the classification, but the resul was much worse A Naive Bayes classifer is a simple probabilistic classifer based on applying Bayes theorer (from Bayesian statistics) with strong (nalve) independence assumptions (Akhtar, Hahne. 2012) Decision tree is a flowchart-ike tree structure, where each internal node denotes a test on an attribute branch represents on an outcome of the test, and each leaf node holds a class label. The topmost node in a tree is the root node. This representation of the data has the advantage compared with other approaches of being meaningful and easy to interpret (Han, Kamber, 2006) AdaBoost operator tries to build a better model using the learner provided in its subprocess. AdaBoost, short for Adaptive Boosting, is a meta-algorithm and can be used in conjunction with many other learning algorithms to improve their performance Attar, Hahne. 2012) SVM leamer uses the Java implementation of the support vector machine mySVM by Stefan Rueping. This learning method can be used for both regression and classifcation and provides a fast algorithm and good results for many learning tasks (Akhtar, Hahne, 2012) W-logistic - Class for building and using a mulinomial logistic regression model with a ridge estimator and performs the Weka learning scheme (Rapid GmbH, 2008) Random forest generates a set of a specifed number of random trees iet generates a random forest. The aStep by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock