Question:

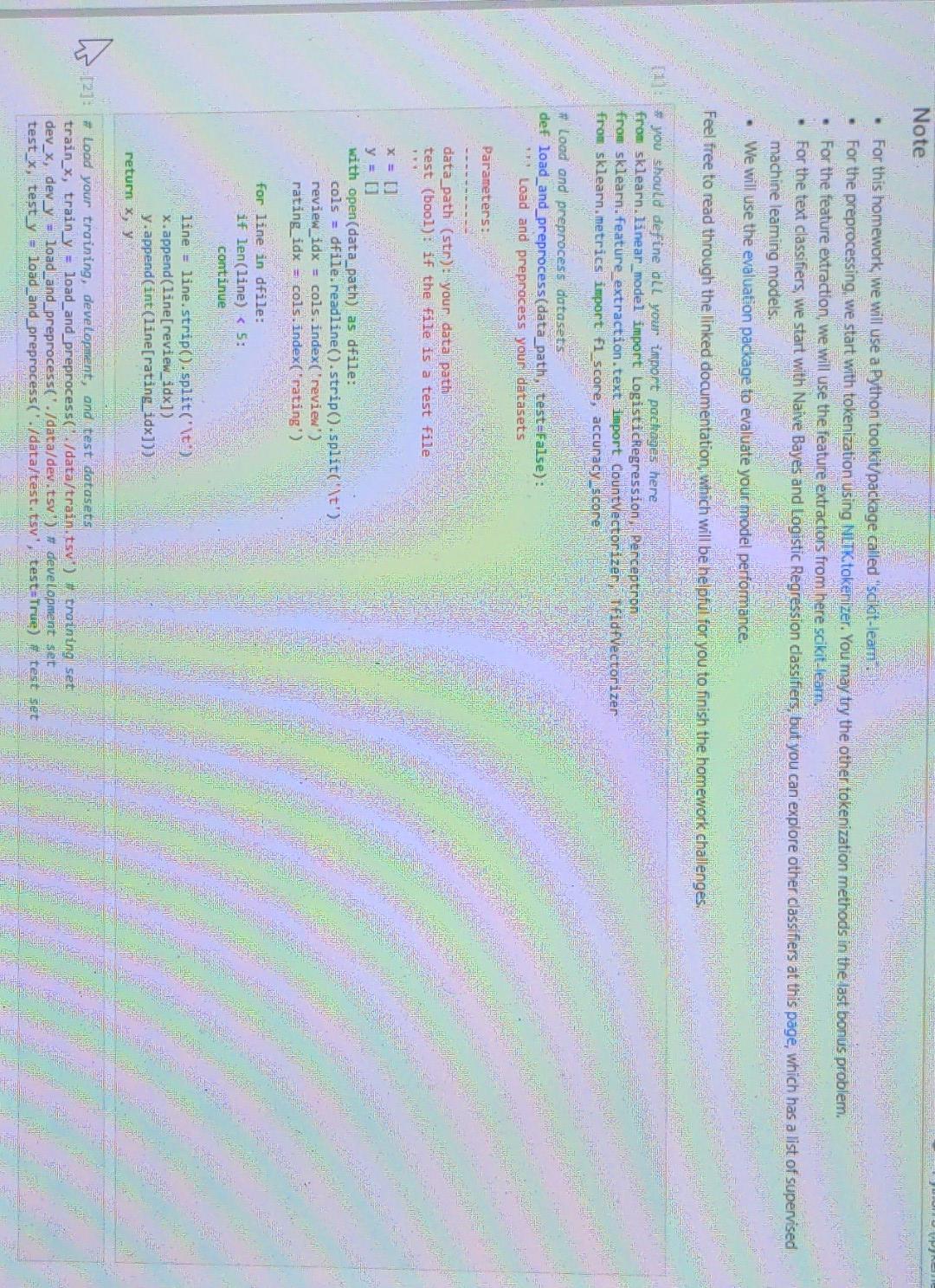

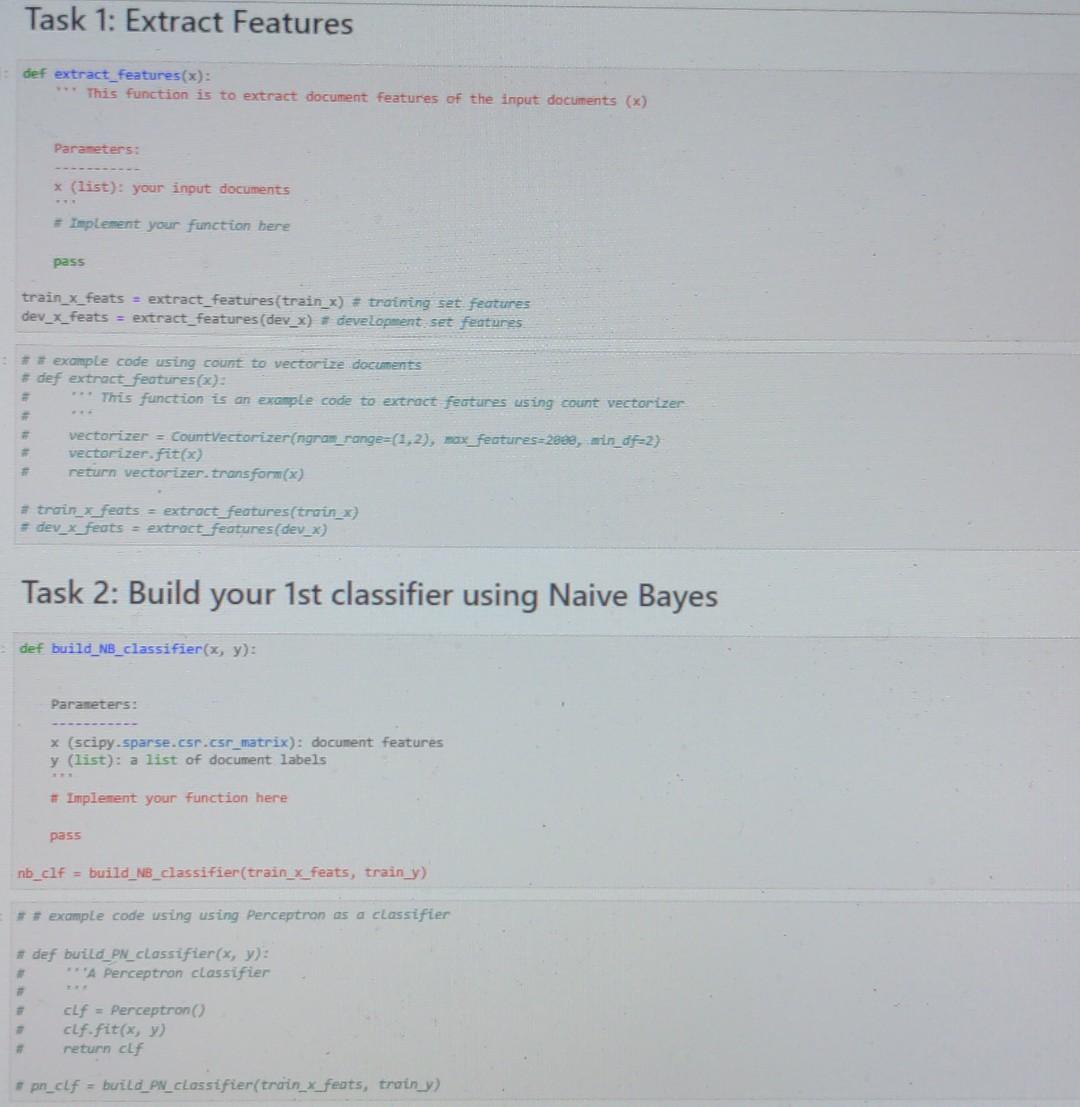

Note:

- For this homework, we will use a Python toolkit/package called scikit-learn.
- For the preprocessing, we start with tokenization using NLTK.tokenizer. You may try the other tokenization methods in the last bonus problem.
- For the feature extraction, we will use the feature extractors from scikit-learn.
- For the text classifiers, we start with Naive Bayes and Logistic Regression classifiers, but you can explore other classifiers at this page, which has a list of supervised machine learning models.
- We will use the evaluation package to evaluate your model performance.

Feel free to read through the linked documentation, which will be helpful for you to finish the homework challenges.

    # you should define all your import packages here
    from sklearn.linear_model import LogisticRegression, Perceptron
    from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
    from sklearn.metrics import f1_score, accuracy_score

    # Load and preprocess datasets
    def load_and_preprocess(data_path, test=False):
        '''Load and preprocess your datasets
        Parameters:
            data_path (str): your data path
            test (bool): if the file is a test file
        '''
        x = []
        y = []
        with open(data_path) as dfile:
            # the first line is the header; locate the two columns we need
            cols = dfile.readline().strip().split('\t')
            review_idx = cols.index('review')
            rating_idx = cols.index('rating')
            for line in dfile:
                # skip empty or near-empty lines
                if len(line) < 5:
                    continue
                line = line.strip().split('\t')
                x.append(line[review_idx])
                y.append(int(line[rating_idx]))
        return x, y

    # Load your training, development, and test datasets
    train_x, train_y = load_and_preprocess('./data/train.tsv')  # training set
    dev_x, dev_y = load_and_preprocess('./data/dev.tsv')  # development set
    test_x, test_y = load_and_preprocess('./data/test.tsv', test=True)  # test set

Task 1: Extract Features

    def extract_features(x):
        '''This function is to extract document features of the input documents (x)
        Parameters:
            x (list): your input documents
        '''
        # Implement your function here
        pass

    train_x_feats = extract_features(train_x)  # training set features
    dev_x_feats = extract_features(dev_x)  # development set features

    # # example code using count to vectorize documents
    # def extract_features(x):
    #     '''This function is an example code to extract features using count vectorizer'''
    #     vectorizer = CountVectorizer(ngram_range=(1,2), max_features=2000, min_df=2)
    #     vectorizer.fit(x)
    #     return vectorizer.transform(x)
    # train_x_feats = extract_features(train_x)
    # dev_x_feats = extract_features(dev_x)

Task 2: Build your 1st classifier using Naive Bayes

    def build_NB_classifier(x, y):
        '''
        Parameters:
            x (scipy.sparse.csr.csr_matrix): document features
            y (list): a list of document labels
        '''
        # Implement your function here
        pass

    nb_clf = build_NB_classifier(train_x_feats, train_y)

    # # example code using Perceptron as a classifier
    # def build_PN_classifier(x, y):
    #     '''A Perceptron classifier'''
    #     clf = Perceptron()
    #     clf.fit(x, y)
    #     return clf
    # pn_clf = build_PN_classifier(train_x_feats, train_y)
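One possible way to fill in Task 1 and Task 2 is sketched below. It is not the assignment's reference solution. It assumes `TfidfVectorizer` for features and `MultinomialNB` from `sklearn.naive_bayes` for the classifier (an import the notebook's import cell does not list), and it uses hypothetical toy documents in place of the TSV files. The vectorizer is fitted once on the training documents and then reused, so training and development features share the same columns.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import accuracy_score, f1_score
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy data standing in for the train/dev splits from the TSV files.
toy_train_x = [
    "great food and friendly staff",
    "terrible service and cold food",
    "really great place, great food",
    "cold and terrible, never again",
]
toy_train_y = [1, 0, 1, 0]
toy_dev_x = ["great staff", "terrible cold food"]
toy_dev_y = [1, 0]

# Task 1 (sketch): fit the vectorizer once on the training documents only,
# then reuse it so dev/test features share the same vocabulary and columns.
vectorizer = TfidfVectorizer(ngram_range=(1, 2))
vectorizer.fit(toy_train_x)


def extract_features(x):
    """Map a list of raw documents to a sparse TF-IDF feature matrix."""
    return vectorizer.transform(x)


# Task 2 (sketch): multinomial Naive Bayes trained on the sparse features.
def build_NB_classifier(x, y):
    """Train and return a MultinomialNB classifier."""
    clf = MultinomialNB()
    clf.fit(x, y)
    return clf


train_feats = extract_features(toy_train_x)
dev_feats = extract_features(toy_dev_x)
nb_clf = build_NB_classifier(train_feats, toy_train_y)

# Evaluate on the development split with the metrics the notebook imports.
dev_pred = nb_clf.predict(dev_feats)
dev_acc = accuracy_score(toy_dev_y, dev_pred)
dev_f1 = f1_score(toy_dev_y, dev_pred, average="macro")
print(dev_acc, dev_f1)
```

With the real data, `toy_train_x` and `toy_dev_x` would be replaced by `train_x` and `dev_x`. Settings such as `max_features` or `min_df` are worth tuning on the development set rather than copied from this sketch.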

Step by Step Solution


There are 3 Steps involved in it

Let's break down the tasks and provide explanations for each part.

Load and Preprocess Datasets

In thi...
