Question:

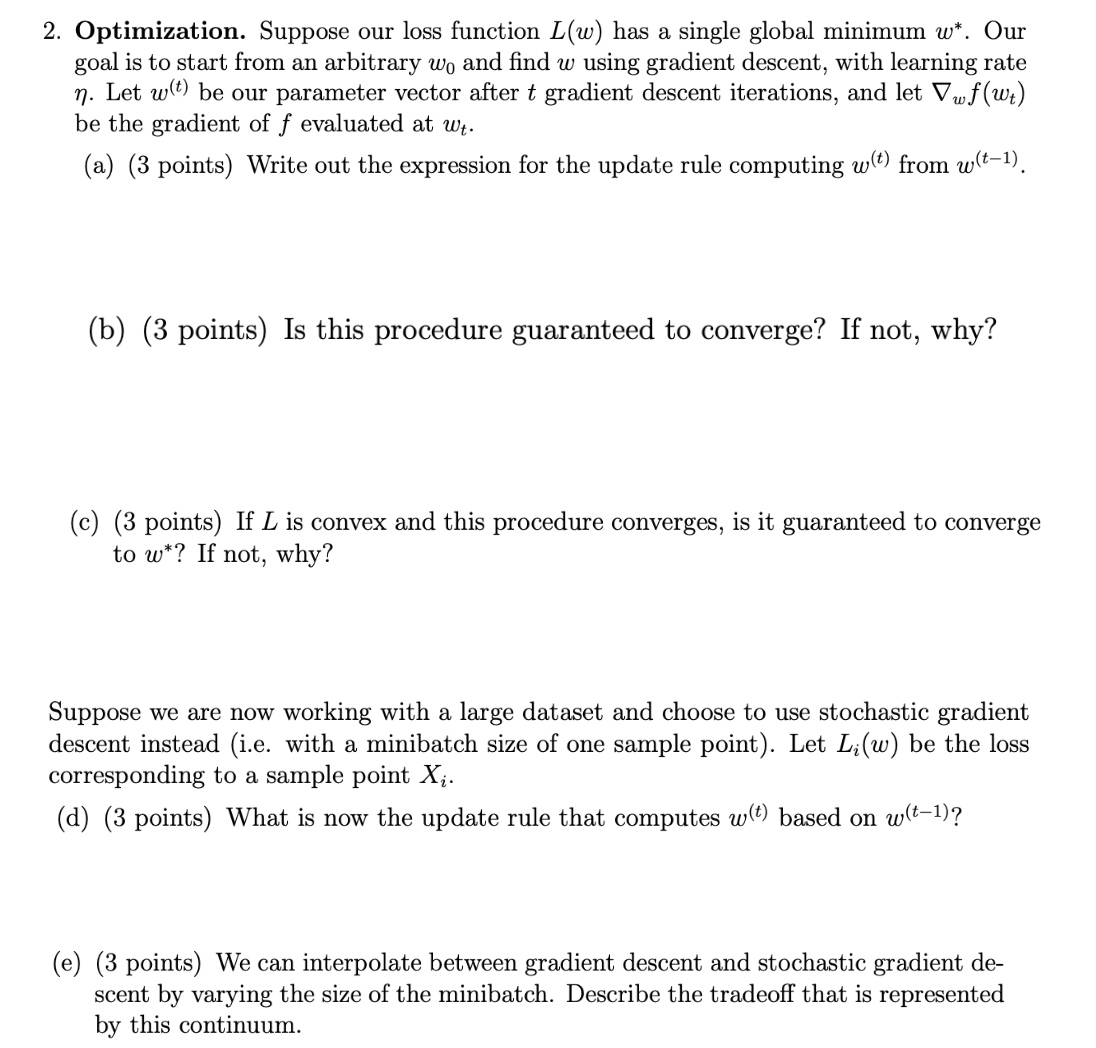

Optimization. Suppose our loss function $L(w)$ has a single global minimum $w^*$. Our goal is to start from an arbitrary $w^{(0)}$ and find $w^*$ using gradient descent, with learning rate $\eta$. Let $w^{(t)}$ be our parameter vector after $t$ gradient descent iterations, and let $\nabla_w L(w^{(t)})$ be the gradient of $L$ evaluated at $w^{(t)}$.

(a) (3 points) Write out the expression for the update rule computing $w^{(t)}$ from $w^{(t-1)}$.

(b) (3 points) Is this procedure guaranteed to converge? If not, why?

(c) (3 points) If $L$ is convex and this procedure converges, is it guaranteed to converge to $w^*$? If not, why?

Suppose we are now working with a large dataset and choose to use stochastic gradient descent instead (i.e. with a minibatch size of one sample point). Let $L_i(w)$ be the loss corresponding to a sample point $X_i$.

(d) (3 points) What is now the update rule that computes $w^{(t)}$ based on $w^{(t-1)}$?

(e) (3 points) We can interpolate between gradient descent and stochastic gradient descent by varying the size of the minibatch. Describe the tradeoff that is represented by this continuum.
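The continuum between gradient descent and stochastic gradient descent can be made concrete with a small sketch. It is an illustration under stated assumptions, not part of the original question: the per-sample loss is assumed to be the scalar squared error $L_i(w) = \tfrac{1}{2}(w x_i - y_i)^2$, the data are made up, and the names `grad_i` and `minibatch_gd` are hypothetical. Each step applies $w \leftarrow w - \eta\, g$, where $g$ is the gradient averaged over the current minibatch.

```python
import random


def grad_i(w, x, y):
    """Gradient of the assumed per-sample loss L_i(w) = 0.5 * (w*x - y)**2."""
    return (w * x - y) * x


def minibatch_gd(xs, ys, w0, eta, batch_size, steps, seed=0):
    """Run `steps` updates w <- w - eta * (mean gradient over a minibatch).

    batch_size == len(xs) gives full-batch gradient descent;
    batch_size == 1 gives stochastic gradient descent.
    """
    rng = random.Random(seed)
    n = len(xs)
    w = w0
    for _ in range(steps):
        # Use every sample for full-batch GD, otherwise draw a random minibatch.
        idx = range(n) if batch_size >= n else rng.sample(range(n), batch_size)
        g = sum(grad_i(w, xs[i], ys[i]) for i in idx) / len(idx)
        w = w - eta * g
    return w


# Made-up, slightly noisy data: no single w fits every point exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# Minimizer of the average loss for this scalar least-squares problem.
w_star = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

w_gd = minibatch_gd(xs, ys, w0=0.0, eta=0.05, batch_size=len(xs), steps=200)
w_sgd = minibatch_gd(xs, ys, w0=0.0, eta=0.05, batch_size=1, steps=200)

print("w*  =", w_star)
print("GD  =", w_gd)
print("SGD =", w_sgd)
```

In this toy setup the full-batch run settles at `w_star`, while the single-sample run costs one gradient evaluation per step but, with a fixed learning rate, keeps moving within the range of the per-sample minimizers $y_i / x_i$ rather than settling exactly. Intermediate values of `batch_size` trade per-step cost against gradient noise.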
