Question:

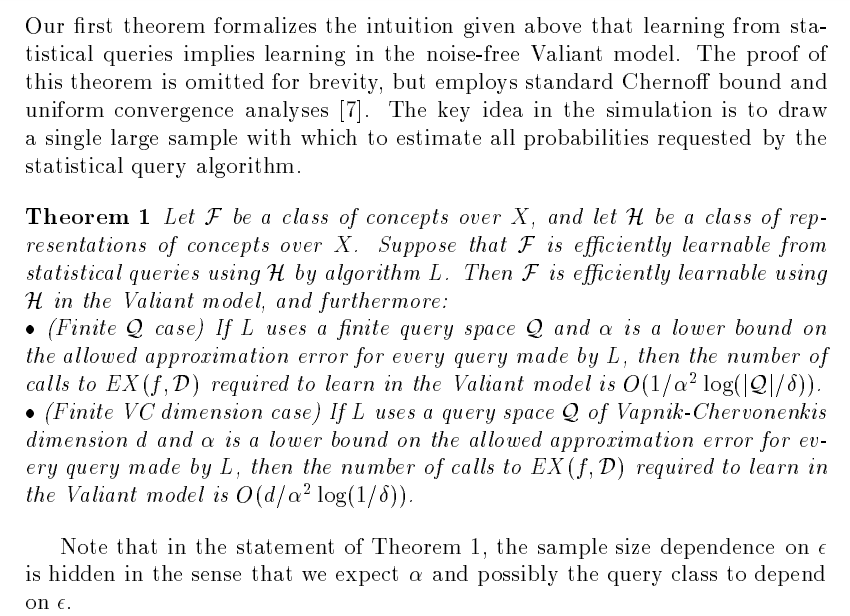

Our first theorem formalizes the intuition given above that learning from statistical queries implies learning in the noise-free Valiant model. The proof of this theorem is omitted for brevity, but it employs standard Chernoff bound and uniform convergence analyses. The key idea in the simulation is to draw a single large sample with which to estimate all probabilities requested by the statistical query algorithm.
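The single-sample simulation can be sketched as follows. This is a minimal illustration, not the paper's construction: the names `draw_sample`, `make_stat_oracle`, `Example` and `Query` are hypothetical, instances are assumed to be floats in [0, 1], and the threshold target concept in the demo is made up. Each statistical query (a predicate on a labeled example) is answered by its empirical frequency on one shared sample. Reusing the same sample for every query is safe only because uniform convergence over the query space makes all estimates accurate simultaneously.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical types for this sketch: an example is (x, f(x)) with x in [0, 1].
Example = Tuple[float, int]
# A statistical query chi maps a labeled example (x, label) to True/False.
Query = Callable[[float, int], bool]


def draw_sample(ex_oracle: Callable[[], Example], m: int) -> List[Example]:
    """Call the example oracle m times; the result is reused for every query."""
    return [ex_oracle() for _ in range(m)]


def make_stat_oracle(sample: List[Example]) -> Callable[[Query], float]:
    """Simulated statistical query oracle: answer each query by its empirical frequency."""
    if not sample:
        raise ValueError("sample must be non-empty")

    def stat(chi: Query) -> float:
        hits = sum(1 for x, label in sample if chi(x, label))
        return hits / len(sample)

    return stat


if __name__ == "__main__":
    rng = random.Random(0)

    def target(x: float) -> int:
        # Hypothetical target concept: threshold at 0.3, with D uniform on [0, 1].
        return 1 if x >= 0.3 else 0

    def ex_oracle() -> Example:
        x = rng.random()
        return (x, target(x))

    stat = make_stat_oracle(draw_sample(ex_oracle, 20000))
    # Pr[f(x) = 1] = 0.7, so this estimate should be approximately 0.7.
    print(stat(lambda x, label: label == 1))
    # Error of the hypothesis "x >= 0.5" is Pr[0.3 <= x < 0.5] = 0.2.
    print(stat(lambda x, label: (1 if x >= 0.5 else 0) != label))
```

The learner only ever sees the returned frequencies, so any statistical query algorithm can be run unchanged on top of `stat`; the sample size is what the theorem's two cases control.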

Theorem 1. Let $\mathcal{F}$ be a class of concepts over $X$, and let $\mathcal{H}$ be a class of representations of concepts over $X$. Suppose that $\mathcal{F}$ is efficiently learnable from statistical queries using $\mathcal{H}$ by algorithm $L$. Then $\mathcal{F}$ is efficiently learnable using $\mathcal{H}$ in the Valiant model, and furthermore:

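(Finite $Q$ case) If $L$ uses a finite query space $Q$ and $\alpha$ is a lower bound on the allowed approximation error for every query made by $L$, then the number of calls to $EX(f, \mathcal{D})$ required to learn in the Valiant model is $O\left(\frac{1}{\alpha^2}\log\frac{|Q|}{\delta}\right)$.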
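To make the finite-case bound concrete, the sketch below computes one explicit sample size. It assumes the two-sided Hoeffding bound $\Pr[|\hat{P}_\chi - P_\chi| > \alpha] \le 2e^{-2m\alpha^2}$ for each query and a union bound over all $|Q|$ queries, which requires $2|Q|e^{-2m\alpha^2} \le \delta$, i.e. $m \ge \frac{1}{2\alpha^2}\ln\frac{2|Q|}{\delta}$. The constants here come from this particular argument, not from the theorem, which is stated only up to $O(\cdot)$; the function name `finite_q_sample_size` is hypothetical.

```python
import math


def finite_q_sample_size(num_queries: int, alpha: float, delta: float) -> int:
    """Number of examples m such that 2 * |Q| * exp(-2 * m * alpha**2) <= delta.

    Hoeffding gives Pr[|estimate - P_chi| > alpha] <= 2 * exp(-2 * m * alpha**2)
    for a single query; a union bound over the |Q| queries yields the condition above.
    """
    if num_queries < 1 or not (0 < alpha < 1) or not (0 < delta < 1):
        raise ValueError("need |Q| >= 1, 0 < alpha < 1 and 0 < delta < 1")
    return math.ceil(math.log(2 * num_queries / delta) / (2 * alpha ** 2))


if __name__ == "__main__":
    # 1000 queries, tolerance alpha = 0.05, failure probability delta = 0.01.
    print(finite_q_sample_size(1000, 0.05, 0.01))  # 2442
```

Because $|Q|$ enters only through the logarithm, multiplying the number of queries by 1000 in this example adds only about $\ln(1000)/(2\alpha^2) \approx 1382$ examples.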

(Finite VC dimension case) If $L$ uses a query space $Q$ of Vapnik-Chervonenkis dimension $d$ and $\alpha$ is a lower bound on the allowed approximation error for every query made by $L$, then the number of calls to $EX(f, \mathcal{D})$ required to learn in the Valiant model is $O\left(\frac{d}{\alpha^2}\log\frac{1}{\delta}\right)$.

Note that in the statement of Theorem 1, the sample size dependence on $\epsilon$ is hidden, in the sense that we expect $\alpha$, and possibly the query class, to depend on $\epsilon$.
