Question: - Part 1: Getting started [2 marks] First off, take a look at the data, target and feature_names entries in the dataset dictionary. They contain

![- Part 1: Getting started [2 marks] First off, take a](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f3938696895_04666f393861e4db.jpg)

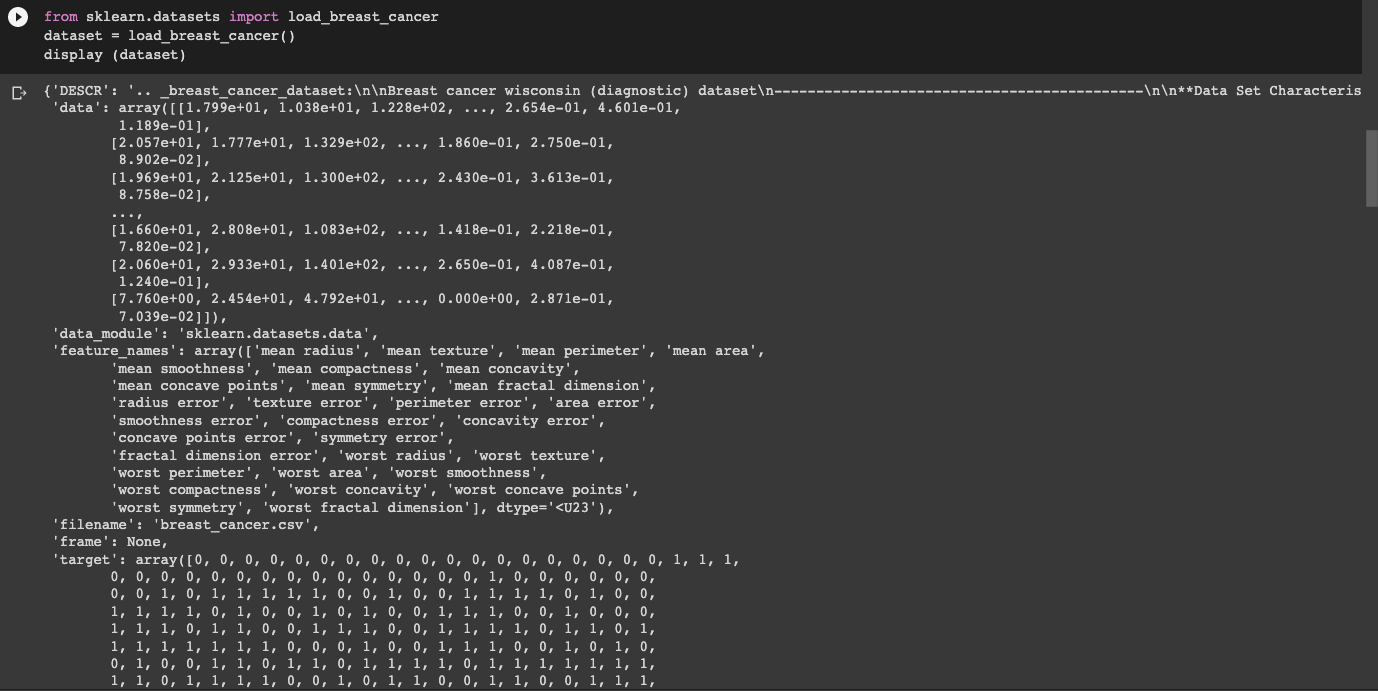

- Part 1: Getting started [2 marks] First off, take a look at the data, target and feature_names entries in the dataset dictionary. They contain the information we'll be working with here. Then, create a Pandas DataFrame called df containing the data and the targets, with the feature names as column headings. If you need help, see here for more details on how to achieve this. (0.4) How many features do we have in this dataset?. How many observations have a 'mean area' of greater than 700? How many participants tested Malignant ? How many participants tested Benign? # Import the required libraries import pandas as pd import numpy as np import sklearn s # Creating Pandas DataFrame Splitting the data It is best practice to have a training set (from which there is a rotating validation subset) and a test set. Our aim here is to (eventually) obtain the best accuracy we can on the test set (we'll do all our tuning on the training/validation sets, however.) Split the dataset into a train and a test set "70:30", use random_state=0. The test set is set aside (untouched) for final evaluation, once hyperparameter optimization is complete. (0.5) from sklearn.datasets import load_breast cancer dataset = load_breast cancer() display (dataset) - **Data Set Characteris {'DESCR': '.. _breast_cancer_dataset: Breast cancer wisconsin (diagnostic) dataset -- 'data': array([[1.799e+01, 1.038e+01, 1.228e+02, ..., 2.654e-01, 4.601e-01, 1.1892-01], [2.057e+01, 1.777e+01, 1.329e+02, ..., 1.8600-01, 2.750e-01, 8.902e-02], [1.969e+01, 2.125e+01, 1.300e+02, ..., 2.430e-01, 3.6130-01, 8.758e-02], [1.660e+01, 2.808e+01, 1.083e+02, ..., 1.418e-01, 2.218e-01, 7.820e-02], [2.060e+01, 2.933e+01, 1.401e+02, ..., 2.650e-01, 4.087e-01, 1.2402-01], [7.760e+00, 2.454e+01, 4.792e+01, ..., 0.000e+00, 2.871e-01, 7.039e-02]]), 'data_module': 'sklearn.datasets.data', 'feature_names': array([ 'mean radius', 'mean texture', 'mean perimeter', 'mean area', 'mean smoothness', 'mean compactness', 'mean concavity', 'mean concave points', 'mean symmetry', 'mean fractal dimension', 'radius error', 'texture error', 'perimeter error', 'area error', 'smoothness error' compactness error', 'concavity error', 'concave points error', 'symmetry error' I 'fractal dimension error', 'worst radius', 'worst texture', 'worst perimeter', 'worst area', 'worst smoothness', 'worst compactness', 'worst concavity', 'worst concave points', 'worst symmetry', 'worst fractal dimension'], dtype='

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts