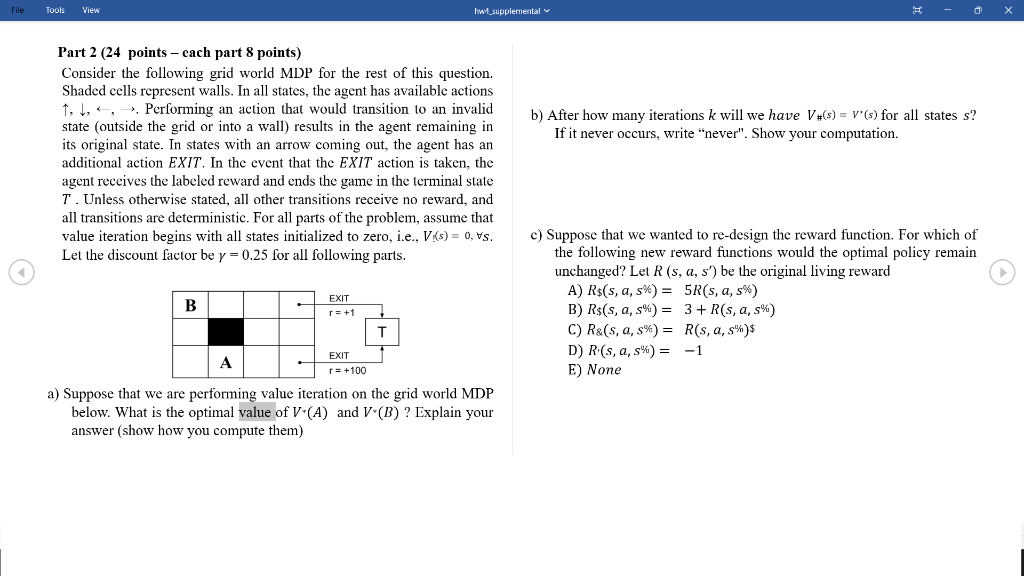

Question: Part 2 (24 points; 8 points per part). Consider the grid world MDP pictured above for the rest of this question. Shaded cells represent walls.

In all states, the agent has the available actions ↑, ↓, ←, →. Performing an action that would transition to an invalid state (outside the grid or into a wall) results in the agent remaining in its original state. In states with an arrow coming out, the agent has an additional action, EXIT. If the EXIT action is taken, the agent receives the labeled reward and the game ends in the terminal state T. Unless otherwise stated, all other transitions receive no reward, and all transitions are deterministic.

For all parts of the problem, assume that value iteration begins with all states initialized to zero, i.e., $V_0(s) = 0$ for all $s$. Let the discount factor be $\gamma = 0.25$ for all following parts.

a) Suppose that we are performing value iteration on the grid world MDP above. What are the optimal values $V^*(A)$ and $V^*(B)$? Explain your answer (show how you compute them).

b) After how many iterations $k$ will we have $V_k(s) = V^*(s)$ for all states $s$?

c) Suppose that we wanted to redesign the reward function. For which of the following new reward functions would the optimal policy remain unchanged? Let $R(s,a,s')$ be the original living reward.

- A) $R'(s,a,s') = 5R(s,a,s')$
- B) $R'(s,a,s') = 3 + R(s,a,s')$
- C) $R'(s,a,s') = \ldots$
- D) $R'(s,a,s') = 1$
- E) None
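The grid itself survives only as an image, so the following is a minimal sketch of value iteration on a small hypothetical layout: the `LAYOUT` strings, the exit cells in `EXITS`, and their rewards (10 and 1) are made up for illustration and are not the grid from the figure. Each backup applies $V_{k+1}(s) = \max\big(r_{\text{exit}}(s),\ \max_{a} \gamma\, V_k(s_a)\big)$, where $s_a$ is the cell reached by move $a$ and the EXIT term exists only at exit cells. This follows the rules stated above: moves earn 0, EXIT earns the labeled reward and leads to T (value 0), transitions are deterministic, and $V_0 = 0$. Assuming nonnegative exit rewards, a state $d$ moves away from an exit worth $r$ gets $\gamma^d r$ along that route, so $V^*(s)$ is the best such $\gamma^d r$ over all exits, and $V_k(s)$ reaches it once $k \ge d + 1$ (the $d$ moves plus the EXIT itself).

```python
# Value iteration sketch for a deterministic grid world with EXIT actions.
# ASSUMPTION: LAYOUT and EXITS are hypothetical; the real grid is only in the figure.
from typing import Dict, List, Tuple

State = Tuple[int, int]

GAMMA = 0.25  # discount factor from the problem statement

# Hypothetical layout: '#' = wall, '.' = open cell.
LAYOUT = [
    "....",
    ".#..",
    "....",
]
# Hypothetical exit cells mapped to their EXIT rewards.
EXITS: Dict[State, float] = {(0, 3): 10.0, (2, 0): 1.0}

MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def open_cells() -> List[State]:
    """All non-wall cells, as (row, col) pairs."""
    return [(r, c) for r, row in enumerate(LAYOUT)
            for c, ch in enumerate(row) if ch != "#"]


def step(s: State, move: Tuple[int, int]) -> State:
    """Deterministic move; hitting a wall or the border leaves the agent in s."""
    r, c = s[0] + move[0], s[1] + move[1]
    if 0 <= r < len(LAYOUT) and 0 <= c < len(LAYOUT[0]) and LAYOUT[r][c] != "#":
        return (r, c)
    return s


def value_iteration(k: int) -> Dict[State, float]:
    """Return V_k after k synchronous Bellman backups, starting from V_0 = 0."""
    # The terminal state T always has value 0, so it is not stored.
    V = {s: 0.0 for s in open_cells()}
    for _ in range(k):
        new_V = {}
        for s in V:
            # Movement actions: reward 0, then the discounted value of the next cell.
            q_values = [GAMMA * V[step(s, m)] for m in MOVES.values()]
            if s in EXITS:
                # EXIT: labeled reward, then T (value 0), so no discounted term.
                q_values.append(EXITS[s])
            new_V[s] = max(q_values)
        V = new_V
    return V


def iterations_to_converge(max_k: int = 100) -> int:
    """Smallest k with V_k == V_{k+1}.

    The backup is a fixed function of V, so once one backup changes nothing,
    no later backup does either, and V_k is the fixed point V*. Exact float
    comparison is fine for this tiny example (scaling by 0.25 is exact in
    binary floating point); general MDPs need a tolerance instead.
    """
    for k in range(max_k):
        if value_iteration(k) == value_iteration(k + 1):
            return k
    raise RuntimeError("no convergence within max_k backups")
```

On this hypothetical grid, `value_iteration(3)[(0, 0)]` is 0.0625 (that is, $1 \cdot 0.25^2$ via the nearer 1-reward exit), while `value_iteration(4)[(0, 0)]` is 0.15625 ($10 \cdot 0.25^3$ via the 10-reward exit), and `iterations_to_converge()` returns 4 because the longest optimal route takes 3 moves plus the EXIT. Applying the same reasoning to parts a) and b) requires substituting the figure's actual layout and exit rewards.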
