Question: Parts (iii) and (iv) only. Thanks!

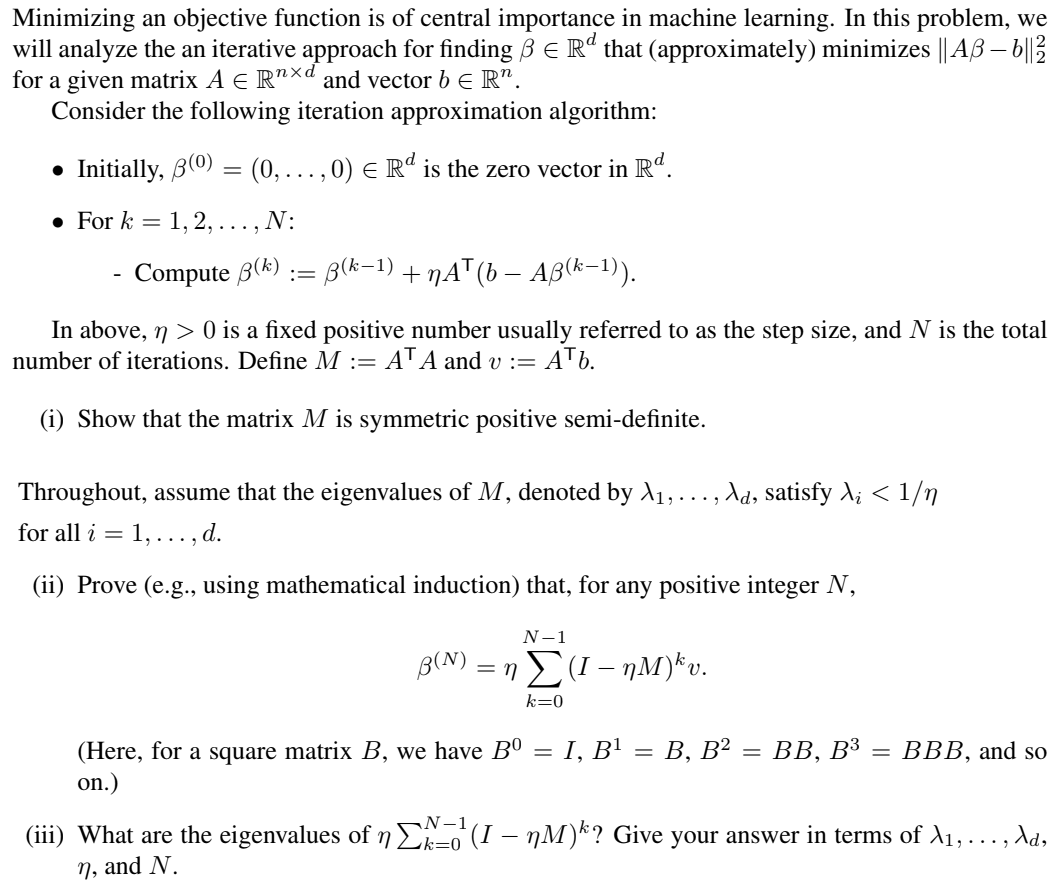

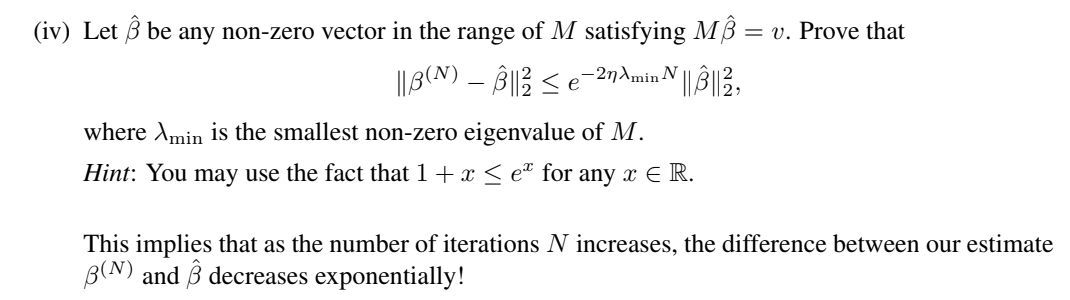

Minimizing an objective function is of central importance in machine learning. In this problem, we will analyze an iterative approach for finding $\beta \in \mathbb{R}^d$ that (approximately) minimizes $\|A\beta - b\|_2^2$ for a given matrix $A \in \mathbb{R}^{n \times d}$ and vector $b \in \mathbb{R}^n$.

Consider the following iterative approximation algorithm. Initially, $\beta^{(0)} = (0, \dots, 0) \in \mathbb{R}^d$ is the zero vector in $\mathbb{R}^d$. For $k = 1, 2, \dots, N$, compute

$$\beta^{(k)} := \beta^{(k-1)} + \eta A^\top \left(b - A\beta^{(k-1)}\right).$$

Here $\eta > 0$ is a fixed positive number usually referred to as the step size, and $N$ is the total number of iterations. Define $M := A^\top A$ and $v := A^\top b$.

(i) Show that the matrix $M$ is symmetric positive semi-definite.

Throughout, assume that the eigenvalues of $M$, denoted by $\lambda_1, \dots, \lambda_d$, satisfy $\lambda_i$ ...
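To make the update rule concrete, here is a minimal sketch of the iteration in plain Python. The function names `matvec`, `transpose`, and `iterate` are hypothetical and not part of the problem, as are the small test matrix, the step size 0.2, and the iteration count. Lists are used so the sketch runs without dependencies. In practice one would likely use NumPy.

```python
def matvec(A, x):
    """Multiply matrix A (list of rows) by vector x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def transpose(A):
    """Return the transpose of A as a list of rows."""
    return [list(col) for col in zip(*A)]


def iterate(A, b, eta, N):
    """Run N steps of beta <- beta + eta * A^T (b - A beta), starting from zero."""
    d = len(A[0])
    At = transpose(A)
    beta = [0.0] * d  # beta^(0) is the zero vector in R^d
    for _ in range(N):
        # residual b - A beta^(k-1)
        residual = [bi - pi for bi, pi in zip(b, matvec(A, beta))]
        # A^T (b - A beta^(k-1)), which equals v - M beta^(k-1)
        step = matvec(At, residual)
        beta = [bj + eta * sj for bj, sj in zip(beta, step)]
    return beta


if __name__ == "__main__":
    # Illustrative data (an assumption): M = A^T A has eigenvalues 1 and 4.
    A = [[1.0, 0.0], [0.0, 2.0]]
    b = [1.0, 2.0]
    print(iterate(A, b, eta=0.2, N=200))
```

For this example the exact minimizer is $\beta = (1, 1)$, and the printed iterate should be very close to it, since each error component shrinks by a factor $|1 - \eta\lambda_i|$ per step (0.8 and 0.2 here). Writing the step as $v - M\beta^{(k-1)}$ gives the equivalent form $\beta^{(k)} = (I - \eta M)\beta^{(k-1)} + \eta v$.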
