Question: Please answer it in Python. A 3-dimensional random vector X takes values from a mixture of four Gaussians. One of these Gaussians represents the class-conditional

Please answer it in Python.

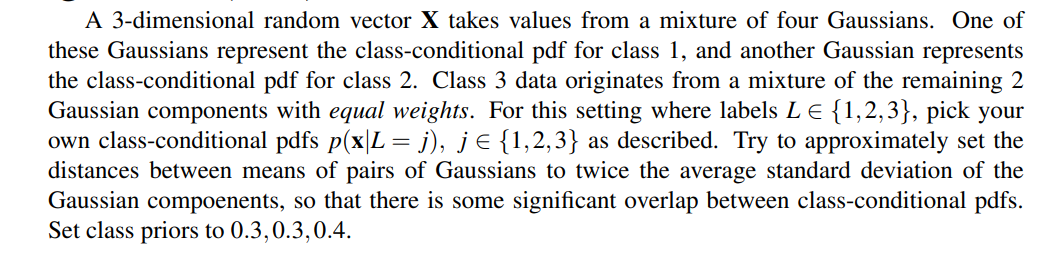

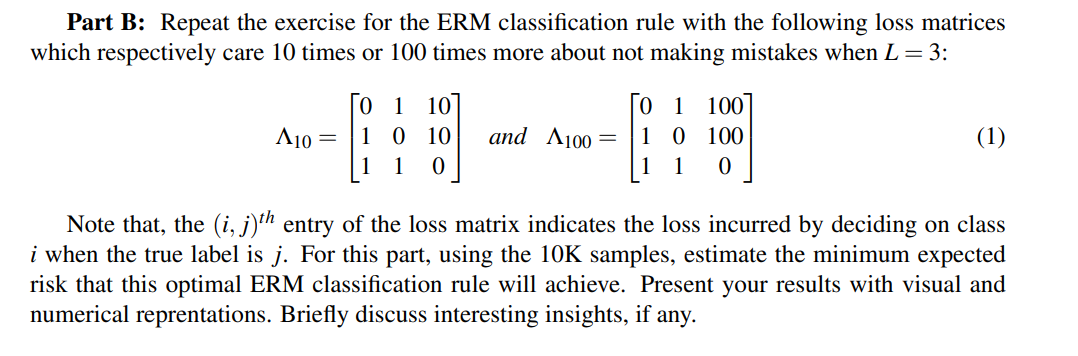

A 3-dimensional random vector X takes values from a mixture of four Gaussians. One of these Gaussians represents the class-conditional pdf for class 1, and another Gaussian represents the class-conditional pdf for class 2. Class 3 data originates from a mixture of the remaining two Gaussian components with equal weights. For this setting, where labels $L \in \{1,2,3\}$, pick your own class-conditional pdfs $p(x \mid L = j)$, $j \in \{1,2,3\}$, as described. Try to approximately set the distances between the means of pairs of Gaussians to twice the average standard deviation of the Gaussian components, so that there is some significant overlap between class-conditional pdfs. Set the class priors to 0.3, 0.3, 0.4.

Part B: Repeat the exercise for the ERM classification rule with the following loss matrices, which respectively care 10 times or 100 times more about not making mistakes when L = 3:

$$\Lambda_{10} = \begin{bmatrix} 0 & 1 & 10 \\ 1 & 0 & 10 \\ 1 & 1 & 0 \end{bmatrix} \quad \text{and} \quad \Lambda_{100} = \begin{bmatrix} 0 & 1 & 100 \\ 1 & 0 & 100 \\ 1 & 1 & 0 \end{bmatrix} \tag{1}$$

Note that the (i, j)th entry of the loss matrix indicates the loss incurred by deciding on class i when the true label is j. For this part, using the 10K samples, estimate the minimum expected risk that this optimal ERM classification rule will achieve. Present your results with visual and numerical representations. Briefly discuss interesting insights, if any.
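One possible way to set this up in Python is sketched below, using only NumPy. Everything not stated in the question is an assumption made for illustration: the component means (vertices of a regular tetrahedron with edge length 2), the identity covariances (standard deviation 1, so every pair of means is two standard deviations apart), the random seed, and all variable and function names. The 0-1 loss is included only as a baseline for comparison. The rule decides the class i that minimizes $\sum_j \Lambda_{ij}\, p(x \mid L = j)\, P(L = j)$, which has the same minimizer as the posterior-weighted risk.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed, an arbitrary choice for reproducibility
N = 10_000
priors = np.array([0.3, 0.3, 0.4])

# Assumed component parameters: unit covariances (standard deviation 1) and
# means on a regular tetrahedron with edge length 2, so every pair of means
# is exactly twice the standard deviation apart.
means = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]) / np.sqrt(2)
covs = np.stack([np.eye(3)] * 4)

# Draw labels (0, 1, 2 stand for classes 1, 2, 3), then a component per sample:
# classes 1 and 2 own components 0 and 1; class 3 picks component 2 or 3 equally.
labels = rng.choice(3, size=N, p=priors)
comp = np.where(labels == 2, 2 + rng.integers(0, 2, size=N), labels)
chol = np.linalg.cholesky(covs)
X = means[comp] + np.einsum("nij,nj->ni", chol[comp], rng.standard_normal((N, 3)))


def gaussian_pdf(X, mean, cov):
    """Evaluate the multivariate normal density at each row of X."""
    diff = X - mean
    quad = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    norm = np.sqrt((2 * np.pi) ** mean.size * np.linalg.det(cov))
    return np.exp(-0.5 * quad) / norm


# Class-conditional pdfs, then prior-weighted joints p(x | L=j) P(L=j), shape (3, N).
comp_pdf = np.stack([gaussian_pdf(X, means[k], covs[k]) for k in range(4)])
class_pdf = np.stack([comp_pdf[0], comp_pdf[1], 0.5 * (comp_pdf[2] + comp_pdf[3])])
joint = priors[:, None] * class_pdf

loss_matrices = {
    "0-1": np.ones((3, 3)) - np.eye(3),
    "10x": np.array([[0, 1, 10], [1, 0, 10], [1, 1, 0]], dtype=float),
    "100x": np.array([[0, 1, 100], [1, 0, 100], [1, 1, 0]], dtype=float),
}

results = {}
for name, loss in loss_matrices.items():
    # Row i of (loss @ joint) is proportional to the conditional risk of deciding i;
    # dividing by p(x) would not change the argmin, so the joint is used directly.
    decisions = np.argmin(loss @ joint, axis=0)
    risk = loss[decisions, labels].mean()  # sample-average loss
    counts = np.zeros((3, 3))
    np.add.at(counts, (decisions, labels), 1)
    confusion = counts / counts.sum(axis=0, keepdims=True)  # P(D=i | L=j)
    results[name] = {"decisions": decisions, "risk": risk, "confusion": confusion}
    print(f"{name}: estimated risk = {risk:.4f}")
    print(np.round(confusion, 3))
```

Because the true pdfs are used, the sample-average loss is an estimate of the minimum expected risk for each loss matrix. Column j of each printed confusion matrix gives the estimated P(D = i | L = j). As the penalty for missing class 3 grows, the rule assigns more of the overlap region to class 3, so class 3 errors shrink while class 1 and class 2 samples are more often mislabeled as class 3. For the visual part, one option is a 3D scatter plot of `X` with correct and incorrect decisions marked differently for each loss matrix.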
