Question: Please answer neatly and quickly.

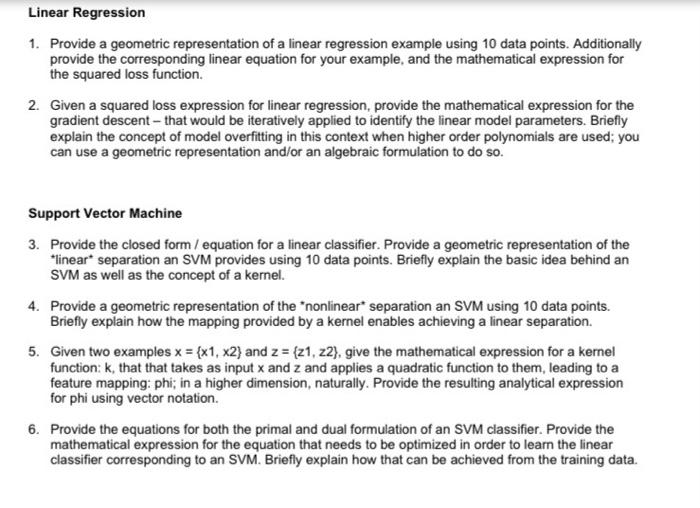

Linear Regression

1. Provide a geometric representation of a linear regression example using 10 data points. Additionally, provide the corresponding linear equation for your example and the mathematical expression for the squared loss function.
2. Given a squared loss expression for linear regression, provide the mathematical expression for the gradient descent update that would be applied iteratively to identify the linear model parameters. Briefly explain the concept of model overfitting in this context when higher-order polynomials are used; you can use a geometric representation and/or an algebraic formulation to do so.

Support Vector Machine

3. Provide the closed form (equation) for a linear classifier. Provide a geometric representation of the linear separation an SVM provides using 10 data points. Briefly explain the basic idea behind an SVM as well as the concept of a kernel.
4. Provide a geometric representation of the nonlinear separation an SVM provides using 10 data points. Briefly explain how the mapping provided by a kernel enables a linear separation.
5. Given two examples x = (x1, x2) and z = (z1, z2), give the mathematical expression for a kernel function k that takes x and z as input and applies a quadratic function to them, leading to a feature mapping φ into a higher-dimensional space. Provide the resulting analytical expression for φ using vector notation.
6. Provide the equations for both the primal and dual formulations of an SVM classifier. Provide the mathematical expression for the objective that must be optimized in order to learn the linear classifier corresponding to an SVM. Briefly explain how this can be achieved from the training data.
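As an illustration of items 1 and 2, here is a minimal pure-Python sketch. It assumes a one-variable model ŷ = w·x + b, the mean squared loss L(w, b) = (1/n) Σ (w·xᵢ + b − yᵢ)², and ten hypothetical data points that are not part of the original question. Each gradient descent step applies w ← w − η·∂L/∂w and b ← b − η·∂L/∂b, where ∂L/∂w = (2/n) Σ (w·xᵢ + b − yᵢ)·xᵢ and ∂L/∂b = (2/n) Σ (w·xᵢ + b − yᵢ). The learning rate η = 0.02 and the 5000 iterations are illustrative choices, not prescribed values.

```python
# Minimal sketch: fit y ~ w*x + b to 10 points by batch gradient descent
# on the mean squared loss L(w, b) = (1/n) * sum((w*x_i + b - y_i)**2).
# The data below are hypothetical, chosen only for illustration.

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
ys = [1.2, 2.9, 5.1, 7.2, 8.8, 11.1, 13.0, 14.9, 17.2, 19.0]


def squared_loss(w, b, xs, ys):
    """Mean squared error of the line y = w*x + b on the data."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient(w, b, xs, ys):
    """Partial derivatives of the mean squared loss with respect to w and b."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    dw = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
    db = 2.0 / n * sum(residuals)
    return dw, db


def gradient_descent(xs, ys, lr=0.02, steps=5000):
    """Repeatedly apply w <- w - lr*dL/dw and b <- b - lr*dL/db, starting at w = b = 0."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        dw, db = gradient(w, b, xs, ys)
        w -= lr * dw
        b -= lr * db
    return w, b


w, b = gradient_descent(xs, ys)
print(f"y = {w:.3f} x + {b:.3f}, loss = {squared_loss(w, b, xs, ys):.4f}")
```

Because this loss is a convex quadratic in (w, b), the iterates should approach the ordinary least-squares line for the same points. For these particular x-values, a learning rate above roughly 0.034 would make the updates diverge, which is why a small η is used here.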

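For items 4 and 5, one common choice (assumed here, since the question does not fix the exact form) is the homogeneous quadratic kernel k(x, z) = (x · z)² = (x1·z1 + x2·z2)². Expanding gives x1²·z1² + 2·x1·x2·z1·z2 + x2²·z2², which equals φ(x) · φ(z) for φ(x) = (x1², √2·x1·x2, x2²)ᵀ. The sketch below checks that identity numerically and shows, on ten hypothetical points, why the map helps: points inside and outside a circle cannot be split by a straight line in the (x1, x2) plane, but the circle x1² + x2² = 1 becomes the plane φ₁ + φ₃ = 1 in feature space. The weights w and b are hand-picked for illustration; they are not learned by an SVM.

```python
import math


def quadratic_kernel(x, z):
    """Homogeneous quadratic kernel k(x, z) = (x . z)**2 for 2-D inputs."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def phi(x):
    """Explicit feature map R^2 -> R^3 such that k(x, z) = phi(x) . phi(z)."""
    x1, x2 = x
    return (x1 ** 2, math.sqrt(2) * x1 * x2, x2 ** 2)


def dot(u, v):
    """Inner product of two equal-length tuples."""
    return sum(a * b for a, b in zip(u, v))


# Kernel trick check on two hypothetical points: both sides agree up to rounding.
x, z = (1.0, 2.0), (3.0, -1.0)
print(quadratic_kernel(x, z), dot(phi(x), phi(z)))

# Hypothetical 10-point data set: class +1 lies inside the unit circle,
# class -1 lies outside radius 1.5, so no straight line in 2-D separates them.
inner = [(0.1, 0.2), (-0.3, 0.4), (0.5, -0.5), (-0.6, -0.2), (0.0, 0.7)]
outer = [(1.5, 0.3), (-1.2, 1.1), (0.2, -1.8), (-1.6, -0.9), (1.0, 1.4)]

# In phi-space the circle x1^2 + x2^2 = 1 becomes the plane phi_1 + phi_3 = 1,
# so the linear rule sign(w . phi(x) + b) with these hand-picked w, b separates them.
w, b = (-1.0, 0.0, -1.0), 1.0


def classify(point):
    """Linear classifier in feature space: +1 inside the circle, -1 outside."""
    return 1 if dot(w, phi(point)) + b > 0 else -1


print([classify(p) for p in inner], [classify(p) for p in outer])
# -> [1, 1, 1, 1, 1] [-1, -1, -1, -1, -1]
```

If an inhomogeneous kernel k(x, z) = (x · z + 1)² is intended instead, the same expansion gives a six-dimensional map φ(x) = (1, √2·x1, √2·x2, x1², √2·x1·x2, x2²)ᵀ.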