Question: Please help with this for deep learning. CODE IN PHYTON. Problem 3 : For a given number of parameters P , let m P (

Please help with this for deep learning. CODE IN PHYTON.

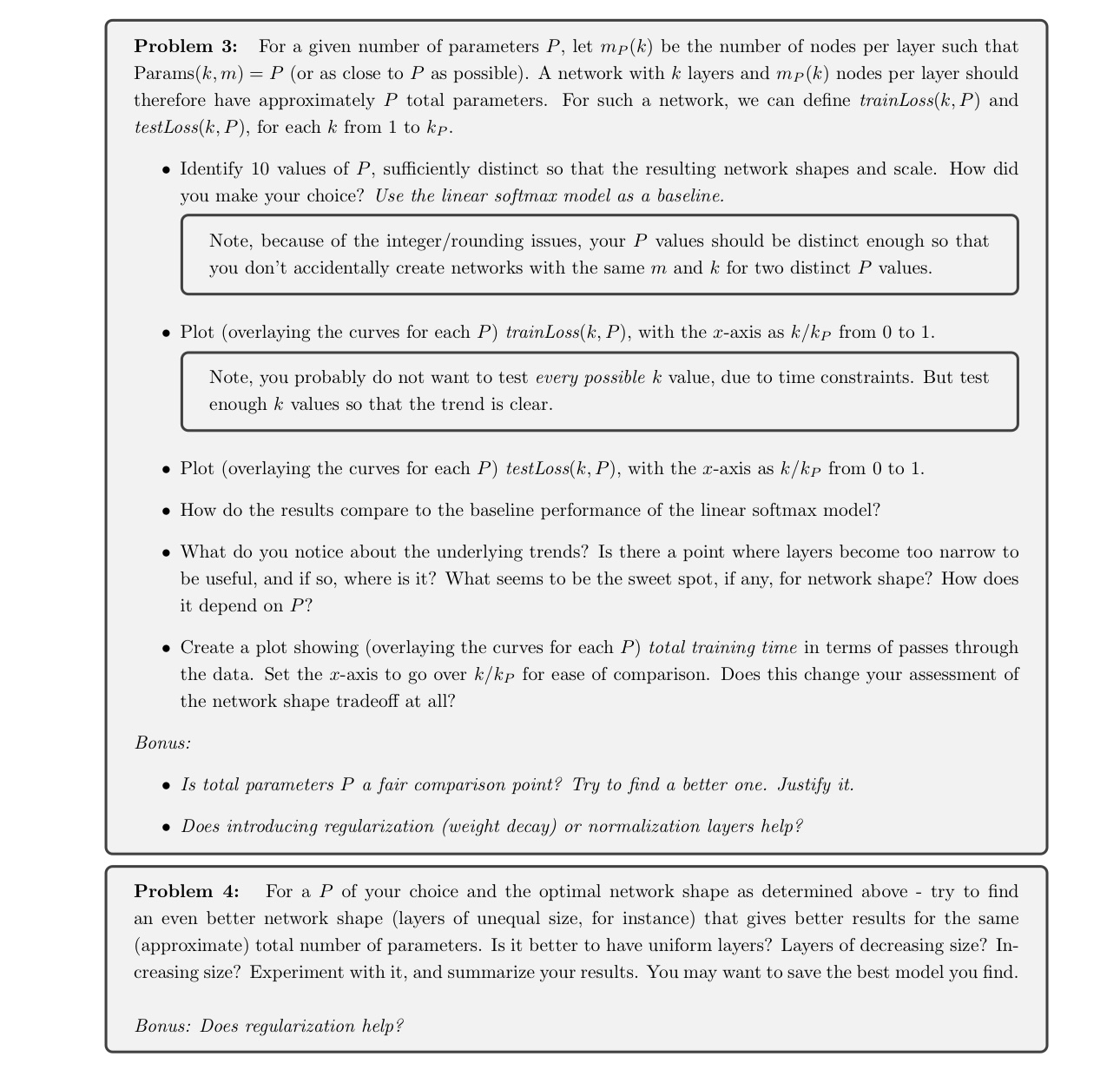

Problem : For a given number of parameters let be the number of nodes per layer such that Params or as close to as possible A network with layers and nodes per layer should therefore have approximately total parameters. For such a network, we can define trainLoss and testLoss for each from to

Identify values of sufficiently distinct so that the resulting network shapes and scale. How did you make your choice? Use the linear softmax model as a baseline.

Note, because of the integerrounding issues, your values should be distinct enough so that you don't accidentally create networks with the same and for two distinct values.

Plot overlaying the curves for each trainLoss with the axis as from to

Note, you probably do not want to test every possible value, due to time constraints. But test enough values so that the trend is clear.

Plot overlaying the curves for each test Los with the axis as from to

How do the results compare to the baseline performance of the linear softmax model?

What do you notice about the underlying trends? Is there a point where layers become too narrow to be useful, and if so where is it What seems to be the sweet spot, if any, for network shape? How does it depend on

Create a plot showing overlaying the curves for each total training time in terms of passes through the data. Set the axis to go over for ease of comparison. Does this change your assessment of the network shape tradeoff at all?

Bonus:

Is total parameters a fair comparison point? Try to find a better one. Justify it

Does introducing regularization weight decay or normalization layers help?

Problem : For a of your choice and the optimal network shape as determined above try to find an even better network shape layers of unequal size, for instance that gives better results for the same approximate total number of parameters. Is it better to have uniform layers? Layers of decreasing size? Increasing size? Experiment with it and summarize your results. You may want to save the best model you find.

Bonus: Does regularization help?

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock