Question: Please help with this for deep learning!! CODE IN PYTHON. 2 Training Effects: Activation Functions, Optimizers, Batch Size. Problem 5: For the model structure


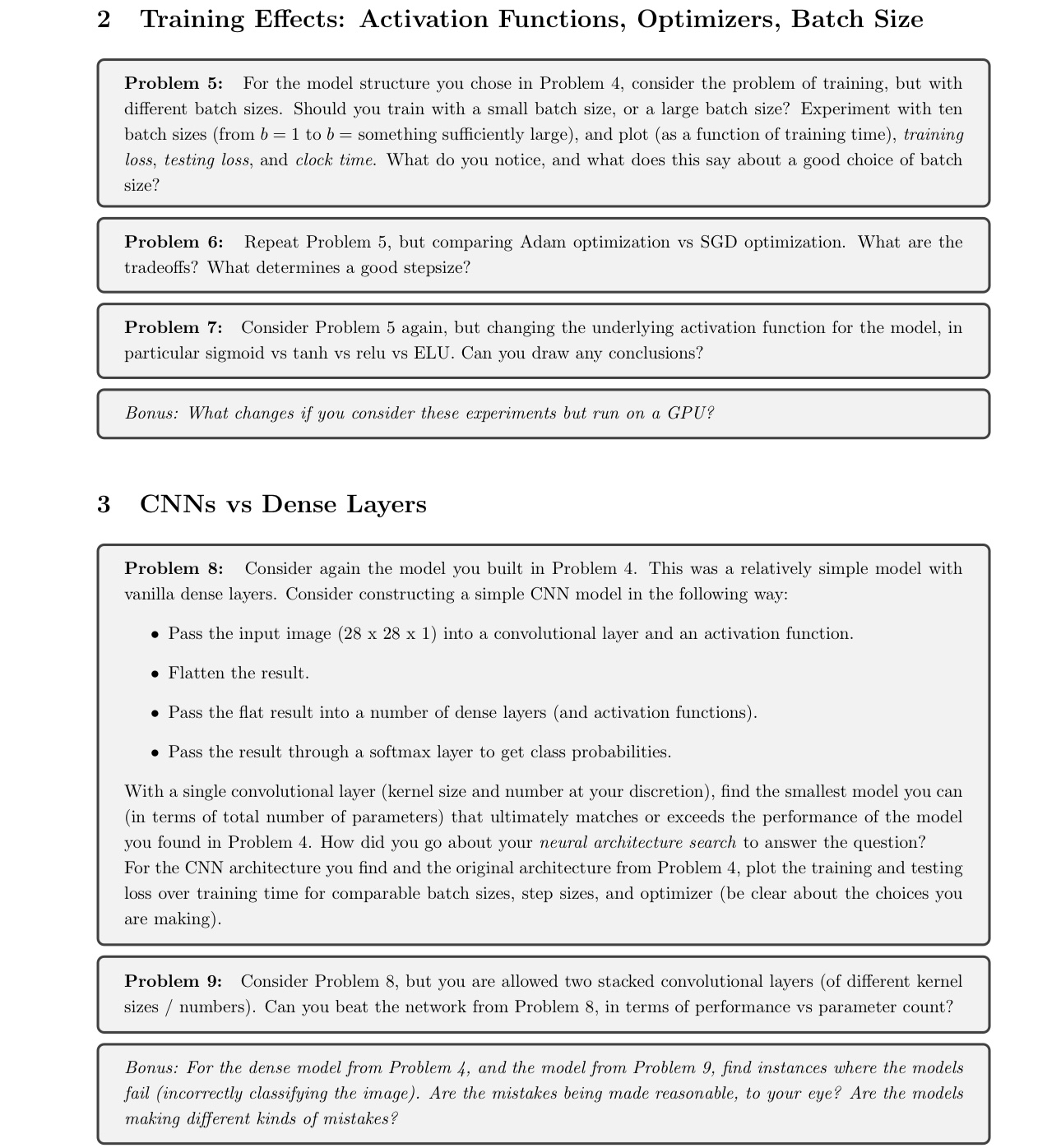

Training Effects: Activation Functions, Optimizers, Batch Size

Problem: For the model structure you chose in Problem, consider the problem of training, but with different batch sizes. Should you train with a small batch size or a large batch size? Experiment with ten batch sizes, from to something sufficiently large, and plot, as a function of training time, the training loss, testing loss, and clock time. What do you notice, and what does this say about a good choice of batch size?
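
One way to set up the batch-size experiment is sketched below. It is only an illustration under loud assumptions: the model is a toy one-variable linear regression trained with hand-written mini-batch SGD (not the network from the assignment), the data are synthetic, and the ten batch sizes (powers of two from 1 to 512), the step size, and the epoch count are placeholder choices. The part that carries over is the bookkeeping: one row of (elapsed seconds, training loss, testing loss) per epoch, for each batch size.

```python
import random
import time

def make_data(n, seed):
    """Synthetic data y = 3x + 1 + noise, a stand-in for the real dataset."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [3.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def mse(w, b, xs, ys):
    """Mean squared error of the line w*x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(batch_size, train_set, test_set, epochs=5, lr=0.1, seed=0):
    """Mini-batch SGD; returns one (seconds, train_loss, test_loss) row per epoch."""
    xs, ys = train_set
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    history = []
    start = time.perf_counter()
    for _ in range(epochs):
        order = list(range(len(xs)))
        rng.shuffle(order)
        for i in range(0, len(order), batch_size):
            batch = order[i:i + batch_size]
            # Gradient of the mean squared error over this batch only.
            gw = sum(2 * (w * xs[j] + b - ys[j]) * xs[j] for j in batch) / len(batch)
            gb = sum(2 * (w * xs[j] + b - ys[j]) for j in batch) / len(batch)
            w -= lr * gw
            b -= lr * gb
        elapsed = time.perf_counter() - start
        history.append((elapsed, mse(w, b, xs, ys), mse(w, b, *test_set)))
    return history

train_set, test_set = make_data(512, seed=1), make_data(128, seed=2)
batch_sizes = [2 ** k for k in range(10)]  # assumed sweep: 1, 2, 4, ..., 512
results = {bs: train(bs, train_set, test_set) for bs in batch_sizes}

for bs, history in results.items():
    seconds, train_loss, test_loss = history[-1]
    print(f"batch={bs:4d}  time={seconds:.4f}s  train={train_loss:.4f}  test={test_loss:.4f}")
```

Each `history` list can be passed to a plotting library to draw loss against epoch or against elapsed seconds. With a fixed step size and a fixed number of epochs, small batches take many more updates per epoch, so in this toy setup they tend to reach a lower loss per epoch but cost more time per epoch.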

Problem: Repeat Problem, but comparing Adam optimization vs. SGD optimization. What are the tradeoffs? What determines a good step size?
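
To make the step-size question concrete, here is a minimal sketch of the two update rules on a toy loss. The quadratic `0.5 * (x**2 + 25 * y**2)` is an assumption chosen only because its two directions have very different curvature; it is not the assignment's model. The Adam update uses the commonly quoted default hyperparameters (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`).

```python
import math

def loss(p):
    """Toy loss f(x, y) = 0.5 * (x**2 + 25 * y**2), badly scaled on purpose."""
    return 0.5 * (p[0] ** 2 + 25.0 * p[1] ** 2)

def grad(p):
    """Gradient of the toy loss."""
    return [p[0], 25.0 * p[1]]

def run_sgd(p, lr, steps):
    """Plain gradient descent: p <- p - lr * g."""
    p = list(p)
    for _ in range(steps):
        g = grad(p)
        p = [pi - lr * gi for pi, gi in zip(p, g)]
    return p

def run_adam(p, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: per-coordinate steps scaled by running gradient statistics."""
    p = list(p)
    m = [0.0] * len(p)  # running mean of gradients
    v = [0.0] * len(p)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(p)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        m_hat = [mi / (1 - beta1 ** t) for mi in m]  # bias correction
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        p = [pi - lr * mh / (math.sqrt(vh) + eps) for pi, mh, vh in zip(p, m_hat, v_hat)]
    return p

start = [1.0, 1.0]
for lr in (0.05, 0.1):
    print(f"SGD  lr={lr}: loss after 50 steps = {loss(run_sgd(start, lr, 50)):.3e}")
print(f"Adam lr=0.1: loss after 200 steps = {loss(run_adam(start, 0.1, 200)):.3e}")
```

On this toy loss, plain gradient descent is stable only when the step size is below 2 divided by the largest curvature (here 2/25 = 0.08), so `lr=0.1` diverges while `lr=0.05` converges. Adam rescales each coordinate by its gradient magnitude, so its very first step moves every coordinate by roughly `lr`, regardless of how steep that direction is.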

Problem: Consider Problem again, but changing the underlying activation function for the model, in particular sigmoid vs. tanh vs. ReLU vs. ELU. Can you draw any conclusions?
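
For reference, the four activation functions and their derivatives can be written out directly. This is a plain-Python sketch for scalar inputs, with ELU's `alpha=1.0` as an assumed default; a deep learning framework would supply vectorized versions.

```python
import math

def sigmoid(x):
    # Numerically stable form: never exponentiates a large positive number.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2

def relu(x):
    return max(0.0, x)

def d_relu(x):
    # Derivative at exactly 0 is taken to be 0 here (a convention).
    return 1.0 if x > 0 else 0.0

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def d_elu(x, alpha=1.0):
    return 1.0 if x > 0 else alpha * math.exp(x)

ACTIVATIONS = {
    "sigmoid": (sigmoid, d_sigmoid),
    "tanh": (math.tanh, d_tanh),
    "relu": (relu, d_relu),
    "elu": (elu, d_elu),
}

# Print each function and its derivative at a few inputs.
for name, (f, df) in ACTIVATIONS.items():
    row = "  ".join(f"f({x:+.0f})={f(x):+.3f} f'={df(x):.3f}" for x in (-4.0, 0.0, 4.0))
    print(f"{name:8s} {row}")
```

The derivatives are what matter for training: the sigmoid's gradient never exceeds 0.25 and both sigmoid and tanh flatten out for large inputs, ReLU passes a gradient of exactly 1 for positive inputs and 0 for negative ones, and ELU keeps a small nonzero gradient on the negative side.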

Bonus: What changes if you consider these experiments but run on a GPU?

CNNs vs Dense Layers

Problem: Consider again the model you built in Problem. This was a relatively simple model with vanilla dense layers. Consider constructing a simple CNN model in the following way:

1. Pass the input image into a convolutional layer and an activation function.
2. Flatten the result.
3. Pass the flat result into a number of dense layers and activation functions.
4. Pass the result through a softmax layer to get class probabilities.
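
The steps above can be traced shape by shape before any model is built. The sketch below assumes an unpadded, stride-1 convolution, and the example sizes (a 28x28 single-channel image, 8 filters of size 3x3, one hidden dense layer of 32 units, 10 classes) are placeholders, since the dataset is not stated in the question. The helper names are made up for illustration.

```python
def conv_output_size(size, kernel, stride=1, padding=0):
    """Spatial size after a convolution: floor((size + 2*padding - kernel) / stride) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def cnn_shapes(height, width, in_channels, conv_filters, kernel, dense_units, num_classes):
    """Return (layer name, output shape) pairs for conv -> flatten -> dense layers -> softmax."""
    out_h = conv_output_size(height, kernel)
    out_w = conv_output_size(width, kernel)
    shapes = [
        ("input", (in_channels, height, width)),
        ("conv + activation", (conv_filters, out_h, out_w)),
        ("flatten", (conv_filters * out_h * out_w,)),
    ]
    for i, units in enumerate(dense_units, start=1):
        shapes.append((f"dense {i} + activation", (units,)))
    # The softmax is applied to a final dense layer with one unit per class.
    shapes.append(("dense (logits) + softmax", (num_classes,)))
    return shapes

# Assumed example sizes; replace with the real image size and class count.
shapes = cnn_shapes(28, 28, 1, conv_filters=8, kernel=3, dense_units=[32], num_classes=10)
for name, shape in shapes:
    print(f"{name:26s} {shape}")
```

With these assumed sizes the convolution produces an 8x26x26 tensor, which flattens to 5408 values. That flattened width is the input size of the first dense layer, so it largely decides how many parameters the model has.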

With a single convolutional layer (kernel size and number at your discretion), find the smallest model you can, in terms of total number of parameters, that ultimately matches or exceeds the performance of the model you found in Problem. How did you go about your neural architecture search to answer the question?
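
One simple way to organize such a search is to compute the parameter count of every candidate first and then try candidates from smallest to largest. The sketch below only does the counting and sorting. It assumes 28x28 single-channel inputs, 10 classes, an unpadded stride-1 convolution, and exactly one hidden dense layer; the grids of filter counts, kernel sizes, and hidden widths are arbitrary placeholders.

```python
from itertools import product

def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def cnn_params(filters, kernel, hidden, size=28, channels=1, classes=10):
    """conv(kernel x kernel, no padding, stride 1) -> flatten -> dense(hidden) -> dense(classes)."""
    conv = filters * (kernel * kernel * channels) + filters
    flat = filters * (size - kernel + 1) ** 2
    return conv + dense_params(flat, hidden) + dense_params(hidden, classes)

def mlp_params(hidden_sizes, n_in=28 * 28, classes=10):
    """Parameter count of a dense-only baseline with the given hidden layer widths."""
    sizes = [n_in, *hidden_sizes, classes]
    return sum(dense_params(a, b) for a, b in zip(sizes, sizes[1:]))

# Candidate grid (assumed values), sorted by total parameter count.
candidates = sorted(
    (cnn_params(f, k, h), f, k, h)
    for f, k, h in product([1, 2, 4, 8], [3, 5, 7], [8, 16, 32])
)

print("dense baseline with one hidden layer of 128 units:", mlp_params([128]))
for total, f, k, h in candidates[:5]:
    print(f"params={total:6d}  filters={f}  kernel={k}x{k}  hidden={h}")
```

From here, each candidate would be trained in order and the search stopped at the first one whose test performance matches the dense baseline. That training step depends on the framework and dataset, so it is left out; a function such as `train_and_evaluate(filters, kernel, hidden)` would be a hypothetical name for it.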

For the CNN architecture you find and the original architecture from Problem, plot the training and testing loss over training time for comparable batch sizes, step sizes, and optimizer (be clear about the choices you are making).

Problem: Consider Problem, but you are allowed two stacked convolutional layers (of different kernel sizes and numbers). Can you beat the network from Problem in terms of performance vs. parameter count?
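
A quick parameter count shows why a second convolutional layer can help on the size side. The sketch assumes 28x28 single-channel inputs, unpadded convolutions, one hidden dense layer of 16 units, and 10 classes. The stride argument is an extra assumption that the problem statement does not mention; it is included only to show how shrinking the feature map affects the dense layer that follows.

```python
def conv_stack_params(layers, size=28, channels=1, hidden=16, classes=10):
    """Total parameters of unpadded conv layers -> flatten -> dense(hidden) -> dense(classes).

    layers is a list of (filters, kernel, stride) tuples, applied in order.
    """
    total = 0
    for filters, kernel, stride in layers:
        total += filters * kernel * kernel * channels + filters  # weights + biases
        size = (size - kernel) // stride + 1                     # new spatial size
        channels = filters
    flat = channels * size * size
    total += flat * hidden + hidden      # first dense layer
    total += hidden * classes + classes  # output layer feeding the softmax
    return total

one_layer = conv_stack_params([(8, 3, 1)])
two_layers = conv_stack_params([(8, 3, 1), (8, 5, 2)])
print("one conv layer: ", one_layer)
print("two conv layers:", two_layers)
```

In this example the second convolution adds about 1,600 parameters of its own but reduces the flattened feature map from 5408 to 968 values, so the first dense layer becomes much smaller and the total count drops. Whether accuracy holds up at that size has to be checked by training.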

Bonus: For the dense model from Problem and the model from Problem, find instances where the models fail (incorrectly classifying the image). Are the mistakes being made reasonable, to your eye? Are the models making different kinds of mistakes?
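
Once both models have produced predicted labels for the test set, finding and comparing their failures is mostly bookkeeping. A minimal sketch follows; the label lists are made up for illustration and are not results from any real model.

```python
from collections import Counter

def misclassified(y_true, y_pred):
    """Indices where the predicted label differs from the true label."""
    return [i for i, (t, p) in enumerate(zip(y_true, y_pred)) if t != p]

def confusion_pairs(y_true, y_pred):
    """Count (true label, predicted label) pairs over the mistakes only."""
    return Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)

# Made-up labels and predictions, for illustration only.
y_true     = [3, 5, 8, 9, 4, 7, 1, 3]
dense_pred = [3, 3, 8, 4, 4, 1, 1, 5]
cnn_pred   = [3, 5, 8, 4, 9, 7, 1, 3]

dense_errors = set(misclassified(y_true, dense_pred))
cnn_errors = set(misclassified(y_true, cnn_pred))

both_wrong = sorted(dense_errors & cnn_errors)  # images neither model gets right
only_dense = sorted(dense_errors - cnn_errors)  # mistakes unique to the dense model
only_cnn = sorted(cnn_errors - dense_errors)    # mistakes unique to the CNN

print("both wrong:", both_wrong)
print("only dense:", only_dense)
print("only CNN:  ", only_cnn)
print("dense confusions:", confusion_pairs(y_true, dense_pred).most_common(3))
```

The index lists can then be used to pull the corresponding images and display them next to the true and predicted labels. The split into shared and model-specific mistakes speaks directly to whether the two models fail in different ways.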
