Question: Please answer only parts (d) through (f), showing the complete work.

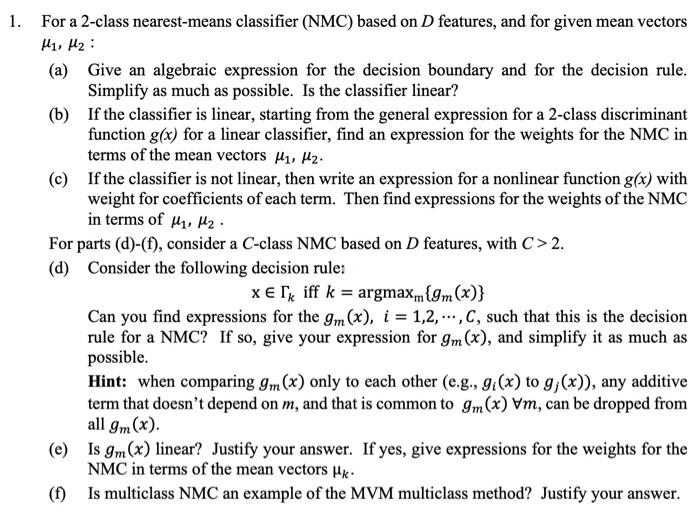

For a 2-class nearest-means classifier (NMC) based on $D$ features, and for given mean vectors $\mu_1, \mu_2$:

(a) Give an algebraic expression for the decision boundary and for the decision rule. Simplify as much as possible. Is the classifier linear?

(b) If the classifier is linear, starting from the general expression for a 2-class discriminant function $g(x)$ for a linear classifier, find an expression for the weights for the NMC in terms of the mean vectors $\mu_1, \mu_2$.

(c) If the classifier is not linear, then write an expression for a nonlinear function $g(x)$ with weights for the coefficients of each term. Then find expressions for the weights of the NMC in terms of $\mu_1, \mu_2$.

For parts (d)-(f), consider a $C$-class NMC based on $D$ features, with $C > 2$.

(d) Consider the following decision rule: assign $x$ to class $k$ iff $k = \arg\max_m \{g_m(x)\}$. Can you find expressions for the $g_m(x)$, $m = 1, 2, \dots, C$, such that this is the decision rule for a NMC? If so, give your expression for $g_m(x)$, and simplify it as much as possible. Hint: when comparing the $g_m(x)$ only to each other (e.g., $g_i(x)$ to $g_j(x)$), any additive term that doesn't depend on $m$, and that is common to $g_m(x)\ \forall m$, can be dropped from all $g_m(x)$.

(e) Is $g_m(x)$ linear? Justify your answer. If yes, give expressions for the weights for the NMC in terms of the mean vectors $\mu_k$.

(f) Is multiclass NMC an example of the MVM multiclass method? Justify your
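As a rough illustration of the hint in part (d), and not the page's own solution: assuming the NMC uses Euclidean distance, expanding $\|x - \mu_m\|^2 = x^T x - 2\mu_m^T x + \mu_m^T \mu_m$ shows that $x^T x$ is common to every class and can be dropped. Negating and halving what remains suggests $g_m(x) = \mu_m^T x - \tfrac{1}{2}\mu_m^T \mu_m$, which is linear in $x$ with weights $w_m = \mu_m$ and $w_{m0} = -\tfrac{1}{2}\|\mu_m\|^2$. The sketch below checks numerically that the argmax of this $g_m$ picks the same class as the nearest mean. The function names and the example means and points are made up for illustration.

```python
# Pure-Python sketch of a C-class nearest-means classifier (NMC).
# All names and data here are hypothetical, chosen only for illustration.

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def nmc_weights(means):
    """Return (w_m, w_m0) per class, with w_m = mu_m and w_m0 = -0.5 * ||mu_m||^2."""
    return [(list(mu), -0.5 * dot(mu, mu)) for mu in means]

def classify_linear(x, weights):
    """Pick k = argmax_m g_m(x), where g_m(x) = w_m^T x + w_m0."""
    scores = [dot(w, x) + w0 for w, w0 in weights]
    return max(range(len(scores)), key=scores.__getitem__)

def classify_nearest(x, means):
    """Reference rule: pick the class whose mean is closest in squared Euclidean distance."""
    dists = [sum((a - b) ** 2 for a, b in zip(x, mu)) for mu in means]
    return min(range(len(dists)), key=dists.__getitem__)

# Made-up example: C = 3 classes, D = 2 features.
means = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
weights = nmc_weights(means)
points = [(1.0, 0.5), (3.5, 1.0), (0.5, 2.5), (2.5, 2.0)]

labels = [classify_linear(x, weights) for x in points]
reference = [classify_nearest(x, means) for x in points]
print(labels)               # class index (0-based) chosen by the linear discriminants
print(labels == reference)  # whether both rules agree on these points
```

On these four points the two rules agree, which is consistent with the algebra above. A numerical check on a handful of points is not a proof, so the written answer to (d) and (e) still needs the expansion spelled out.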
