Question: PLEASE USE PYTHON 3 AND DO NOT USE ANY INBUILT LIBRARIES THAT GIVE YOU A DIRECT ANSWER THANK YOU Theory We discussed in class the

PLEASE USE PYTHON 3

AND DO NOT USE ANY INBUILT LIBRARIES THAT GIVE YOU A DIRECT ANSWER

THANK YOU

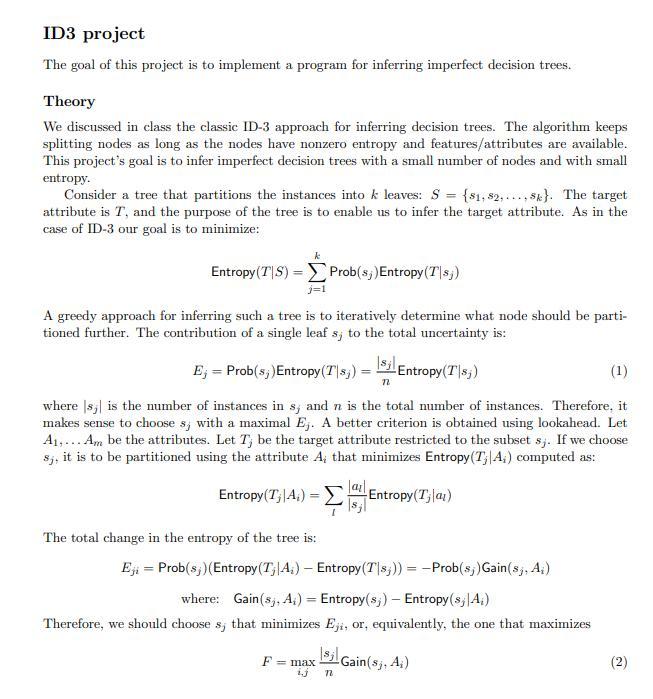

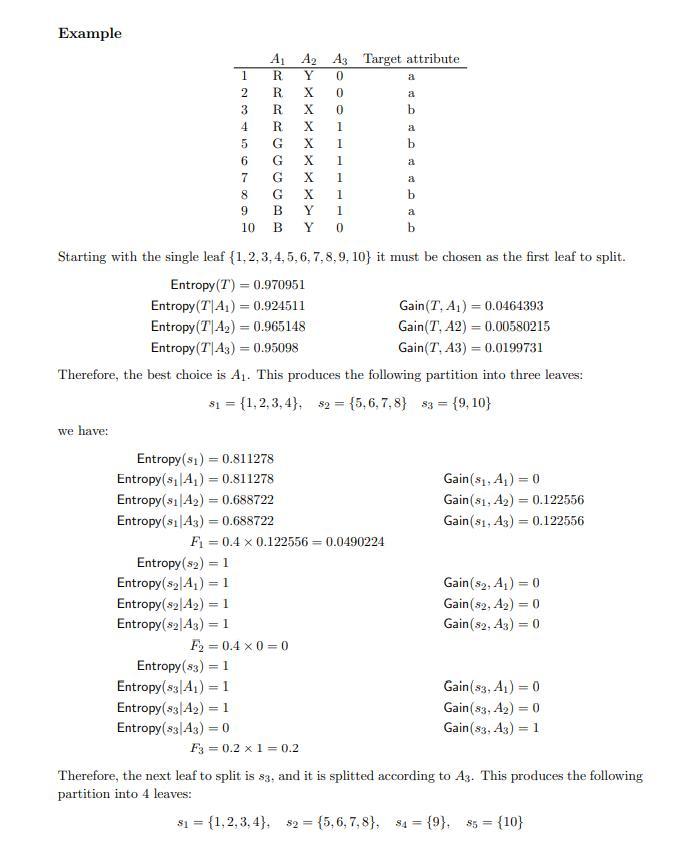

Theory We discussed in class the classic ID-3 approach for inferring decision trees. The algorithm keeps splitting nodes as long as the nodes have nonzero entropy and features/attributes are available. This project's goal is to infer imperfect decision trees with a small number of nodes and with small entropy. Consider a tree that partitions the instances into k leaves: S={s1,s2,,sk}. The target attribute is T, and the purpose of the tree is to enable us to infer the target attribute. As in the case of ID-3 our goal is to minimize: Entropy(TS)=j=1kProb(sj)Entropy(Tsj) A greedy approach for inferring such a tree is to iteratively determine what node should be partitioned further. The contribution of a single leaf sj to the total uncertainty is: Ej=Prob(sj)Entropy(Tsj)=nsjEntropy(Tsj) where sj is the number of instances in sj and n is the total number of instances. Therefore, it makes sense to choose sj with a maximal Ej. A better criterion is obtained using lookahead. Let A1,Am be the attributes. Let Tj be the target attribute restricted to the subset sj. If we choose sj, it is to be partitioned using the attribute Ai that minimizes Entropy (TjAi) computed as: Entropy(TjAi)=lsjalEntropy(Tjal) The total change in the entropy of the tree is: Eji=Prob(sj)(Entropy(TjAi)Entropy(Tsj))=Prob(sj)Gain(sj,Ai)where:Gain(sj,Ai)=Entropy(sj)Entropy(sjAi) Therefore, we should choose sj that minimizes Eji, or, equivalently, the one that maximizes F=maxi,jnsjGain(sj,Ai) Example Starting with the single leaf {1,2,3,4,5,6,7,8,9,10} it must be chosen as the first leaf to split. Entropy(T)Entropy(TA1)Entropy(TA2)Entropy(TA3)=0.970951=0.924511=0.965148=0.95098Gain(T,A1)=0.0464393Gain(T,A2)=0.00580215Gain(T,A3)=0.0199731 Therefore, the best choice is A1. This produces the following partition into three leaves: s1={1,2,3,4},s2={5,6,7,8}s3={9,10} we have: Entropy(s1)=0.811278Entropy(s1A1)=0.811278Gain(s1,A1)=0Entropy(s1A2)=0.688722Gain(s1,A2)=0.122556Entropy(s1A3)=0.688722Gain(s1,A3)=0.122556F1=0.40.122556=0.0490224Entropy(s2)=1Entropy(s2A1)=1Gain(s2,A1)=0Entropy(s2A2)=1Gain(s2,A2)=0Entropy(s2A3)=1Gain(s2,A3)=0F2=0.40=0Entropy(s3)=1Entropy(s3A1)=1Gain(s3,A1)=0Entropy(s3A2)=1Gain(s3,A2)=0Entropy(s3A3)=0Gain(s3,A3)=1F3=0.21=0.2 Therefore, the next leaf to split is s3, and it is splitted according to A3. This produces the following partition into 4 leaves: s1={1,2,3,4},s2={5,6,7,8},s4={9},s5={10} Input/Output The dataset is described in a file containing a matrix of integers. Let m be the number of instances (rows) and let n be the number of features/attributes (columns). The last attribute is taken as the target attribute. Example: You may assume that an attribute has no more than 3 distinct values so that the numbers in each column can be 0,1 or 2 . The target attribute (the last column) is binary, so that its values are 0 or 1 . A partition is described in a text file. For a situation where there are m instances, a partition is described by a sequence of numbers in the range 0m1. Each partition is specified in a separate line of integer numbers. The first number in each line is the partition id. The only requirement is that the partition ids are unique. Continuing with the example above that has 10 instances, here is the file representing the partition {0,9},{1,2,3,4},{5,6,7,8}. The first partition has id =2, the second id =5, and the id of the third is 6 . (These numbers are arbitrary but must be unique.) 209 51234 6578 Your program should read as input one dataset file and one partition file. It should determine which partition to split, and according to what attribute. This information should be printed as a message on the console (standard output). The program should then produce as output the new partition file. The attributes are numbered 0n1. Example: Python id3variant.py dataset-1.txt partition-1.txt partition-2.txt Partition 6 was replaced with partitions 7,8 using Attribute 2 If the file partition-1.txt is as specified above, the file partition-2.txt might be

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts