Question: Problem 1. Word Embedding Problem

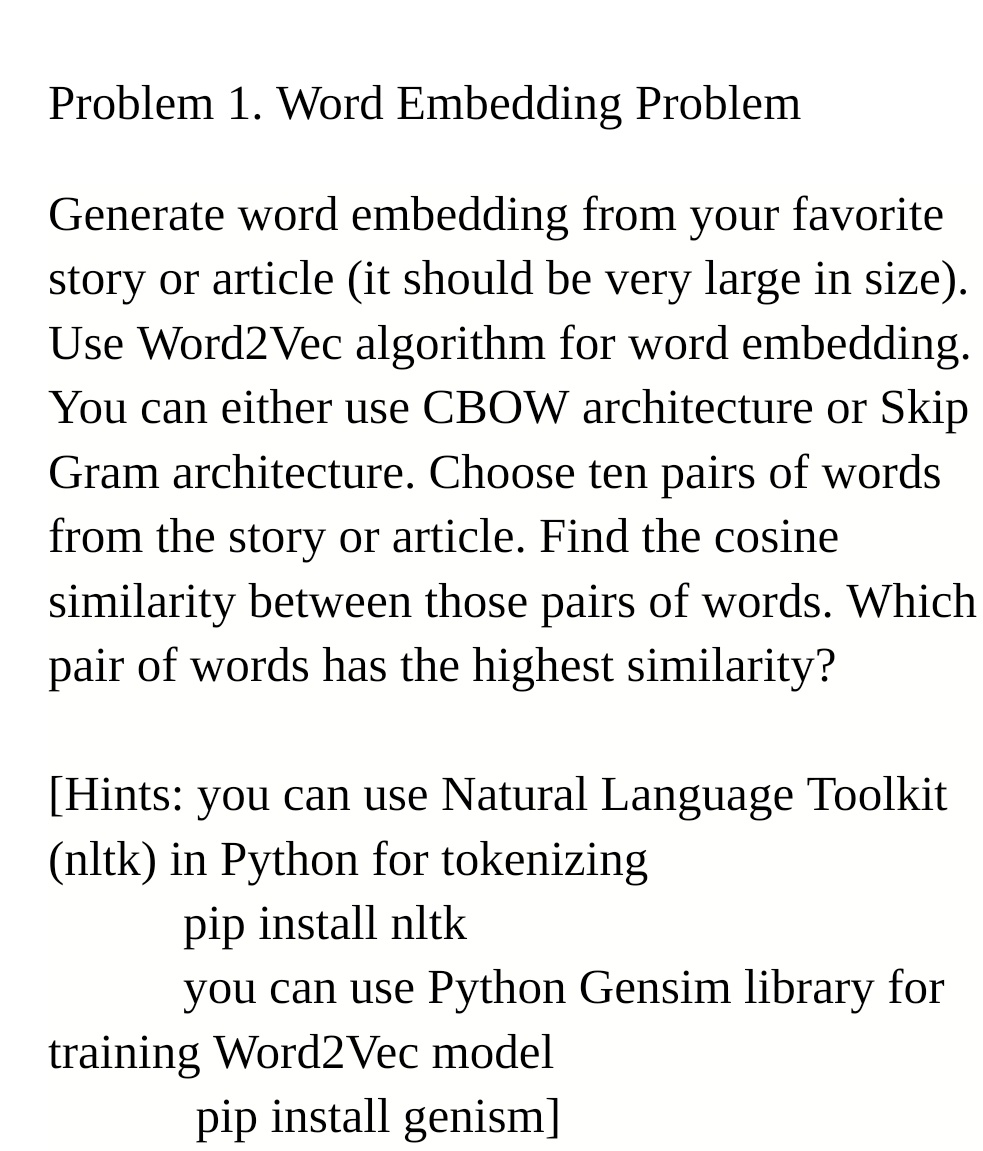


Generate word embeddings from your favorite story or article (it should be very large in size). Use the Word2Vec algorithm for word embedding. You can use either the CBOW architecture or the Skip-gram architecture. Choose ten pairs of words from the story or article. Find the cosine similarity between the words in each pair. Which pair of words has the highest similarity?
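For reference, the cosine similarity of two vectors is their dot product divided by the product of their lengths, so it ranges from -1 (opposite directions) to 1 (same direction). A minimal sketch in plain Python follows; the function name `cosine_similarity` is only an illustrative choice, and in practice a library routine would normally be used on the trained word vectors.

```python
import math


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)
```

Two word vectors that point in nearly the same direction score close to 1, which is what "highest similarity" means in the question.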

Hints: You can use the Natural Language Toolkit (nltk) in Python for tokenizing:

pip install nltk

You can use the Python Gensim library for training the Word2Vec model:

pip install gensim
