Question: Problem 2 . ( 1 5 points ) Consider the following deterministic Markov Decision Process ( MDP ) , describing a simple robot grid world

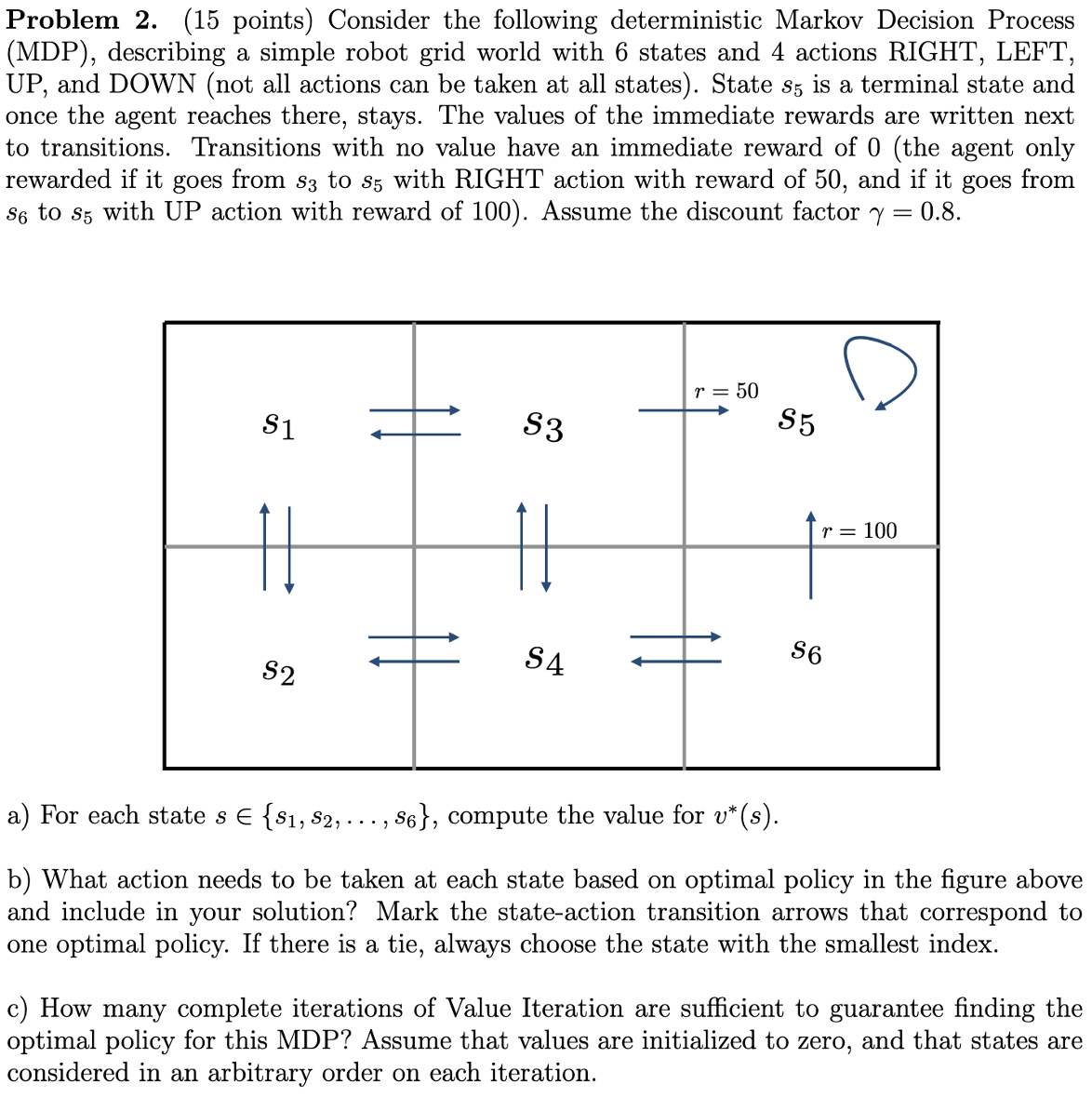

Problem points Consider the following deterministic Markov Decision Process MDP describing a simple robot grid world with states and actions RIGHT, LEFT, UP and DOWN not all actions can be taken at all states State is a terminal state and once the agent reaches there, stays. The values of the immediate rewards are written next to transitions. Transitions with no value have an immediate reward of the agent only rewarded if it goes from to with RIGHT action with reward of and if it goes from to with UP action with reward of Assume the discount factor

a For each state dots, compute the value for

b What action needs to be taken at each state based on optimal policy in the figure above and include in your solution? Mark the stateaction transition arrows that correspond to one optimal policy. If there is a tie, always choose the state with the smallest index.

c How many complete iterations of Value Iteration are sufficient to guarantee finding the optimal policy for this MDP Assume that values are initialized to zero, and that states are considered in an arbitrary order on each iteration.

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock