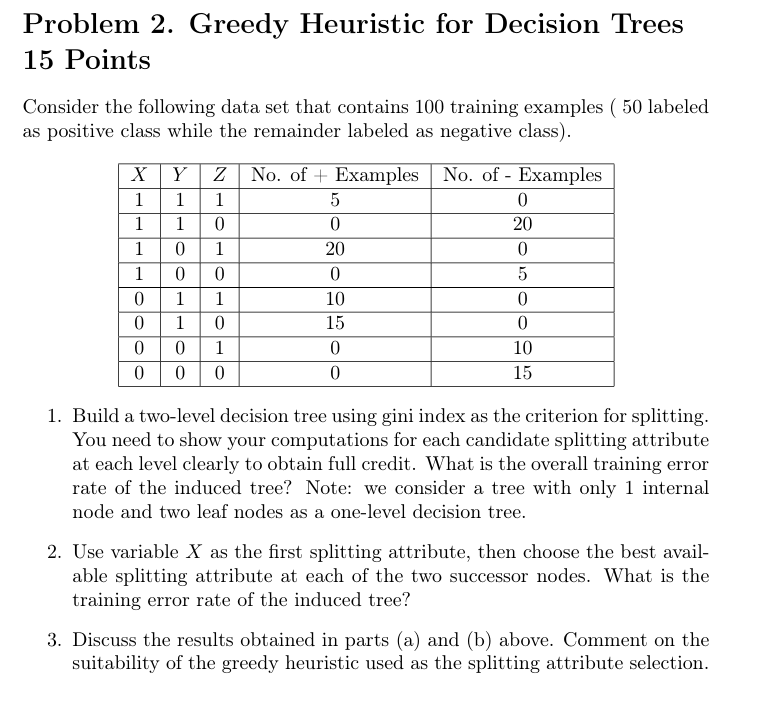

Question: Problem 2. Greedy Heuristic for Decision Trees

Consider the data set shown in the image above, which contains 100 training examples: 50 labeled as the positive class and the remaining 50 labeled as the negative class.
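The 100 training examples are split evenly, 50 per class. So the Gini index of the root node before any split, $1 - \sum_i p_i^2$, follows directly from the class counts. The impurity reduction of each candidate split is measured from this starting value:

```latex
\mathrm{Gini}(\text{root}) = 1 - \left(\frac{50}{100}\right)^2 - \left(\frac{50}{100}\right)^2 = 1 - 0.25 - 0.25 = 0.5
```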

(a) Build a two-level decision tree using the Gini index as the splitting criterion.

Show your computations for each candidate splitting attribute at each level clearly to obtain full credit. What is the overall training error rate of the induced tree? Note: a tree with only one internal node and two leaf nodes is considered a one-level decision tree.
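For reference, the quantities part (a) asks for can be written as shown below. This assumes the usual convention that each leaf predicts the majority class of the training examples it receives. Here $p(i \mid t)$ is the fraction of class-$i$ examples at node $t$. A candidate split sends $n$ examples into $k$ children, with $n_j$ examples at child $j$. $n_\ell^{+}$ and $n_\ell^{-}$ are the positive and negative counts at leaf $\ell$, and $N = 100$ is the training-set size. At each node, the greedy heuristic picks the candidate attribute with the smallest $\mathrm{Gini}_{\text{split}}$.

```latex
\begin{aligned}
\mathrm{Gini}(t) &= 1 - \sum_{i} p(i \mid t)^2 \\
\mathrm{Gini}_{\text{split}} &= \sum_{j=1}^{k} \frac{n_j}{n}\,\mathrm{Gini}(j) \\
\text{training error rate} &= \frac{1}{N} \sum_{\ell \in \text{leaves}} \min\left(n_\ell^{+}, n_\ell^{-}\right)
\end{aligned}
```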

(b) Use variable X as the first splitting attribute, then choose the best available splitting attribute at each of the two successor nodes. What is the training error rate of the induced tree?
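The procedure in parts (a) and (b) can be sketched in Python as below. This is a minimal illustration, not the expected hand solution. It assumes the data set is given as a count table: one row per combination of attribute values, with its positive and negative counts. It also assumes each leaf predicts its majority class. The attribute names Y and Z, the row layout, and the toy counts are hypothetical stand-ins, since the actual table is only available in the image. Substituting the real counts would let you check the hand computations.

```python
from collections import defaultdict

# Assumed input layout (hypothetical): one row per combination of attribute values,
# given as (attribute values, number of positive examples, number of negative examples).


def gini(pos, neg):
    """Gini index 1 - p(+)^2 - p(-)^2 of a node with the given class counts."""
    n = pos + neg
    if n == 0:
        return 0.0
    return 1.0 - (pos / n) ** 2 - (neg / n) ** 2


def class_counts(rows):
    """Total (positive, negative) counts over a list of rows."""
    return sum(r[1] for r in rows), sum(r[2] for r in rows)


def partition(rows, attr):
    """Group rows by their value of `attr`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[0][attr]].append(row)
    return dict(groups)


def split_gini(rows, attr):
    """Weighted Gini index of the children produced by splitting `rows` on `attr`."""
    total = sum(class_counts(rows))
    return sum(
        sum(class_counts(group)) / total * gini(*class_counts(group))
        for group in partition(rows, attr).values()
    )


def best_attribute(rows, attrs):
    """Greedy choice: lowest weighted Gini; ties go to the attribute listed first."""
    return min(attrs, key=lambda a: split_gini(rows, a))


def two_level_tree(rows, attrs, root=None):
    """Return (root attribute, {root value: child attribute}, training error rate).

    root=None picks the root greedily by Gini (part a); root="X" forces X (part b).
    Each leaf predicts its majority class, so it misclassifies its minority count.
    """
    if root is None:
        root = best_attribute(rows, attrs)
    remaining = [a for a in attrs if a != root]
    children, errors = {}, 0
    for value, group in sorted(partition(rows, root).items()):
        child = best_attribute(group, remaining)
        children[value] = child
        for leaf in partition(group, child).values():
            errors += min(class_counts(leaf))
    return root, children, errors / sum(class_counts(rows))


# Hypothetical toy counts (NOT the problem's data): the class is positive iff X != Y,
# while Z is a noisy copy of the class that looks best at the root.
TOY_ROWS = [
    ({"X": 0, "Y": 0, "Z": 0}, 0, 8),
    ({"X": 0, "Y": 0, "Z": 1}, 0, 2),
    ({"X": 0, "Y": 1, "Z": 0}, 2, 0),
    ({"X": 0, "Y": 1, "Z": 1}, 8, 0),
    ({"X": 1, "Y": 0, "Z": 0}, 2, 0),
    ({"X": 1, "Y": 0, "Z": 1}, 8, 0),
    ({"X": 1, "Y": 1, "Z": 0}, 0, 8),
    ({"X": 1, "Y": 1, "Z": 1}, 0, 2),
]

print(two_level_tree(TOY_ROWS, ["X", "Y", "Z"]))            # ('Z', {0: 'X', 1: 'X'}, 0.2)
print(two_level_tree(TOY_ROWS, ["X", "Y", "Z"], root="X"))  # ('X', {0: 'Y', 1: 'Y'}, 0.0)
```

On these toy counts, the Gini criterion prefers Z at the root (weighted Gini about 0.32 versus 0.5 for X and Y). Yet forcing X first yields a two-level tree with zero training error. This shows how a locally best split need not give the lowest error once the second level is added.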

(c) Discuss the results obtained in parts (a) and (b). Comment on the suitability of the greedy heuristic used for selecting splitting attributes.
