Question: 1 Splitting Heuristic for Decision Trees (20 pts) Recall that the ID3 algorithm iteratively grows a decision tree from the root downwards. On each iteration,

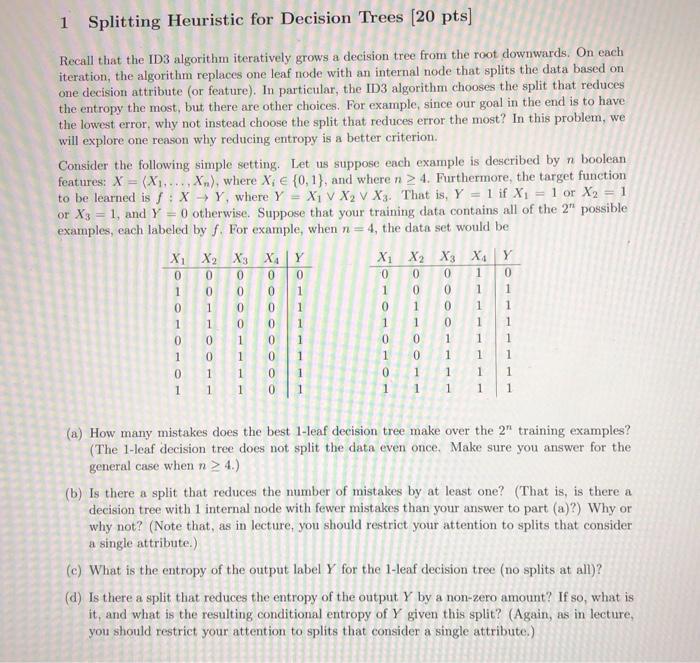

1 Splitting Heuristic for Decision Trees (20 pts) Recall that the ID3 algorithm iteratively grows a decision tree from the root downwards. On each iteration, the algorithm replaces one leaf node with an internal node that splits the data based on one decision attribute (or feature). In particular, the ID3 algorithm chooses the split that reduces the entropy the most, but there are other choices. For example, since our goal in the end is to have the lowest error, why not instead choose the split that reduces error the most? In this problem, we will explore one reason why reducing entropy is a better criterion. Consider the following simple setting. Let us suppose each example is described by v boolean features: X = (X.....X.), where X, {0,1), and where n > 4. Furthermore, the target function to be learned is f : XY, where Y = Xi V X2 V X3. That is, Y = 1 if Xi = 1 or X2 = 1 or X3 = 1, and Y = 0 otherwise. Suppose that your training data contains all of the 2" possible examples, each labeled by f. For example, when n = 4, the data set would be X, X, X3 X | Y X1 X2 X3 X Y 0 0 0 0 0 0 0 1 1 0 0 1 0 0 1 1 1 0 1 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 0 0 1 1 1 0 0 1 0 1 0 1 1 0 1 1 1 0 0 1 1 1 1 1 0 1 0 0 1 0 0 0 1 1 0 1 1 1 1 1 (a) How many mistakes does the best 1-leaf decision tree make over the 2" training examples? (The 1-leaf decision tree does not split the data even once. Make sure you answer for the general case when n > 4.) (b) Is there a split that reduces the number of mistakes by at least one? (That is, is there a decision tree with 1 internal node with fewer mistakes than your answer to part (a)?) Why or why not? (Note that, as in lecture, you should restrict your attention to splits that consider a single attribute.) (c) What is the entropy of the output label Y for the 1-leaf decision tree (no splits at all)? (d) Is there a split that reduces the entropy of the output Y by a non-zero amount? If so, what is it, and what is the resulting conditional entropy of Y given this split? (Again, as in lecture, you should restrict your attention to splits that consider a single attribute.) 1 Splitting Heuristic for Decision Trees (20 pts) Recall that the ID3 algorithm iteratively grows a decision tree from the root downwards. On each iteration, the algorithm replaces one leaf node with an internal node that splits the data based on one decision attribute (or feature). In particular, the ID3 algorithm chooses the split that reduces the entropy the most, but there are other choices. For example, since our goal in the end is to have the lowest error, why not instead choose the split that reduces error the most? In this problem, we will explore one reason why reducing entropy is a better criterion. Consider the following simple setting. Let us suppose each example is described by v boolean features: X = (X.....X.), where X, {0,1), and where n > 4. Furthermore, the target function to be learned is f : XY, where Y = Xi V X2 V X3. That is, Y = 1 if Xi = 1 or X2 = 1 or X3 = 1, and Y = 0 otherwise. Suppose that your training data contains all of the 2" possible examples, each labeled by f. For example, when n = 4, the data set would be X, X, X3 X | Y X1 X2 X3 X Y 0 0 0 0 0 0 0 1 1 0 0 1 0 0 1 1 1 0 1 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 0 0 1 1 1 0 0 1 0 1 0 1 1 0 1 1 1 0 0 1 1 1 1 1 0 1 0 0 1 0 0 0 1 1 0 1 1 1 1 1 (a) How many mistakes does the best 1-leaf decision tree make over the 2" training examples? (The 1-leaf decision tree does not split the data even once. Make sure you answer for the general case when n > 4.) (b) Is there a split that reduces the number of mistakes by at least one? (That is, is there a decision tree with 1 internal node with fewer mistakes than your answer to part (a)?) Why or why not? (Note that, as in lecture, you should restrict your attention to splits that consider a single attribute.) (c) What is the entropy of the output label Y for the 1-leaf decision tree (no splits at all)? (d) Is there a split that reduces the entropy of the output Y by a non-zero amount? If so, what is it, and what is the resulting conditional entropy of Y given this split? (Again, as in lecture, you should restrict your attention to splits that consider a single attribute.)

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts