Question: Problem 3. (50pt) Consider an infinite horizon MDP, characterized by M = (S, A, r,p,y) and r : S A [0,1]. We would like

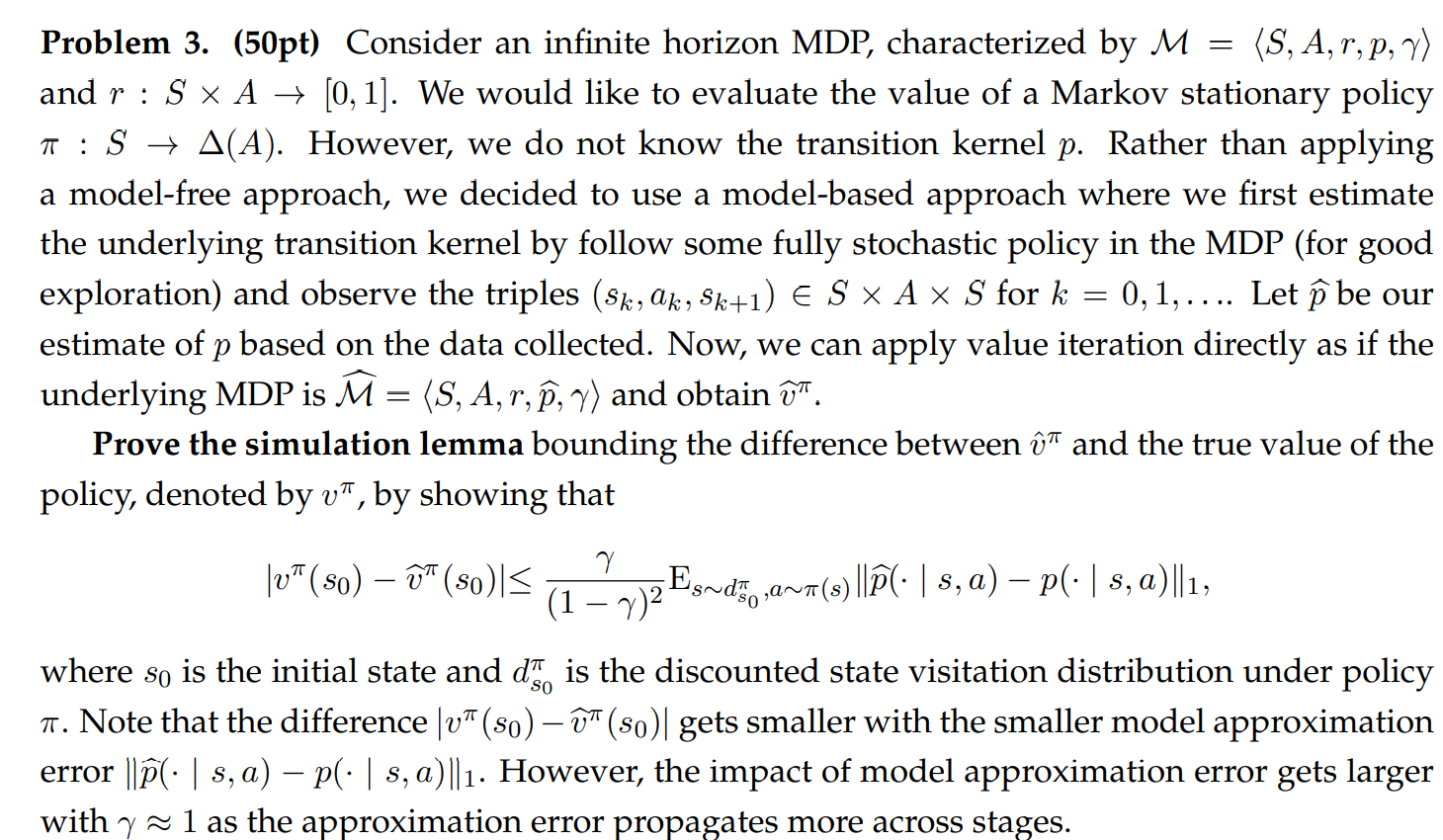

Problem 3. (50pt) Consider an infinite horizon MDP, characterized by M = (S, A, r,p,y) and r : S A [0,1]. We would like to evaluate the value of a Markov stationary policy : S A(A). However, we do not know the transition kernel p. Rather than applying a model-free approach, we decided to use a model-based approach where we first estimate the underlying transition kernel by follow some fully stochastic policy in the MDP (for good exploration) and observe the triples (Sk, ak, Sk+1) S A S for k 0,1,.... Let be our estimate of p based on the data collected. Now, we can apply value iteration directly as if the underlying MDP is M = (S, A, r,p,y) and obtain T. = Prove the simulation lemma bounding the difference between and the true value of the policy, denoted by v", by showing that v (so) (so)| < (1) Es~d~(s) ||( | s, a) p( | s, a)||1, where so is the initial state and do is the discounted state visitation distribution under policy . Note that the difference |vT (80) (so)| gets smaller with the smaller model approximation error ||( | s, a) p( | s, a)||1. However, the impact of model approximation error gets larger with 1 as the approximation error propagates more across stages.

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts