
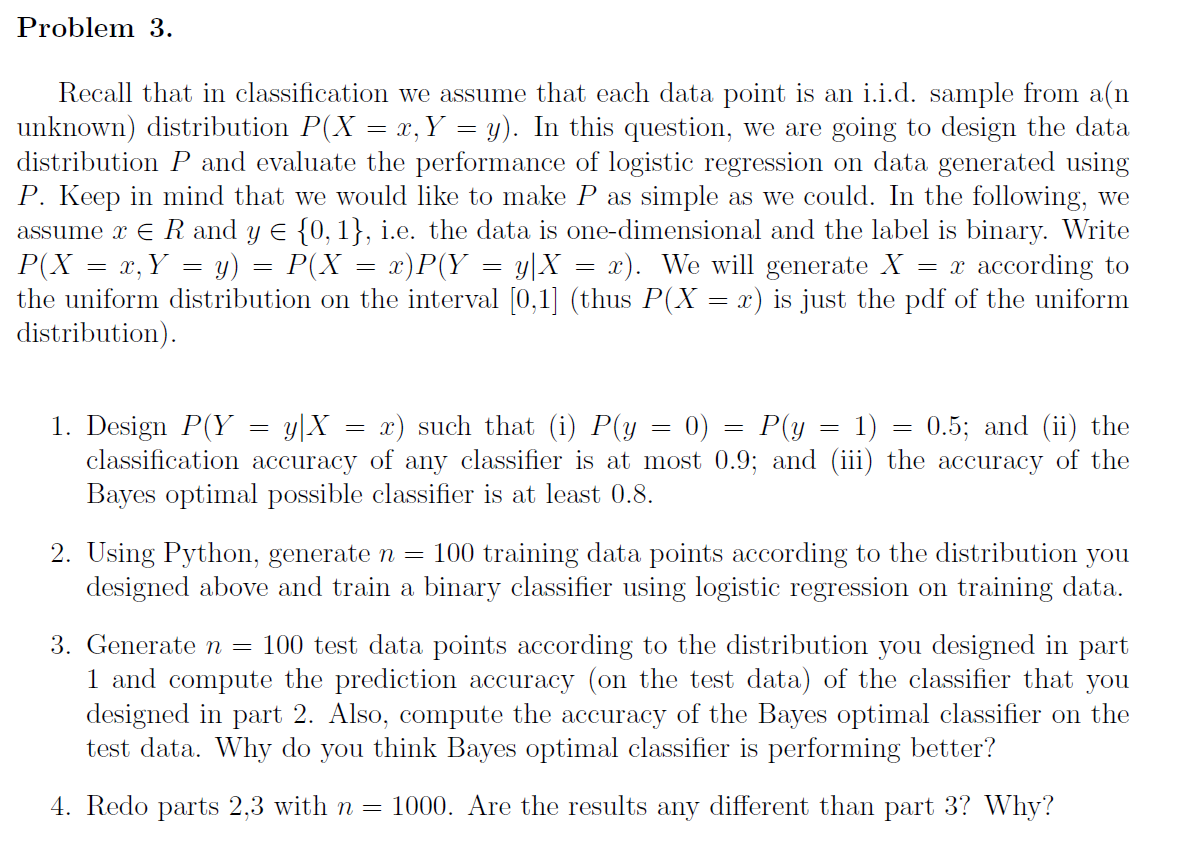

Problem 3. Recall that in classification we assume that each data point is an i.i.d. sample from a(n unknown) distribution $P(X = x, Y = y)$. In this question, we are going to design the data distribution $P$ and evaluate the performance of logistic regression on data generated using $P$. Keep in mind that we would like to make $P$ as simple as we can. In the following, we assume $x \in \mathbb{R}$ and $y \in \{0, 1\}$, i.e. the data is one-dimensional and the label is binary. Write $P(X = x, Y = y) = P(X = x)\,P(Y = y \mid X = x)$. We will generate $X = x$ according to the uniform distribution on the interval $[0, 1]$ (thus $P(X = x)$ is just the pdf of the uniform distribution).

1. Design $P(Y = y \mid X = x)$ such that (i) $P(Y = 0) = P(Y = 1) = 0.5$; (ii) the classification accuracy of any classifier is at most 0.9; and (iii) the accuracy of the Bayes optimal (best possible) classifier is at least 0.8.
2. Using Python, generate $n = 100$ training data points according to the distribution you designed above and train a binary classifier using logistic regression on the training data.
3. Generate $n = 100$ test data points according to the distribution you designed in part 1 and compute the prediction accuracy (on the test data) of the classifier that you trained in part 2. Also, compute the accuracy of the Bayes optimal classifier on the test data. Why do you think the Bayes optimal classifier is performing better?
4. Redo parts 2 and 3 with $n = 1000$. Are the results any different from part 3? Why?
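A minimal sketch of one possible answer follows; the specific design is an assumption, not the official solution. Take $P(Y = 1 \mid X = x) = 0.15$ for $x < 0.5$ and $0.85$ for $x \ge 0.5$. Then $P(Y = 1) = 0.5 \cdot 0.15 + 0.5 \cdot 0.85 = 0.5$, giving (i). The Bayes optimal rule predicts $\hat{y} = \mathbb{1}[x \ge 0.5]$ and errs with probability $0.15$ on either side of the threshold, so its accuracy is $0.85 \ge 0.8$, giving (iii). Since no classifier beats the Bayes rule in expected accuracy, every classifier has accuracy at most $0.85 \le 0.9$, giving (ii). The Python below covers parts 2 to 4. To stay dependency-free it fits logistic regression with plain batch gradient descent on the mean log-loss rather than calling a library such as scikit-learn's `LogisticRegression`. The helper names (`sample`, `fit_logistic_regression`, `run`, and so on), the learning rate, the epoch count, and the seeds are illustrative choices.

```python
import math
import random

# Hypothetical design for part 1 (one possible choice, not the official answer):
# X ~ Uniform[0, 1]; P(Y=1 | X=x) = 0.15 if x < 0.5, else 0.85.
THRESHOLD = 0.5
NOISE = 0.15  # probability that the label disagrees with the Bayes rule


def p_y1_given_x(x):
    """Conditional probability P(Y = 1 | X = x) under the designed distribution."""
    return 1.0 - NOISE if x >= THRESHOLD else NOISE


def sample(n, rng):
    """Draw n i.i.d. pairs: x ~ Uniform[0, 1], then y ~ Bernoulli(P(Y=1 | X=x))."""
    xs = [rng.random() for _ in range(n)]
    ys = [1 if rng.random() < p_y1_given_x(x) else 0 for x in xs]
    return xs, ys


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic_regression(xs, ys, lr=2.0, epochs=2000):
    """Fit P(Y=1 | x) = sigmoid(w*x + b) by batch gradient descent on the mean log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # d(log-loss)/d(logit) for one point
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def accuracy(predict, xs, ys):
    """Fraction of points whose predicted label equals the true label."""
    return sum(predict(x) == y for x, y in zip(xs, ys)) / len(xs)


def bayes_predict(x):
    """Bayes optimal rule: pick the label with the larger posterior P(Y=y | X=x)."""
    return 1 if p_y1_given_x(x) >= 0.5 else 0


def run(n, seed=0):
    """Parts 2-3: train on n points, evaluate both classifiers on n fresh test points."""
    rng = random.Random(seed)
    x_train, y_train = sample(n, rng)
    x_test, y_test = sample(n, rng)
    w, b = fit_logistic_regression(x_train, y_train)

    def logreg_predict(x):
        # Predict 1 when the fitted probability sigmoid(w*x + b) is at least 0.5.
        return 1 if w * x + b >= 0 else 0

    return accuracy(logreg_predict, x_test, y_test), accuracy(bayes_predict, x_test, y_test)


if __name__ == "__main__":
    # Part 4: repeat with the larger sample size and compare.
    for n in (100, 1000):
        lr_acc, bayes_acc = run(n)
        print(f"n={n}: logistic regression {lr_acc:.3f}, Bayes optimal {bayes_acc:.3f}")
```

`run(n)` returns the pair (logistic-regression accuracy, Bayes accuracy) on the test set. Both numbers fluctuate around 0.85 because of label noise and the finite test set; on only 100 test points the empirical accuracy can even land above 0.9 by chance, since the bound in (ii) concerns expected accuracy. Thresholding $\sigma(wx + b)$ at 0.5 gives a threshold rule, so the model class contains the Bayes rule; the Bayes classifier does better because it uses the true $P(Y \mid X)$, while logistic regression must estimate its threshold $-b/w$ from noisy training data. With $n = 1000$ that estimate is typically closer to 0.5, so the gap usually shrinks.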
