Question: Problem #5 (1 point): Stochastic Gradient Descent (SGD) is an iterative method for optimizing an objective function, particularly useful

Problem #5:

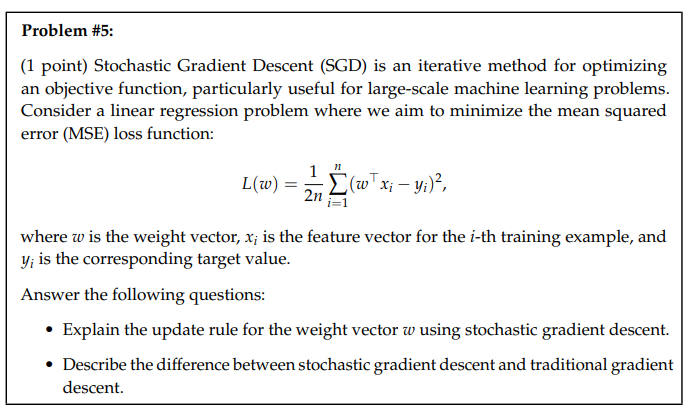

(1 point) Stochastic Gradient Descent (SGD) is an iterative method for optimizing an objective function, particularly useful for large-scale machine learning problems. Consider a linear regression problem where we aim to minimize the mean squared error (MSE) loss function:

$$L(w) = \frac{1}{n} \sum_{i=1}^{n} \left(w^\top x_i - y_i\right)^2$$

where $w$ is the weight vector, $x_i$ is the feature vector for the $i$-th training example, and $y_i$ is the corresponding target value.

Answer the following questions:

Explain the update rule for the weight vector w using stochastic gradient descent.

Describe the difference between stochastic gradient descent and traditional gradient descent.
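To make the two questions concrete, here is a minimal sketch in Python with NumPy. It assumes the loss is the usual mean squared error, $L(w) = \frac{1}{n}\sum_{i=1}^{n}(w^\top x_i - y_i)^2$, so the gradient contributed by a single example is $2(w^\top x_i - y_i)\,x_i$ and one SGD step reads $w \leftarrow w - \eta \cdot 2(w^\top x_i - y_i)\,x_i$. The function names `sgd_step`, `batch_gd_step`, and `mse`, the learning rates, and the synthetic noise-free data are all illustrative assumptions, not part of the original problem.

```python
import numpy as np


def sgd_step(w, x_i, y_i, lr):
    """One SGD update from a single training example (x_i, y_i)."""
    residual = w @ x_i - y_i          # prediction error on this example
    grad = 2.0 * residual * x_i       # gradient of (w^T x_i - y_i)^2 w.r.t. w
    return w - lr * grad


def batch_gd_step(w, X, y, lr):
    """One full-batch gradient descent update using every example."""
    residuals = X @ w - y
    grad = (2.0 / len(y)) * (X.T @ residuals)  # gradient of the mean loss
    return w - lr * grad


def mse(w, X, y):
    """Mean squared error of the linear model w on (X, y)."""
    return float(np.mean((X @ w - y) ** 2))


# Synthetic, noise-free data (assumed for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
w_true = np.array([1.5, -2.0, 0.5])
y = X @ w_true

# SGD: one cheap update per example, visiting examples in random order.
w_sgd = np.zeros(3)
for epoch in range(50):
    for i in rng.permutation(len(y)):
        w_sgd = sgd_step(w_sgd, X[i], y[i], lr=0.01)

# Batch gradient descent: one update per pass over the whole dataset.
w_gd = np.zeros(3)
for _ in range(500):
    w_gd = batch_gd_step(w_gd, X, y, lr=0.05)

print("SGD MSE:", mse(w_sgd, X, y))
print("Batch GD MSE:", mse(w_gd, X, y))
```

The contrast the second question asks about shows up in the two step functions: `sgd_step` touches a single row of `X` per update, so each step is cheap but noisy, while `batch_gd_step` computes the exact gradient over all $n$ rows before moving once.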

