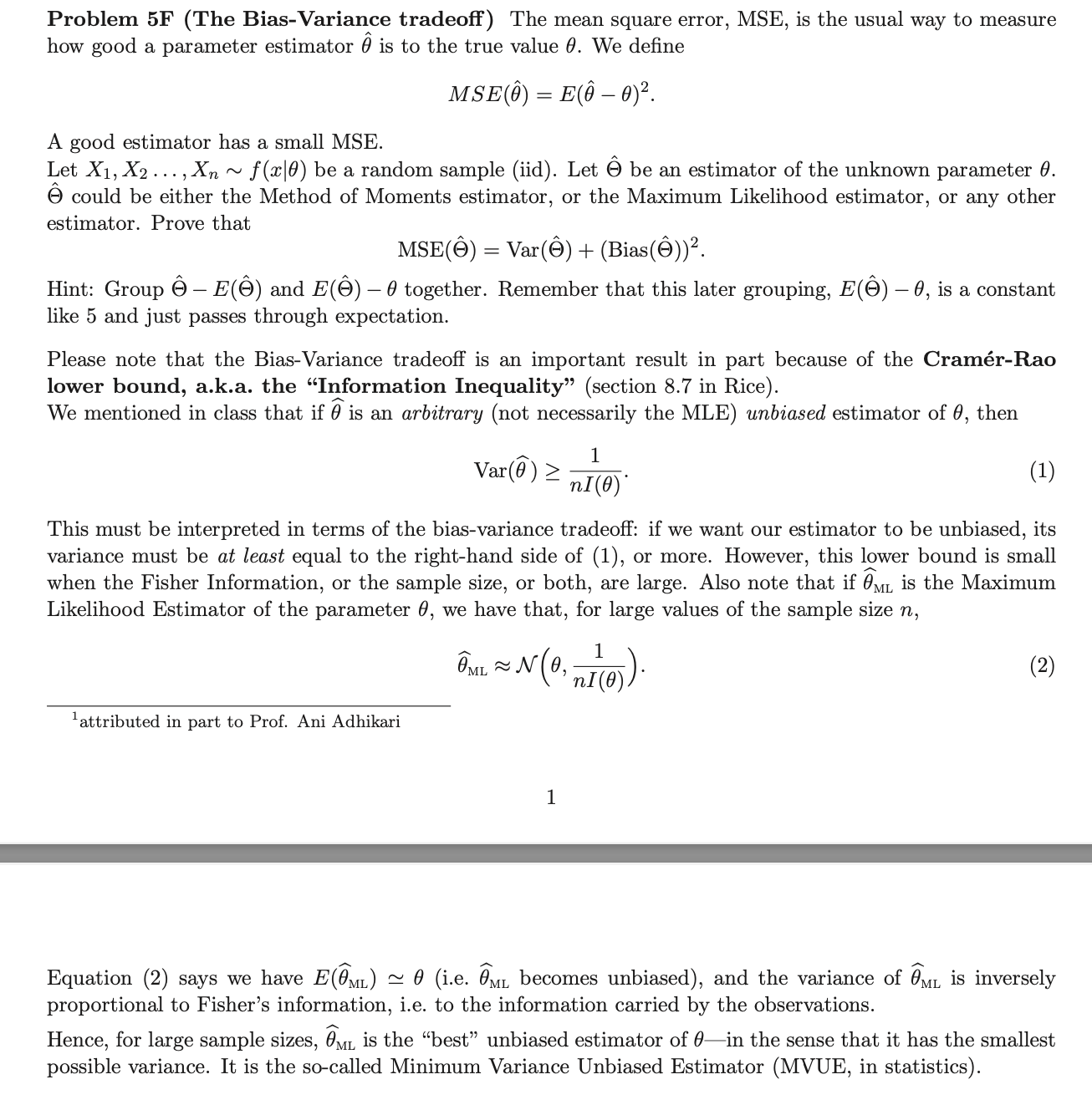

Problem 5F (The Bias-Variance Tradeoff). The mean square error, MSE, is the usual way to measure how close a parameter estimator $\hat\theta$ is to the true value $\theta_0$. We define

$$\operatorname{MSE}(\hat\theta) = E\big[(\hat\theta - \theta_0)^2\big].$$

A good estimator has a small MSE. Let $X_1, X_2, \ldots, X_n \sim f(x \mid \theta_0)$ be a random sample (iid), and let $\hat\theta$ be an estimator of the unknown parameter $\theta_0$. The estimator $\hat\theta$ could be the Method of Moments estimator, the Maximum Likelihood estimator, or any other estimator. Prove that

$$\operatorname{MSE}(\hat\theta) = \operatorname{Var}(\hat\theta) + \big(\operatorname{Bias}(\hat\theta)\big)^2.$$

Hint: Group $\hat\theta - E(\hat\theta)$ and $E(\hat\theta) - \theta_0$ together. Remember that the latter grouping, $E(\hat\theta) - \theta_0$, is a constant (like 5) and just passes through the expectation.

Please note that the bias-variance tradeoff is an important result in part because of the Cramér-Rao lower bound, a.k.a. the "Information Inequality" (section 8.7 in Rice). We mentioned in class that if $\hat\theta$ is an arbitrary (not necessarily the MLE) unbiased estimator of $\theta_0$, then

$$\operatorname{Var}(\hat\theta) \ge \frac{1}{nI(\theta_0)}. \tag{1}$$

This must be interpreted in terms of the bias-variance tradeoff: if we want our estimator to be unbiased, its variance must be at least the right-hand side of (1). However, this lower bound is small when the Fisher information $I(\theta_0)$, the sample size $n$, or both are large.

Also note that if $\hat\theta_{ML}$ is the Maximum Likelihood Estimator of the parameter $\theta_0$, then for large values of the sample size $n$,

$$\hat\theta_{ML} \approx N\!\left(\theta_0,\ \frac{1}{nI(\theta_0)}\right). \tag{2}$$

Equation (2) says that $E(\hat\theta_{ML}) \approx \theta_0$ (i.e., $\hat\theta_{ML}$ becomes unbiased), and that the variance of $\hat\theta_{ML}$ is inversely proportional to the Fisher information, i.e., to the information carried by the observations. Hence, for large sample sizes, $\hat\theta_{ML}$ is the best unbiased estimator of $\theta_0$ in the sense that its variance approaches the smallest possible value, the lower bound in (1). It is the so-called Minimum Variance Unbiased Estimator (MVUE, in statistics).

(Attributed in part to Prof. Ani Adhikari.)
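One way to carry out the hinted grouping is sketched below. It assumes $E(\hat\theta^2) < \infty$ and uses the standard definition $\operatorname{Bias}(\hat\theta) = E(\hat\theta) - \theta_0$, which the problem text uses but does not spell out. Write $c = E(\hat\theta) - \theta_0$, a constant:

$$
\begin{aligned}
\operatorname{MSE}(\hat\theta) &= E\big[(\hat\theta - \theta_0)^2\big]
 = E\Big[\big((\hat\theta - E\hat\theta) + c\big)^2\Big] \\
&= E\big[(\hat\theta - E\hat\theta)^2\big] + 2c\,E\big[\hat\theta - E\hat\theta\big] + c^2 \\
&= \operatorname{Var}(\hat\theta) + 2c \cdot 0 + c^2 \\
&= \operatorname{Var}(\hat\theta) + \big(\operatorname{Bias}(\hat\theta)\big)^2.
\end{aligned}
$$

The cross term vanishes because the constant $c$ passes through the expectation and $E\big[\hat\theta - E(\hat\theta)\big] = E(\hat\theta) - E(\hat\theta) = 0$.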
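As a numerical sanity check of the decomposition, here is a minimal Monte Carlo sketch. It assumes normal data (not part of the problem) and uses the sample variance with divisor $n$ as a deliberately biased estimator of $\theta_0 = \sigma^2$. The helper names `simulate_estimates`, `mse_decomposition`, and `var_divisor_n` are hypothetical, chosen only for illustration.

```python
import random


def simulate_estimates(estimator, sampler, n, reps, seed=0):
    """Draw `reps` iid samples of size n and apply `estimator` to each one."""
    rng = random.Random(seed)
    return [estimator([sampler(rng) for _ in range(n)]) for _ in range(reps)]


def mse_decomposition(estimates, theta0):
    """Return (MSE, variance, bias) of the simulated estimates around theta0.

    The variance uses divisor len(estimates), so the empirical identity
    MSE = Var + Bias^2 holds exactly, up to floating-point rounding.
    """
    m = len(estimates)
    mean_est = sum(estimates) / m
    mse = sum((t - theta0) ** 2 for t in estimates) / m
    var = sum((t - mean_est) ** 2 for t in estimates) / m
    bias = mean_est - theta0
    return mse, var, bias


def var_divisor_n(xs):
    """Sample variance with divisor n: a biased estimator of sigma^2."""
    n = len(xs)
    xbar = sum(xs) / n
    return sum((x - xbar) ** 2 for x in xs) / n


if __name__ == "__main__":
    # Hypothetical setup (not from the problem): N(0, 2^2) data, n = 10.
    mu, sigma, n = 0.0, 2.0, 10
    theta0 = sigma ** 2  # true parameter being estimated: the variance
    est = simulate_estimates(var_divisor_n, lambda rng: rng.gauss(mu, sigma),
                             n, reps=20000)
    mse, var, bias = mse_decomposition(est, theta0)
    print(f"MSE          = {mse:.4f}")
    print(f"Var + Bias^2 = {var + bias ** 2:.4f}  (Var={var:.4f}, Bias={bias:+.4f})")
    # Theory for normal data: Bias = -sigma^2/n, MSE = (2n - 1) sigma^4 / n^2
    print(f"theory: Bias = {-theta0 / n:+.4f}, MSE = {(2 * n - 1) * theta0 ** 2 / n ** 2:.4f}")
```

The two printed MSE lines agree up to rounding (the same algebra as the proof, applied to the simulated estimates), and both should be close to the theoretical values $\operatorname{Bias} = -\sigma^2/n = -0.4$ and $\operatorname{MSE} = (2n-1)\sigma^4/n^2 = 3.04$ for $\sigma = 2$, $n = 10$.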
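To illustrate (1) and (2), a second hypothetical sketch assumes an Exponential($\lambda$) model, which is not part of the problem. There the MLE of the rate is $\hat\lambda = 1/\bar X$ and $I(\lambda) = 1/\lambda^2$, so the right-hand side of (1) is $\lambda^2/n$. Since (1) is stated for unbiased estimators and $1/\bar X$ is biased for finite $n$, the comparison is only meaningful as $n$ grows: the simulation should show the bias shrinking and the variance-to-bound ratio approaching 1. The function names are illustrative, not from any library.

```python
import random
import statistics


def mle_exponential_rate(xs):
    """MLE of the rate lambda for iid Exponential(lambda) data: n / sum(x) = 1 / sample mean."""
    return len(xs) / sum(xs)


def simulate_mle(lam, n, reps, seed=1):
    """Sampling distribution of the MLE over `reps` simulated samples of size n."""
    rng = random.Random(seed)
    # random.expovariate takes the rate lambda (the mean is 1/lambda)
    return [mle_exponential_rate([rng.expovariate(lam) for _ in range(n)])
            for _ in range(reps)]


def crlb_exponential_rate(lam, n):
    """Lower bound 1/(n I(lambda)) from (1); for Exponential(lambda), I(lambda) = 1/lambda^2."""
    return lam ** 2 / n


if __name__ == "__main__":
    lam = 2.0  # hypothetical true rate
    for n in (5, 50, 500):
        est = simulate_mle(lam, n, reps=2000)
        bias = statistics.mean(est) - lam
        var = statistics.pvariance(est)
        bound = crlb_exponential_rate(lam, n)
        print(f"n={n:4d}  bias={bias:+.4f}  Var={var:.5f}  "
              f"1/(nI)={bound:.5f}  Var/bound={var / bound:.3f}")
```

For this model the exact values are $E(\hat\lambda) = n\lambda/(n-1)$ and $\operatorname{Var}(\hat\lambda)\,/\,\tfrac{\lambda^2}{n} = n^3/\big((n-1)^2(n-2)\big)$, roughly 2.6 at $n = 5$, 1.085 at $n = 50$, and 1.008 at $n = 500$, so the simulated ratios should land near those numbers.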
