Question: Programming Assignment (Haskell, GHCi): complete the scanner skeleton HW_05_skel_440.hs

Programming Assignment

Use Haskell (GHCi).

Code for HW_05_skel_440.hs (in Haskell):

-- The scanner matches regular expressions against some input and
-- returns a list of the captured tokens (and leftover input).
-- scan input produces Just (list of tokens, remaining input)
--
data Token = Const Int | Id String | Op String | Punct String | Space
    deriving (Show, Read, Eq)

-- scan input runs the scan algorithm on the input and returns the list of
-- resulting tokens (if any). It calls a helper routine with an empty list
-- of result tokens.
--
scan :: String -> [Token]
scan input = case scan' scan_rexps [] input of
    Just (tokens, _) -> tokens
    Nothing -> []

-- scan' rexps revtokens input applies the first regular expression of
-- the list rexps to the input. If the match succeeds, the captured
-- string is passed to a token-making routine associated with the reg
-- expr, and we add the new token to the head of our reversed list of
-- tokens found so far. Exception: We don't add a Space token.
--
scan' _ revtokens [] = Just (reverse revtokens, [])
-- *** STUB ***

-- scan_rexps are the regexprs to use to break up the input. The format of each
-- pair is (regexpr, fcn); if capture regexpr produces a string str, apply the function
-- to it to get a token. scan_rexps is an infinite cycle of the regular expressions
-- the scanner is looking for
--
scan_rexps = cycle
    [ -- *** STUB *** (num_const, ???), -- You need to write the tokenizer function.
      (identifier, Id), (operators, Op), (punctuation, Punct), (spaces, \_ -> Space) ]

num_const = RE_and [digit1_9, RE_star digit]
digit1_9 = RE_in_set "123456789"
digit = RE_in_set "0123456789"
operators = RE_in_set "+-*/"
punctuation = RE_in_set "[](){},;:.?!$%"
-- *** STUB *** need identifier, spaces

-- Regular expressions
data RegExpr a
    = RE_const a
    | RE_or [RegExpr a]
    | RE_and [RegExpr a]
    | RE_star (RegExpr a)
    | RE_in_set [a]    -- [...] - any symbol in list
    | RE_empty         -- epsilon - empty (no symbols)
    deriving (Eq, Read, Show)

-- Regular expressions have their own types for token, reversed token,
-- and input. (They're used to make type annotations more understandable.)
--
type RE_Token a = [a]
type RE_RevToken a = [a]
type RE_Input a = [a]

-- capture matches a regular expression against some input; on success,
-- it returns the matching token (= list of input symbols) and the
-- remaining input. E.g. capture abcd abcdef = Just(abcd, ef)
--
capture :: Eq a => RegExpr a -> RE_Input a -> Maybe (RE_Token a, RE_Input a)

-- top-level capture routine calls assistant with empty built-up token to start
--
capture rexp input = case capture' rexp ([], input) of
    Nothing -> Nothing
    Just (revtoken, input') -> Just (reverse revtoken, input')

-- capture' rexp (partial_token input) matches the expression against
-- the input given a reversed partial token; on success, it returns
-- the completed token and remaining input. The token is in reverse
-- order. E.g., capture' cd (ba, cdef) = (dcba, ef)
--
capture' :: Eq a => RegExpr a -> (RE_RevToken a, RE_Input a) -> Maybe (RE_RevToken a, RE_Input a)

-- *** STUB *** add code for RE_const, RE_or, RE_and, RE_in_set, RE_star, RE_empty
-- *** and for any other kinds of regular expressions you need.
--
capture' _ _ = Nothing -- *** STUB ***
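One possible way to fill in the capture' stub is sketched here. It is not the official Homework 4 solution. Its assumptions: RE_or takes the first alternative that matches, RE_star is greedy and stops as soon as its body fails or consumes no input, and the identifier, letter, space and spaces definitions are guesses, since the skeleton does not say what an identifier or a space looks like.

```haskell
data RegExpr a
    = RE_const a
    | RE_or [RegExpr a]
    | RE_and [RegExpr a]
    | RE_star (RegExpr a)
    | RE_in_set [a]
    | RE_empty
    deriving (Eq, Read, Show)

type RE_Token a = [a]
type RE_RevToken a = [a]
type RE_Input a = [a]

capture :: Eq a => RegExpr a -> RE_Input a -> Maybe (RE_Token a, RE_Input a)
capture rexp input = case capture' rexp ([], input) of
    Nothing -> Nothing
    Just (revtoken, input') -> Just (reverse revtoken, input')

capture' :: Eq a => RegExpr a -> (RE_RevToken a, RE_Input a)
         -> Maybe (RE_RevToken a, RE_Input a)
-- RE_const: the next input symbol must equal the constant.
capture' (RE_const c) (revtoken, x : xs)
    | x == c = Just (x : revtoken, xs)
capture' (RE_const _) _ = Nothing
-- RE_in_set: the next input symbol must be a member of the set.
capture' (RE_in_set set) (revtoken, x : xs)
    | x `elem` set = Just (x : revtoken, xs)
capture' (RE_in_set _) _ = Nothing
-- RE_empty: succeeds without consuming anything.
capture' RE_empty state = Just state
-- RE_or: take the first alternative that matches (assumption: no backtracking).
capture' (RE_or []) _ = Nothing
capture' (RE_or (r : rs)) state = case capture' r state of
    Nothing -> capture' (RE_or rs) state
    result  -> result
-- RE_and: match each sub-expression in sequence, threading the state through.
capture' (RE_and []) state = Just state
capture' (RE_and (r : rs)) state = case capture' r state of
    Nothing     -> Nothing
    Just state' -> capture' (RE_and rs) state'
-- RE_star: repeat greedily; stop when the body fails or makes no progress
-- (the progress check prevents looping forever on e.g. RE_star RE_empty).
capture' (RE_star r) state@(_, input) = case capture' r state of
    Just state'@(_, input')
        | length input' < length input -> capture' (RE_star r) state'
    _ -> Just state

-- Regular expressions from the skeleton.
digit1_9, digit, num_const :: RegExpr Char
digit1_9 = RE_in_set "123456789"
digit = RE_in_set "0123456789"
num_const = RE_and [digit1_9, RE_star digit]

-- Assumed definitions for the missing expressions (not given in the skeleton).
letter, identifier, space, spaces :: RegExpr Char
letter = RE_in_set (['a' .. 'z'] ++ ['A' .. 'Z'])
identifier = RE_and [letter, RE_star (RE_or [letter, digit])]
space = RE_in_set " \t\n"
spaces = RE_and [space, RE_star space]
```

Under these assumptions, capture identifier "xyz+17$" evaluates to Just ("xyz","+17$") and capture num_const "17$" to Just ("17","$"), matching the examples in the assignment text.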

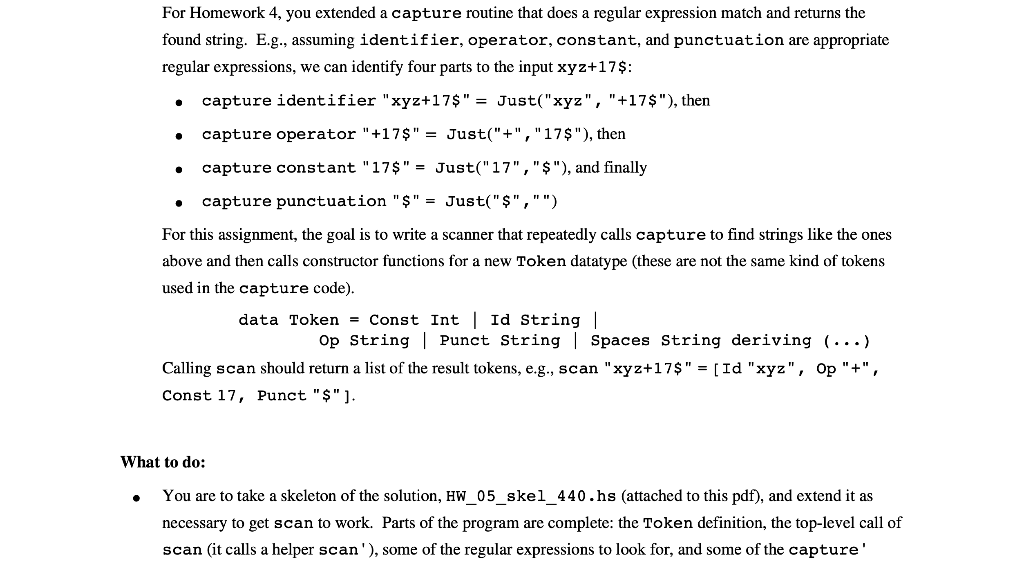

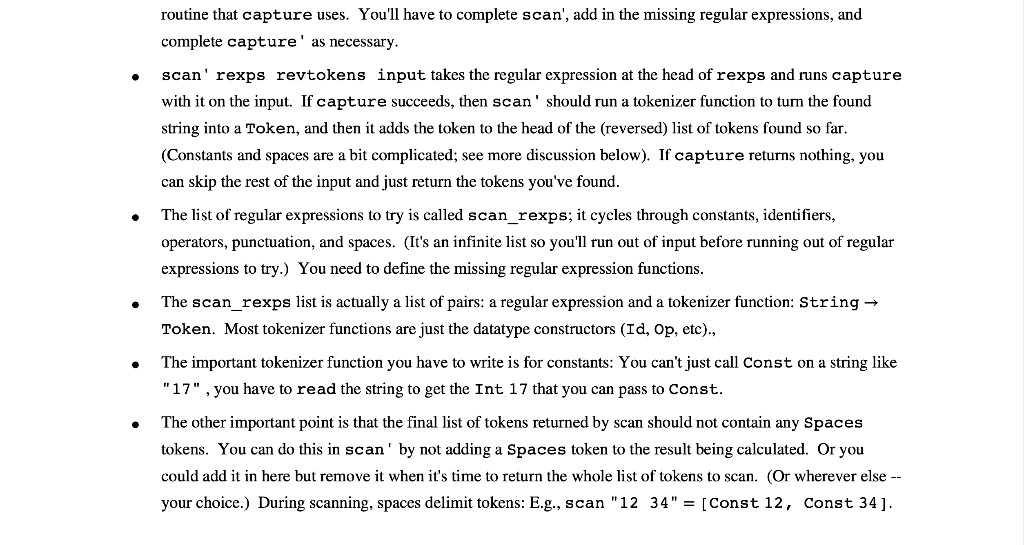

For Homework 4, you extended a capture routine that does a regular expression match and returns the found string. E.g., assuming identifier, operator, constant, and punctuation are appropriate regular expressions, we can identify four parts to the input xyz+17$:

capture identifier "xyz+17$" = Just("xyz", "+17$"), then

capture operator "+17$" = Just("+", "17$"), then

capture constant "17$" = Just("17", "$"), and finally

capture punctuation "$" = Just("$", "")

For this assignment, the goal is to write a scanner that repeatedly calls capture to find strings like the ones above and then calls constructor functions for a new Token datatype (these are not the same kind of tokens used in the capture code).

data Token = Const Int | Id String | Op String | Punct String | Spaces String deriving (...)

Calling scan should return a list of the result tokens, e.g., scan "xyz+17$" = [Id "xyz", Op "+", Const 17, Punct "$"].

What to do: You are to take a skeleton of the solution, Hw_05_skel_440.hs (attached to this pdf), and extend it as necessary to get scan to work. Parts of the program are complete: the Token definition, the top-level call of scan (it calls a helper scan'), some of the regular expressions to look for, and some of the capture' routine that capture uses. You'll have to complete scan', add in the missing regular expressions, and complete capture' as necessary.

scan' rexps revtokens input takes the regular expression at the head of rexps and runs capture with it on the input. If capture succeeds, then scan' should run a tokenizer function to turn the found string into a Token, and then it adds the token to the head of the (reversed) list of tokens found so far. (Constants and spaces are a bit complicated; see more discussion below.) If capture returns nothing, you can skip the rest of the input and just return the tokens you've found.

The list of regular expressions to try is called scan_rexps; it cycles through constants, identifiers, operators, punctuation, and spaces. (It's an infinite list, so you'll run out of input before running out of regular expressions to try.) You need to define the missing regular expression functions.

The scan_rexps list is actually a list of pairs: a regular expression and a tokenizer function of type String -> Token. Most tokenizer functions are just the datatype constructors (Id, Op, etc.). The important tokenizer function you have to write is for constants: you can't just call Const on a string like "17"; you have to read the string to get the Int 17 that you can pass to Const.

The other important point is that the final list of tokens returned by scan should not contain any Spaces tokens. You can do this in scan' by not adding a Spaces token to the result being calculated. Or you could add it in here but remove it when it's time to return the whole list of tokens to scan. (Or wherever else -- your choice.) During scanning, spaces delimit tokens: e.g., scan "12 34" = [Const 12, Const 34].
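The scanning loop described above could be sketched as follows. This is an illustration under stated assumptions, not the expected solution. To stay self-contained it replaces capture rexp with a hypothetical Matcher type built from the helpers runOf, oneOf and numConst, none of which are in the skeleton. It uses the skeleton's nullary Space constructor rather than Spaces String. It also gives scan' an extra Int argument, a deviation from the skeleton's signature, that counts how many patterns may still be tried at the current position, so that scanning stops instead of cycling forever when no pattern matches.

```haskell
import Data.Char (isAlpha, isDigit, isSpace)

data Token = Const Int | Id String | Op String | Punct String | Space
    deriving (Show, Read, Eq)

-- Hypothetical stand-in for `capture rexp`: matched prefix and remaining input.
type Matcher = String -> Maybe (String, String)

-- Hypothetical helper: match a non-empty run of characters satisfying p.
runOf :: (Char -> Bool) -> Matcher
runOf p input = case span p input of
    ([], _)     -> Nothing
    (str, rest) -> Just (str, rest)

-- Hypothetical helper: match exactly one character from the given set.
oneOf :: String -> Matcher
oneOf set (c : rest) | c `elem` set = Just ([c], rest)
oneOf _ _ = Nothing

-- Mirrors num_const: one digit 1-9 followed by any number of digits.
numConst :: Matcher
numConst (c : rest)
    | c `elem` "123456789" = let (ds, rest') = span isDigit rest
                             in Just (c : ds, rest')
numConst _ = Nothing

-- Tokenizer for constants: read the digit string as an Int, then wrap it.
mkConst :: String -> Token
mkConst str = Const (read str)

patterns :: [(Matcher, String -> Token)]
patterns =
    [ (numConst, mkConst)
    , (runOf isAlpha, Id)
    , (oneOf "+-*/", Op)
    , (oneOf "[](){},;:.?!$%", Punct)
    , (runOf isSpace, const Space)
    ]

scan :: String -> [Token]
scan input = case scan' (length patterns) (cycle patterns) [] input of
    Just (tokens, _) -> tokens
    Nothing -> []

-- The first argument is the number of patterns left to try at this position.
scan' :: Int -> [(Matcher, String -> Token)] -> [Token] -> String
      -> Maybe ([Token], String)
scan' _ _ revtokens [] = Just (reverse revtokens, [])
scan' 0 _ revtokens input = Just (reverse revtokens, input) -- nothing matched
scan' _ [] revtokens input = Just (reverse revtokens, input)
scan' n ((match, mkToken) : rest) revtokens input =
    case match input of
        Nothing -> scan' (n - 1) rest revtokens input
        Just (str, input') ->
            let token = mkToken str
                -- Space tokens delimit other tokens but are not kept.
                revtokens' = if token == Space then revtokens
                                               else token : revtokens
            in scan' (length patterns) rest revtokens' input'
```

With these definitions, scan "xyz+17$" yields [Id "xyz",Op "+",Const 17,Punct "$"] and scan "12 34" yields [Const 12,Const 34]. Here read is safe only because numConst accepts nothing but digit strings; Text.Read.readMaybe is an alternative when the input to the tokenizer is less tightly controlled.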
