Question 1) Perceptron Trees: To exploit the desirable properties of decision tree classifiers and perceptrons, Adam came up with a new algorithm called "perceptron trees", which combines features from both. Perceptron trees are similar to decision trees; however, each leaf node is a perceptron instead of a majority vote.

To create a perceptron tree, the first step is to follow a regular decision tree learning algorithm, such as ID3, and split on attributes until the specified maximum depth is reached. Once the maximum depth has been reached, a perceptron is trained at each leaf node on the remaining attributes, i.e., those that have not already been used for splitting on that branch. Classification of a new example follows a similar procedure: the example is first passed down the decision tree according to its attribute values, and when it reaches a leaf node, the final prediction is made by running the perceptron stored at that node.
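The construction and classification procedure above can be sketched in Python. This is a minimal, hypothetical sketch: the names `Leaf`, `Split`, `classify`, and `train_leaf`, the dict encoding of examples, and the use of +1/-1 for the two labels are assumptions, not part of the question. The ID3 split-selection step is not shown; the tree's splits are taken as given, and `train_leaf` applies the classic perceptron update rule to the examples that reach a leaf, using only the attributes not already tested on that branch.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

# Hypothetical encoding: an example is a dict mapping attribute name -> 0 or 1,
# and the two output labels are represented as +1 and -1 (an assumption).


@dataclass
class Leaf:
    """Leaf node holding a perceptron over the attributes unused on its branch."""
    weights: Dict[str, float]
    bias: float = 0.0

    def predict(self, x: Dict[str, int]) -> int:
        # Perceptron decision: sign of w . x + b; a zero activation maps to +1
        # here (an assumed tie-breaking convention).
        activation = self.bias + sum(w * x[a] for a, w in self.weights.items())
        return 1 if activation >= 0 else -1


@dataclass
class Split:
    """Internal node that tests one binary attribute, as in a decision tree."""
    attribute: str
    children: Dict[int, Union["Split", Leaf]]


Node = Union[Split, Leaf]


def classify(node: Node, x: Dict[str, int]) -> int:
    # Route the example down the tree using its attribute values...
    while isinstance(node, Split):
        node = node.children[x[node.attribute]]
    # ...then let the leaf's perceptron make the final prediction.
    return node.predict(x)


def train_leaf(examples: List[Dict[str, int]], labels: List[int],
               attributes: List[str], epochs: int = 10, lr: float = 1.0) -> Leaf:
    """Classic perceptron rule, restricted to the attributes left at this leaf."""
    leaf = Leaf(weights={a: 0.0 for a in attributes}, bias=0.0)
    for _ in range(epochs):
        for x, y in zip(examples, labels):
            if leaf.predict(x) != y:  # update only on mistakes
                for a in attributes:
                    leaf.weights[a] += lr * y * x[a]
                leaf.bias += lr * y
    return leaf


# Hypothetical depth-1 tree: split on A; each leaf has a perceptron over B and C.
tree = Split("A", {
    0: Leaf({"B": 1.0, "C": -1.0}),
    1: Leaf({"B": -1.0, "C": 1.0}),
})
print(classify(tree, {"A": 0, "B": 1, "C": 0}))  # 1
print(classify(tree, {"A": 1, "B": 1, "C": 0}))  # -1
```

Because each leaf's perceptron only sees the attributes left unused on its branch, two examples that differ only in a tested attribute (here A) can be scored by entirely different weight vectors.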

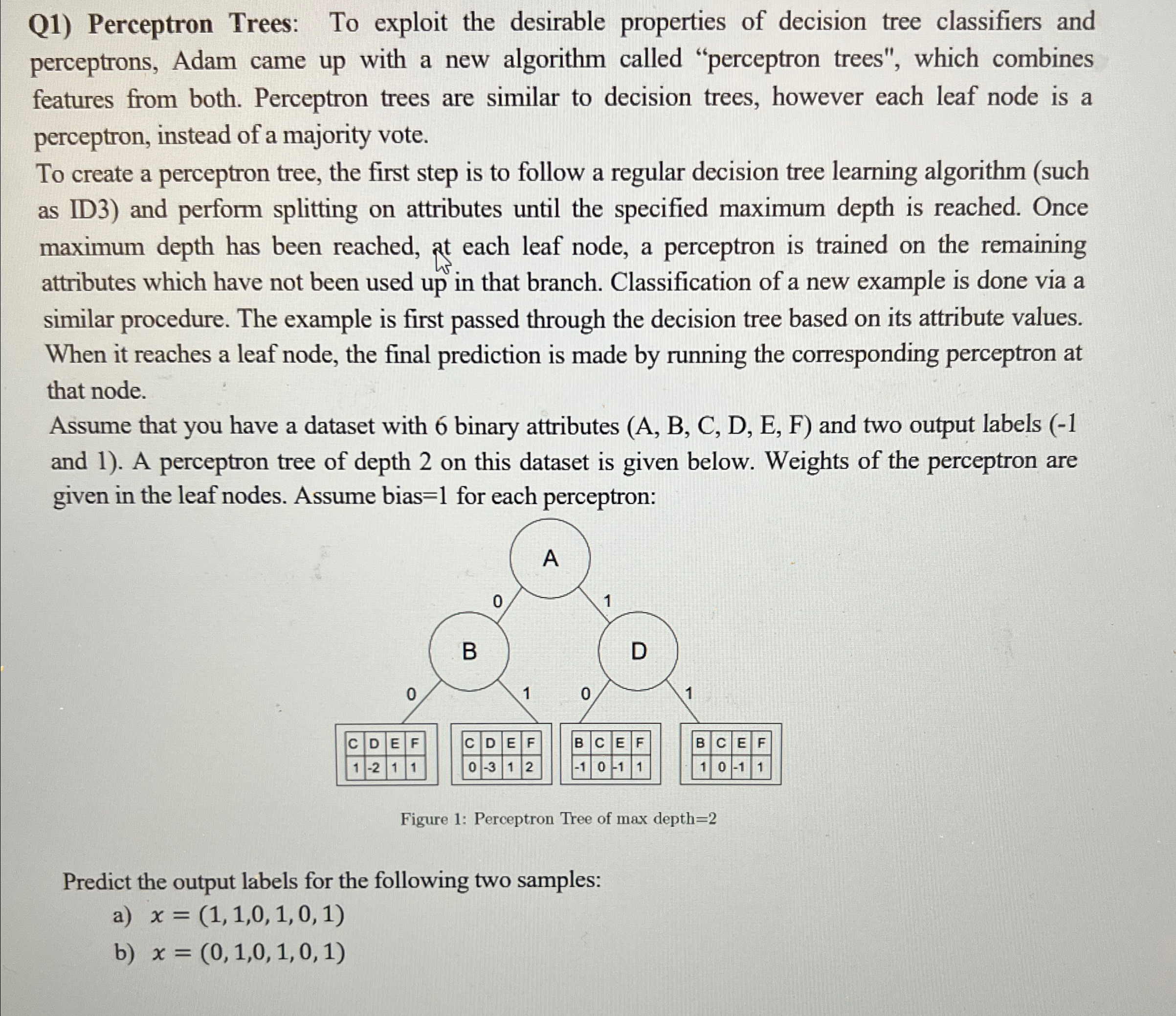

Assume that you have a dataset with binary attributes A, B, C, D, E, F and two output labels and. A perceptron tree of depth on this dataset is given below. The weights of each perceptron are given in the leaf nodes. Assume bias for each perceptron:

Figure : Perceptron Tree of max depth
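At a leaf, the perceptron's output can be written as a thresholded weighted sum. As a sketch, assume the two labels are encoded as +1 and -1 and that an activation of exactly zero maps to +1 (both are assumed conventions, since the exact rule is not stated here). Let $R$ be the set of attributes not tested on the path to that leaf, $w_j$ the leaf's weights, $x_j \in \{0, 1\}$ the example's attribute values, and $b$ the bias given in the question:

$$
\hat{y}(\mathbf{x}) =
\begin{cases}
+1 & \text{if } \displaystyle\sum_{j \in R} w_j x_j + b \ge 0,\\[4pt]
-1 & \text{otherwise.}
\end{cases}
$$

Since every $x_j$ is binary, the sum reduces to adding the weights of the remaining attributes that equal 1, then adding $b$.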

Predict the output labels for the following two samples:

a

b
