
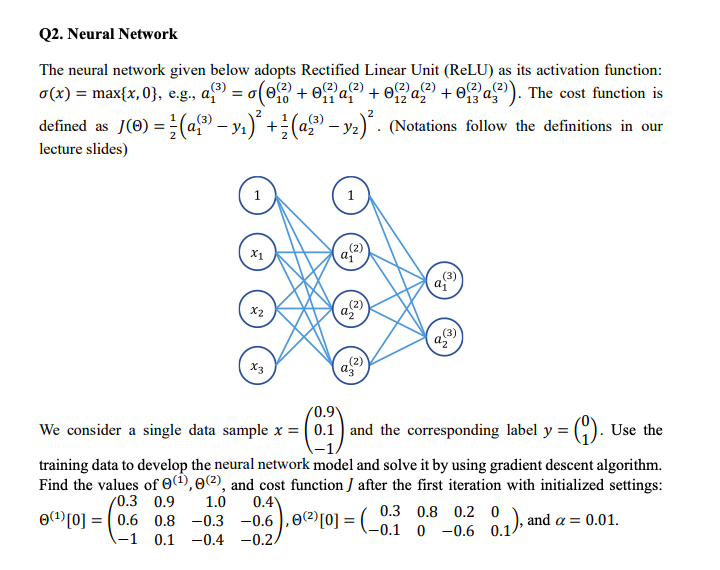

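Q2. Neural Network

The neural network given below adopts the Rectified Linear Unit (ReLU) as its activation function, $\sigma(x) = \max(x, 0)$; e.g., $a_1^{(3)} = \sigma\left(\Theta_{10}^{(2)} + \Theta_{11}^{(2)} a_1^{(2)} + \Theta_{12}^{(2)} a_2^{(2)} + \Theta_{13}^{(2)} a_3^{(2)}\right)$. The cost function is defined as $J(\Theta) = \frac{1}{2}\left[\left(a_1^{(3)} - y_1\right)^2 + \left(a_2^{(3)} - y_2\right)^2\right]$. (Notation follows the definitions in our lecture slides.)

Figure: inputs $x_1, x_2, x_3$; hidden units $a_1^{(2)}, a_2^{(2)}, a_3^{(2)}$; outputs $a_1^{(3)}, a_2^{(3)}$.

We consider a single data sample $x$ with $x_1 = 0.9$ and $x_2 = 0.1$, and the corresponding label $y$ with $y_1 = 1$. Use the training data to develop the neural network model and solve it using the gradient descent algorithm. Find the values of $\Theta^{(1)}$, $\Theta^{(2)}$, and the cost function $J(\Theta)$ after the first iteration, with the initial settings

$$
\Theta^{(1)} = \begin{bmatrix} 0.3 & 0.9 & 1.0 & 0.4 \\ 0.6 & 0.8 & -0.3 & -0.6 \\ -1 & 0.1 & -0.4 & -0.2 \end{bmatrix}, \qquad
\Theta^{(2)} = \begin{bmatrix} 0.3 & 0.8 & 0.2 & 0 \\ 1 & 0 & -0.6 & 0.1 \end{bmatrix},
$$

and $\alpha = 0.01$.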
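The iteration the question asks for (forward pass, cost $J(\Theta)$, backpropagation, then one update $\Theta^{(l)} := \Theta^{(l)} - \alpha\,\partial J/\partial \Theta^{(l)}$) can be sketched in plain Python. This is a minimal sketch, not the lecture's reference solution, and it assumes: column 0 of each $\Theta^{(l)}$ holds the bias weight (matching the subscript $\Theta_{10}^{(2)}$ in the example formula), $\sigma'(0)$ is taken as 0, and $J$ is the one-half squared error. It applies the standard backpropagation rules $\delta^{(3)} = (a^{(3)} - y) \odot \sigma'(z^{(3)})$, $\delta^{(2)} = \big((\Theta^{(2)}_{:,1:})^{\top}\delta^{(3)}\big) \odot \sigma'(z^{(2)})$ and $\partial J/\partial \Theta^{(l)} = \delta^{(l+1)}\,(a^{(l)})^{\top}$, where $a^{(l)}$ includes the bias unit. All function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def relu(z: float) -> float:
    """ReLU activation sigma(z) = max(z, 0)."""
    return max(z, 0.0)


def relu_grad(z: float) -> float:
    """Derivative of ReLU; assumed to be 0 at the kink z == 0."""
    return 1.0 if z > 0 else 0.0


def mat_vec(theta: Matrix, v: Vector) -> Vector:
    """Weight matrix times vector (rows are units, column 0 is the bias weight)."""
    return [sum(w * a for w, a in zip(row, v)) for row in theta]


def forward(theta1: Matrix, theta2: Matrix, x: Vector):
    """Forward pass: returns activations (with bias units) and pre-activations."""
    a1 = [1.0] + list(x)                 # input layer plus bias unit x_0 = 1
    z2 = mat_vec(theta1, a1)
    a2 = [1.0] + [relu(z) for z in z2]   # hidden layer plus bias unit
    z3 = mat_vec(theta2, a2)
    a3 = [relu(z) for z in z3]           # network outputs a^(3)
    return a1, z2, a2, z3, a3


def cost(theta1: Matrix, theta2: Matrix, x: Vector, y: Vector) -> float:
    """J(Theta) = 1/2 * sum_k (a_k^(3) - y_k)^2 for a single sample."""
    *_, a3 = forward(theta1, theta2, x)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(a3, y))


def gradients(theta1: Matrix, theta2: Matrix, x: Vector, y: Vector) -> Tuple[Matrix, Matrix]:
    """Backpropagation for the 1/2 squared-error cost with ReLU units."""
    a1, z2, a2, z3, a3 = forward(theta1, theta2, x)
    # Output error: delta^(3) = (a^(3) - y) * sigma'(z^(3))
    delta3 = [(a - t) * relu_grad(z) for a, t, z in zip(a3, y, z3)]
    # Hidden error: skip the bias column (index 0) of Theta^(2)
    delta2 = [
        sum(theta2[k][i + 1] * delta3[k] for k in range(len(delta3))) * relu_grad(z2[i])
        for i in range(len(z2))
    ]
    # dJ/dTheta^(l) = delta^(l+1) times a^(l) transposed (outer product)
    grad2 = [[d * a for a in a2] for d in delta3]
    grad1 = [[d * a for a in a1] for d in delta2]
    return grad1, grad2


def gradient_descent_step(theta1: Matrix, theta2: Matrix, x: Vector, y: Vector,
                          alpha: float) -> Tuple[Matrix, Matrix]:
    """One update Theta := Theta - alpha * dJ/dTheta; inputs are not modified."""
    grad1, grad2 = gradients(theta1, theta2, x, y)
    new1 = [[w - alpha * g for w, g in zip(rw, rg)] for rw, rg in zip(theta1, grad1)]
    new2 = [[w - alpha * g for w, g in zip(rw, rg)] for rw, rg in zip(theta2, grad2)]
    return new1, new2
```

To carry out the requested iteration, pass the initial $\Theta^{(1)}$ and $\Theta^{(2)}$, the full sample $x$ and label $y$, and `alpha=0.01` to `gradient_descent_step`, then evaluate `cost` on the returned matrices. Courses differ on whether "the cost after the first iteration" means $J$ evaluated before or after the update, so computing both may be useful.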
