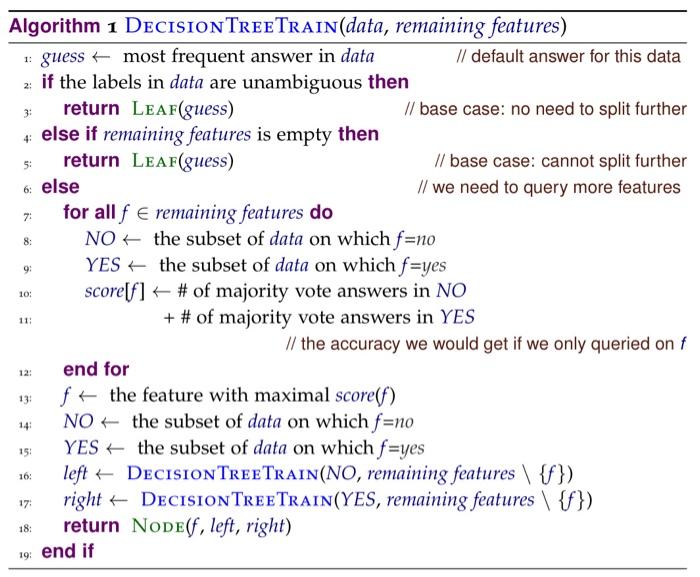

Question: Q4. a. [3 points] Change Algorithm 1 (in chapter 1 page 13) in the textbook to take a maximum depth parameter d. b. [1 point]

![Q4. a. [3 points] Change Algorithm 1 (in chapter 1 page](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f32d276e842_83966f32d2716831.jpg)

![[1 point] Do we also need to change Algorithm 2 (page 3)?](https://dsd5zvtm8ll6.cloudfront.net/si.experts.images/questions/2024/09/66f32d28ce4d3_84066f32d2870ea1.jpg)

Q4. a. [3 points] Change Algorithm 1 (in chapter 1 page 13) in the textbook to take a maximum depth parameter d. b. [1 point] Do we also need to change Algorithm 2 (page 3)? Why? 2: 3: 5: 6: else 7: 8: 9: Algorithm 1 DECISION TREETRAIN(data, remaining features) 1 guess + most frequent answer in data // default answer for this data 2: if the labels in data are unambiguous then return LEAF(guess) // base case: no need to split further else if remaining features is empty then return LEAF(guess) // base case: cannot split further // we need to query more features for all f e remaining features do NO the subset of data on which f=no YES the subset of data on which f=yes score[f] + # of majority vote answers in NO + # of majority vote answers in YES // the accuracy we would get if we only queried on f end for f+ the feature with maximal score(f) NO the subset of data on which f=no YES + the subset of data on which f=yes left + DECISION TREE TRAIN(NO, remaining features {f}) right + Decision Tree TRAIN(YES, remaining features \ {f}) return Node(f, left, right) 10: 11: 121 13: 14 15 16: 17: 18: 19: end if 2: 3: Algorithm 2 DECISION TreeTest(tree, test point) 1: if tree is of the form LEAF(guess) then return guess else if tree is of the form Node(f, left, right) then if f = no in test point then return DECISIONTREETest(left, test point) else return DecisionTreeTest(right, test point) end if 4: 5: 6: 7: 8: 9: end if Q4. a. [3 points] Change Algorithm 1 (in chapter 1 page 13) in the textbook to take a maximum depth parameter d. b. [1 point] Do we also need to change Algorithm 2 (page 3)? Why? 2: 3: 5: 6: else 7: 8: 9: Algorithm 1 DECISION TREETRAIN(data, remaining features) 1 guess + most frequent answer in data // default answer for this data 2: if the labels in data are unambiguous then return LEAF(guess) // base case: no need to split further else if remaining features is empty then return LEAF(guess) // base case: cannot split further // we need to query more features for all f e remaining features do NO the subset of data on which f=no YES the subset of data on which f=yes score[f] + # of majority vote answers in NO + # of majority vote answers in YES // the accuracy we would get if we only queried on f end for f+ the feature with maximal score(f) NO the subset of data on which f=no YES + the subset of data on which f=yes left + DECISION TREE TRAIN(NO, remaining features {f}) right + Decision Tree TRAIN(YES, remaining features \ {f}) return Node(f, left, right) 10: 11: 121 13: 14 15 16: 17: 18: 19: end if 2: 3: Algorithm 2 DECISION TreeTest(tree, test point) 1: if tree is of the form LEAF(guess) then return guess else if tree is of the form Node(f, left, right) then if f = no in test point then return DECISIONTREETest(left, test point) else return DecisionTreeTest(right, test point) end if 4: 5: 6: 7: 8: 9: end if

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts