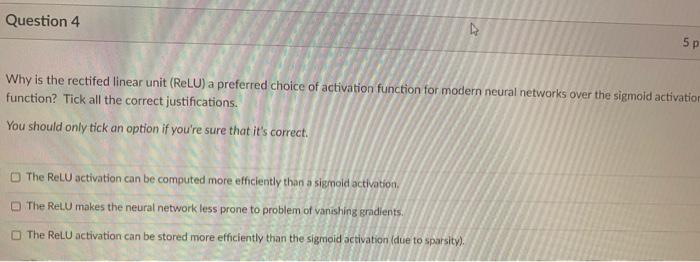

Question 4 (5p): Why is the rectified linear unit (ReLU) a preferred choice of activation function for modern neural networks over the sigmoid activation function?

Tick all the correct justifications. You should only tick an option if you're sure that it's correct.

- The ReLU activation can be computed more efficiently than a sigmoid activation.
- The ReLU makes the neural network less prone to the problem of vanishing gradients.
- The ReLU activation can be stored more efficiently than the sigmoid activation due to sparsity.
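To make the three options concrete, here is a minimal pure-Python sketch rather than a worked answer. It compares the two activations on a toy setup that is an assumption of this example: all weights are fixed at 1, and the helper `chained_grad` and the sample `inputs` are hypothetical. It relies on two standard facts: the sigmoid derivative never exceeds 0.25, and the ReLU derivative is exactly 1 for positive inputs.

```python
import math


def sigmoid(x: float) -> float:
    # Needs an exponential and a division.
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    # Derivative s * (1 - s); its maximum is 0.25, reached at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)


def relu(x: float) -> float:
    # Needs only a comparison with zero.
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative is 1 for positive inputs and 0 otherwise.
    return 1.0 if x > 0 else 0.0


def chained_grad(grad_fn, x: float, depth: int) -> float:
    # Hypothetical toy model: the same local derivative multiplied
    # through `depth` layers, with every weight assumed to be 1.
    return grad_fn(x) ** depth


depth = 10
sig_chain = chained_grad(sigmoid_grad, 0.0, depth)  # best case for the sigmoid
relu_chain = chained_grad(relu_grad, 1.0, depth)    # active ReLU units

# Fraction of exact zeros in the outputs for a few sample inputs (assumed values).
inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
relu_zero_fraction = sum(1 for v in inputs if relu(v) == 0.0) / len(inputs)
sigmoid_zero_fraction = sum(1 for v in inputs if sigmoid(v) == 0.0) / len(inputs)

print(sig_chain, relu_chain, relu_zero_fraction, sigmoid_zero_fraction)
```

Even in the sigmoid's best case the chained derivative shrinks geometrically with depth, while it stays at 1 along active ReLU units. ReLU outputs also contain exact zeros, whereas sigmoid outputs for these inputs are all strictly positive. Whether those zeros translate into cheaper storage depends on whether a sparse representation is actually used, which this sketch does not model.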
