Question: Question 4. Consider k-nearest-neighbor (k-NN) or Kernel Regression with a Gaussian Kernel. Show how to control the bias-variance trade-off for either of the methods.

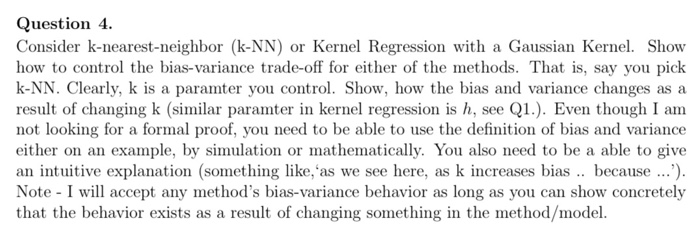

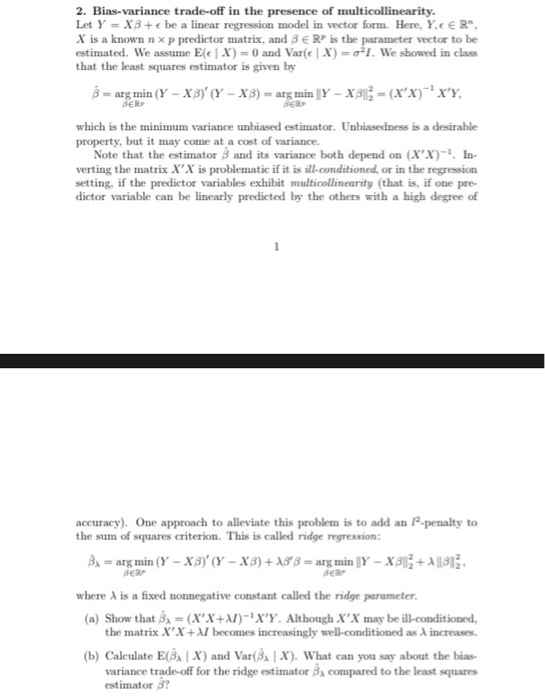

Question 4. Consider k-nearest-neighbor (k-NN) or kernel regression with a Gaussian kernel. Show how to control the bias-variance trade-off for either of the methods. That is, say you pick k-NN. Clearly, k is a parameter you control. Show how the bias and variance change as a result of changing k (the analogous parameter in kernel regression is h, see Q1). Even though I am not looking for a formal proof, you need to be able to use the definition of bias and variance, either on an example, by simulation, or mathematically. You also need to be able to give an intuitive explanation (something like, "as we see here, as k increases, bias ... because ..."). Note that I will accept any method's bias-variance behavior as long as you can show concretely that the behavior exists as a result of changing something in the method/model.

2. Bias-variance trade-off in the presence of multicollinearity. Let $Y = X\beta + \varepsilon$ be a linear regression model in vector form. Here, $Y, \varepsilon \in \mathbb{R}^n$, $X$ is a known $n \times p$ predictor matrix, and $\beta \in \mathbb{R}^p$ is the parameter vector to be estimated. We assume $E(\varepsilon \mid X) = 0$ and $\operatorname{Var}(\varepsilon \mid X) = I$. We showed in class that the least squares estimator is given by

$$\hat\beta = \arg\min_{\beta \in \mathbb{R}^p} (Y - X\beta)'(Y - X\beta) = \arg\min_{\beta \in \mathbb{R}^p} \|Y - X\beta\|_2^2 = (X'X)^{-1}X'Y,$$

which is the minimum variance unbiased estimator. Unbiasedness is a desirable property, but it may come at a cost of variance. Note that the estimator $\hat\beta$ and its variance both depend on $(X'X)^{-1}$. Inverting the matrix $X'X$ is problematic if it is ill-conditioned, or in the regression setting, if the predictor variables exhibit multicollinearity (that is, if one predictor variable can be linearly predicted by the others with a high degree of accuracy). One approach to alleviate this problem is to add an $\ell_2$-penalty to the sum of squares criterion. This is called ridge regression:

$$\hat\beta_\lambda = \arg\min_{\beta \in \mathbb{R}^p} (Y - X\beta)'(Y - X\beta) + \lambda\beta'\beta = \arg\min_{\beta \in \mathbb{R}^p} \|Y - X\beta\|_2^2 + \lambda\|\beta\|_2^2,$$

where $\lambda$ is a fixed nonnegative constant called the ridge parameter.

(a) Show that $\hat\beta_\lambda = (X'X + \lambda I)^{-1}X'Y$. Although $X'X$ may be ill-conditioned, the matrix $X'X + \lambda I$ becomes increasingly well-conditioned as $\lambda$ increases.

(b) Calculate $E(\hat\beta_\lambda \mid X)$ and $\operatorname{Var}(\hat\beta_\lambda \mid X)$. What can you say about the bias-variance trade-off for the ridge estimator $\hat\beta_\lambda$ compared to the least squares estimator $\hat\beta$?
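For part (b), one way to make the comparison concrete is to evaluate the conditional bias and variance of the ridge estimator numerically on a nearly collinear design. The sketch below is illustrative only, not a substitute for the derivation. It assumes $E(\varepsilon \mid X) = 0$ and $\operatorname{Var}(\varepsilon \mid X) = I$ as in the problem statement, and uses the standard closed forms $E(\hat\beta_\lambda \mid X) = (X'X + \lambda I)^{-1}X'X\beta$ and $\operatorname{Var}(\hat\beta_\lambda \mid X) = (X'X + \lambda I)^{-1}X'X(X'X + \lambda I)^{-1}$. The design matrix, the value of `beta`, the grid of `lam` values, and the function names are all hypothetical choices made for this example.

```python
import numpy as np

def ridge_estimate(X, Y, lam):
    """Closed-form ridge estimate (X'X + lam*I)^{-1} X'Y; lam = 0 gives least squares."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ Y)

def ridge_bias_variance(X, beta, lam):
    """Conditional bias vector and covariance matrix of the ridge estimator,
    assuming E(eps | X) = 0 and Var(eps | X) = I."""
    p = X.shape[1]
    G = X.T @ X
    W = G + lam * np.eye(p)
    A = np.linalg.solve(W, G)       # (X'X + lam*I)^{-1} X'X
    bias = A @ beta - beta          # E(beta_hat_lam | X) - beta
    cov = A @ np.linalg.inv(W)      # (X'X + lam*I)^{-1} X'X (X'X + lam*I)^{-1}
    return bias, cov

# Hypothetical design: the second predictor is almost a copy of the first.
rng = np.random.default_rng(0)
n = 50
x1 = rng.normal(size=n)
x2 = x1 + 0.01 * rng.normal(size=n)
X = np.column_stack([x1, x2])
beta = np.array([1.0, 1.0])         # hypothetical true parameter

results = {}
for lam in [0.0, 0.1, 1.0, 10.0]:
    bias, cov = ridge_bias_variance(X, beta, lam)
    results[lam] = (float(bias @ bias), float(np.trace(cov)))
    print(f"lam={lam:5.1f}  squared bias={results[lam][0]:.6f}  "
          f"total variance={results[lam][1]:.6f}")
```

Under these assumptions, the printed table shows the squared bias growing and the total variance (the trace of the covariance matrix) shrinking as `lam` increases, with `lam = 0` recovering the unbiased least squares estimator.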

Step by Step Solution


Here is an explanation of controlling the bias-variance trade-off in k-NN and kernel regression as well ...
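The effect of k in k-NN regression can be checked by simulation, using the definitions of bias and variance directly. The following is a minimal sketch under stated assumptions, not the original answer: the true regression function `f`, the evaluation point `x0`, the noise level `sigma`, the sample size `n`, and the function names are all hypothetical choices for illustration. For each k, it draws many training sets, records the k-NN prediction at `x0`, and estimates the squared bias and the variance of that prediction across training sets.

```python
import numpy as np

def knn_predict(x_train, y_train, x0, k):
    """Average the responses of the k training points closest to x0 (1-D inputs)."""
    nearest = np.argsort(np.abs(x_train - x0))[:k]
    return y_train[nearest].mean()

def knn_bias_variance(k, f, x0=0.25, n=100, sigma=0.3, n_sims=500, seed=0):
    """Monte Carlo estimate of the squared bias and variance of the k-NN fit at x0."""
    rng = np.random.default_rng(seed)
    preds = np.empty(n_sims)
    for s in range(n_sims):
        # Fresh training set for every replication.
        x = rng.uniform(0.0, 1.0, size=n)
        y = f(x) + sigma * rng.normal(size=n)
        preds[s] = knn_predict(x, y, x0, k)
    bias_sq = (preds.mean() - f(x0)) ** 2   # (E[f_hat(x0)] - f(x0))^2
    variance = preds.var()                  # Var[f_hat(x0)]
    return bias_sq, variance

def f(x):
    """Hypothetical true regression function, chosen only for this illustration."""
    return np.sin(2 * np.pi * x)

summary = {}
for k in [1, 5, 20, 50, 100]:
    summary[k] = knn_bias_variance(k, f)
    print(f"k={k:3d}  squared bias={summary[k][0]:.4f}  variance={summary[k][1]:.4f}")
```

In this setup, a small k averages only a few noisy responses near `x0`, so the prediction tracks `f(x0)` closely but varies a lot between training sets. A large k averages over points far from `x0`, which stabilizes the prediction but pulls it toward the overall mean of the responses. The bandwidth h in Gaussian kernel regression plays the analogous role.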
