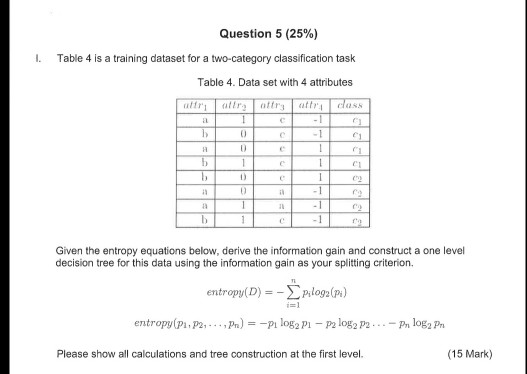

Question 5 (25%)

I. Table 4 is a training dataset for a two-category classification task.

Table 4. Data set with 4 attributes

Given the entropy equations below, derive the information gain and construct a one-level decision tree for this data, using information gain as your splitting criterion.

$$\text{entropy}(D) = -\sum_{i} p_i \log_2 p_i$$

$$\text{entropy}(p_1, p_2, \ldots, p_n) = -p_1 \log_2 p_1 - p_2 \log_2 p_2 - \cdots - p_n \log_2 p_n$$

Please show all calculations and the tree construction at the first level. (15 marks)
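The calculation the question asks for can be sketched in a few lines of code. Assuming the standard ID3 definition, the information gain of splitting $D$ on attribute $A$ is $\text{Gain}(D, A) = \text{entropy}(D) - \sum_{v} \frac{|D_v|}{|D|}\,\text{entropy}(D_v)$, where $D_v$ is the subset of rows with $A = v$. A one-level tree (a decision stump) splits on the attribute with the highest gain and labels each branch with its majority class. The values of Table 4 are not recoverable here, so the rows below (`EXAMPLE_ROWS`, attributes `A1`/`A2`, classes `C1`/`C2`) are a hypothetical toy dataset, and the helper names `entropy`, `information_gain`, and `one_level_tree` are illustrative, not part of the original question.

```python
import math
from collections import Counter


def entropy(labels):
    """entropy(p1, ..., pn) = -sum(p_i * log2(p_i)) over the class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    # Each count is >= 1, so log2 is always defined.
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def partition(rows, labels, attr):
    """Group the class labels by the value each row takes on `attr`."""
    groups = {}
    for row, y in zip(rows, labels):
        groups.setdefault(row[attr], []).append(y)
    return groups


def information_gain(rows, labels, attr):
    """Gain(D, A) = entropy(D) - sum_v |D_v|/|D| * entropy(D_v)."""
    n = len(labels)
    remainder = sum(
        len(part) / n * entropy(part)
        for part in partition(rows, labels, attr).values()
    )
    return entropy(labels) - remainder


def one_level_tree(rows, labels):
    """Split on the highest-gain attribute; each branch predicts its majority class."""
    gains = {a: information_gain(rows, labels, a) for a in rows[0]}
    best = max(gains, key=gains.get)
    leaves = {
        value: Counter(ys).most_common(1)[0][0]
        for value, ys in partition(rows, labels, best).items()
    }
    return best, gains, leaves


# Hypothetical toy data (NOT Table 4): A1 separates the classes, A2 does not.
EXAMPLE_ROWS = [
    {"A1": "x", "A2": "p"},
    {"A1": "x", "A2": "q"},
    {"A1": "y", "A2": "p"},
    {"A1": "y", "A2": "q"},
]
EXAMPLE_LABELS = ["C1", "C1", "C2", "C2"]

if __name__ == "__main__":
    best, gains, leaves = one_level_tree(EXAMPLE_ROWS, EXAMPLE_LABELS)
    print("entropy(D) =", entropy(EXAMPLE_LABELS))
    for attr, g in gains.items():
        print(f"Gain(D, {attr}) = {g:.3f}")
    print("split on", best, "->", leaves)
```

To apply this to Table 4, encode each row as a dictionary of its four attribute values and pass the class column as `labels`; the per-attribute gains printed are the quantities the question asks you to show by hand.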
