Question 5 - Kernel PCA (Bonus, 10 points)

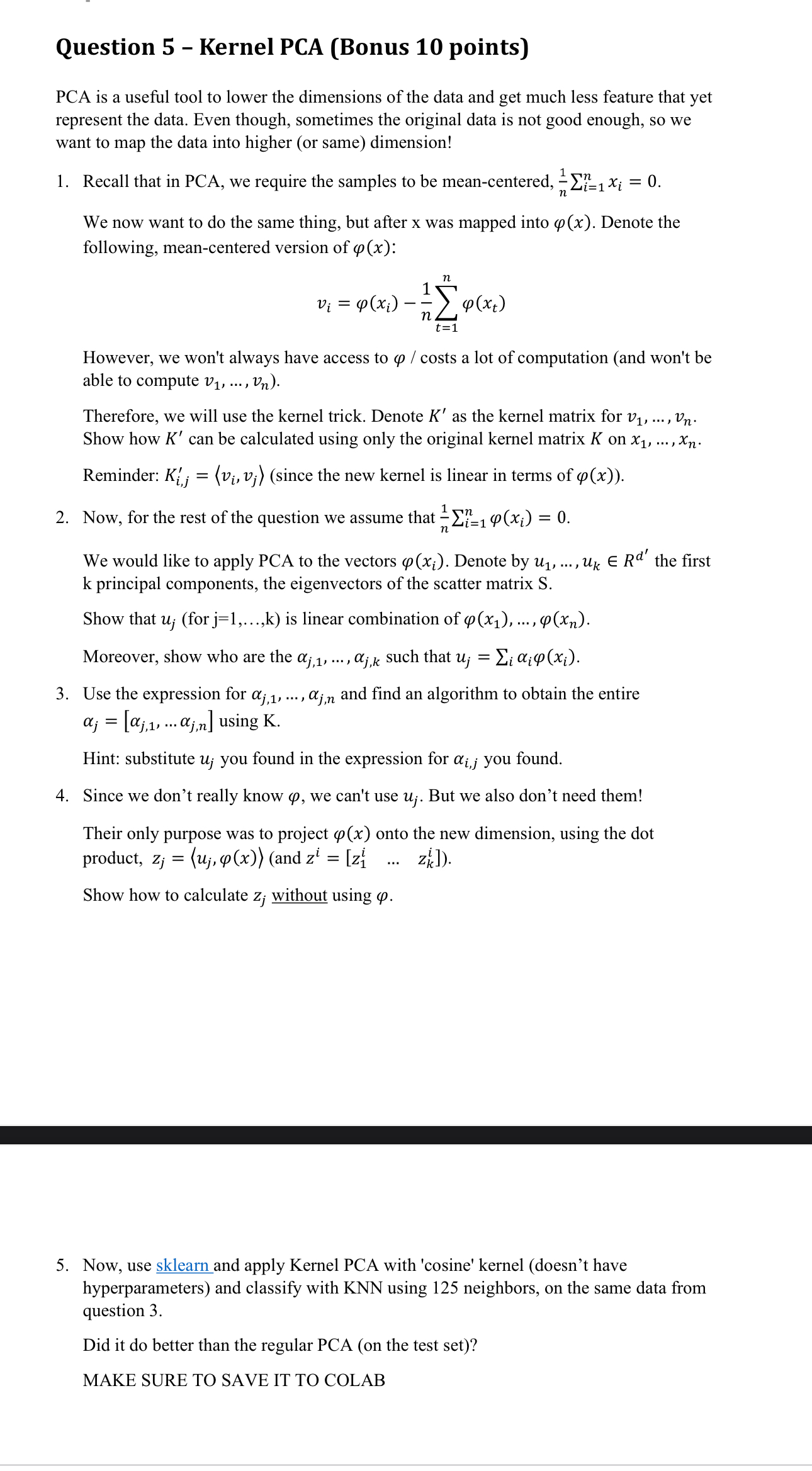

PCA is a useful tool for lowering the dimension of the data, yielding far fewer features that still represent the data. Sometimes, however, the original representation is not good enough, so we first want to map the data into a space of higher or equal dimension.

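Recall that in PCA, we require the samples to be mean-centered, i.e. $\frac{1}{m}\sum_{i=1}^{m} x_i = 0$.

We now want to do the same thing, but after $x$ was mapped by a feature map $\phi$ into the new space. Denote the following mean-centered version of $\phi$: $\tilde{\phi}(x_i) = \phi(x_i) - \frac{1}{m}\sum_{l=1}^{m} \phi(x_l)$.

However, we won't always have access to $\phi$ (it may cost a lot of computation), so we won't be able to compute $\tilde{\phi}(x_1), \dots, \tilde{\phi}(x_m)$ directly.

Therefore, we will use the kernel trick. Denote by $\tilde{K}$ the kernel matrix for $\tilde{\phi}(x_1), \dots, \tilde{\phi}(x_m)$. Show how $\tilde{K}$ can be calculated using only the original kernel matrix $K$ on $x_1, \dots, x_m$.

Reminder: $\tilde{K}_{ij} = \langle \tilde{\phi}(x_i), \tilde{\phi}(x_j) \rangle$, since the new kernel is linear in terms of $\tilde{\phi}$.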
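As a hedged numerical check of the centering step: writing $\phi$ for the feature map and $1_m$ for the $m \times m$ matrix whose entries are all $1/m$ (both names are notation assumed here), expanding the inner product of the centered features gives $\tilde{K} = K - 1_m K - K 1_m + 1_m K 1_m$. The sketch below compares that formula with explicit centering, using a linear kernel so that the features are available for comparison. The function name `center_kernel` and the random data are illustrative only.

```python
import numpy as np

def center_kernel(K):
    """Kernel matrix of the mean-centered features, computed from K alone."""
    m = K.shape[0]
    one_m = np.full((m, m), 1.0 / m)  # m x m matrix with every entry equal to 1/m
    return K - one_m @ K - K @ one_m + one_m @ K @ one_m

# Illustrative data: the rows of Phi play the role of phi(x_1), ..., phi(x_m).
rng = np.random.default_rng(0)
Phi = rng.normal(size=(6, 3))
K = Phi @ Phi.T                          # original (uncentered) kernel matrix

# Reference: center the features explicitly, then take inner products.
Phi_centered = Phi - Phi.mean(axis=0)
K_tilde_direct = Phi_centered @ Phi_centered.T

K_tilde = center_kernel(K)
print(np.allclose(K_tilde, K_tilde_direct))
```

The explicit features appear only in the reference computation; `center_kernel` itself never touches them, which is the point of the exercise.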

Now, for the rest of the question we assume that the mapped data is already mean-centered, i.e. $\sum_{i=1}^{m} \phi(x_i) = 0$.

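We would like to apply PCA to the mapped vectors $\phi(x_1), \dots, \phi(x_m)$. Denote by $v_1, \dots, v_k$ the first $k$ principal components, i.e. the top eigenvectors of the scatter matrix $S = \sum_{i=1}^{m} \phi(x_i)\phi(x_i)^T$.

Show that for $j = 1, \dots, k$, $v_j$ is a linear combination of $\phi(x_1), \dots, \phi(x_m)$.

Moreover, show what the coefficients $\alpha_{j1}, \dots, \alpha_{jm}$ are, such that $v_j = \sum_{i=1}^{m} \alpha_{ji}\,\phi(x_i)$.

Use the expression for $\alpha_{j1}, \dots, \alpha_{jm}$ and find an algorithm to obtain all of $\alpha_1, \dots, \alpha_k$ using only $K$.

Hint: substitute the expression for $v_j$ you found into the expression for $\alpha_{ji}$ you found.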
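One possible route, sketched under assumed notation ($\phi$ for the feature map, $\alpha_j$ for the coefficient vector of $v_j$, $\lambda_j$ for its eigenvalue): from $S v_j = \lambda_j v_j$ one gets $\alpha_{ji} = \frac{1}{\lambda_j}\langle \phi(x_i), v_j \rangle$, and substituting $v_j = \sum_l \alpha_{jl}\phi(x_l)$ gives $K\alpha_j = \lambda_j \alpha_j$. So the $\alpha_j$ are eigenvectors of $K$, rescaled by $1/\sqrt{\lambda_j}$ so that $\|v_j\| = 1$. The code below is a minimal sketch of that idea, not necessarily the intended solution. The helper `kernel_pca_alphas` is a hypothetical name, and a linear kernel is used so the result can be compared with the explicit scatter matrix.

```python
import numpy as np

def kernel_pca_alphas(K, k):
    """Top-k coefficient vectors alpha_j (as columns) and eigenvalues, from K only."""
    eigvals, eigvecs = np.linalg.eigh(K)      # K is symmetric; eigenvalues ascending
    order = np.argsort(eigvals)[::-1][:k]     # indices of the k largest eigenvalues
    lam = eigvals[order]
    U = eigvecs[:, order]                     # unit-norm eigenvectors of K
    alphas = U / np.sqrt(lam)                 # rescale so that each v_j has unit norm
    return alphas, lam

# Illustrative centered features; the rows play the role of phi(x_i).
rng = np.random.default_rng(1)
Phi = rng.normal(size=(8, 3))
Phi = Phi - Phi.mean(axis=0)
K = Phi @ Phi.T

alphas, lam = kernel_pca_alphas(K, k=2)

# Explicit check (only possible because Phi is known here):
V = Phi.T @ alphas                            # columns are v_j = sum_i alpha_ji phi(x_i)
S = Phi.T @ Phi                               # scatter matrix
print(np.allclose(S @ V, V * lam), np.allclose(V.T @ V, np.eye(2)))
```

The rescaling assumes the selected eigenvalues are strictly positive, which holds here because `k` does not exceed the rank of `K`.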

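Since we don't really know $\phi$, we can't use $v_1, \dots, v_k$ explicitly. But we also don't need them!

Their only purpose was to project $\phi(x)$ onto the new dimensions, using the dot product $\langle v_j, \phi(x) \rangle$.

Show how to calculate $\langle v_j, \phi(x) \rangle$ without using $\phi$.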
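A hedged sketch of the projection step: if $v_j = \sum_i \alpha_{ji}\phi(x_i)$ (with $\phi$ the feature map and $\alpha_j$ the coefficient vectors, notation assumed here), then $\langle v_j, \phi(x) \rangle = \sum_i \alpha_{ji}\, k(x_i, x)$, which needs only kernel evaluations between $x$ and the training points. The helper names below are hypothetical, and a linear kernel on centered data is used so the result can be compared with ordinary PCA.

```python
import numpy as np

def linear_kernel(A, B):
    """k(a, b) = <a, b> for every row a of A and every row b of B."""
    return A @ B.T

def kpca_project(X_train, X_new, alphas, kernel):
    """Entry (a, j) is <v_j, phi(x_new_a)> = sum_i alpha_ji * k(x_i, x_new_a)."""
    K_new = kernel(X_new, X_train)        # shape (n_new, m)
    return K_new @ alphas                 # shape (n_new, k)

rng = np.random.default_rng(2)
X_raw = rng.normal(size=(10, 4))
mean = X_raw.mean(axis=0)
X_train = X_raw - mean                    # centered, matching the centering assumption
X_new = rng.normal(size=(3, 4)) - mean    # new points shifted by the same mean

# Coefficients alpha_j from the eigen-decomposition of the training kernel matrix.
k = 2
eigvals, eigvecs = np.linalg.eigh(linear_kernel(X_train, X_train))
top = np.argsort(eigvals)[::-1][:k]
alphas = eigvecs[:, top] / np.sqrt(eigvals[top])

Z = kpca_project(X_train, X_new, alphas, linear_kernel)
print(Z.shape)
```

With a linear kernel these projections should match ordinary PCA projections up to the sign of each component. Swapping in another kernel function changes nothing else in `kpca_project`.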

Now, use sklearn to apply Kernel PCA with the 'cosine' kernel (which doesn't have hyperparameters) and classify with KNN, on the same data from the earlier question.

Did it do better than the regular PCA on the test set?
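A minimal sketch of how such a comparison could be set up in scikit-learn. The dataset, the number of components, and the number of neighbors are not available in the text above, so `load_digits`, `N_COMPONENTS = 20`, and `N_NEIGHBORS = 5` are placeholder assumptions to be replaced with the assignment's actual data and settings.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA, KernelPCA
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# Placeholder data (assumption): substitute the data from the earlier question.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

N_COMPONENTS = 20   # assumption: not given in the text
N_NEIGHBORS = 5     # assumption: not given in the text

# Kernel PCA with the cosine kernel, followed by KNN.
kpca_knn = make_pipeline(
    KernelPCA(n_components=N_COMPONENTS, kernel="cosine"),
    KNeighborsClassifier(n_neighbors=N_NEIGHBORS),
)
# Regular PCA with the same number of components, followed by the same KNN.
pca_knn = make_pipeline(
    PCA(n_components=N_COMPONENTS),
    KNeighborsClassifier(n_neighbors=N_NEIGHBORS),
)

kpca_knn.fit(X_train, y_train)
pca_knn.fit(X_train, y_train)

kpca_acc = kpca_knn.score(X_test, y_test)   # test-set accuracy
pca_acc = pca_knn.score(X_test, y_test)
print(f"Kernel PCA (cosine) + KNN test accuracy: {kpca_acc:.3f}")
print(f"PCA + KNN test accuracy:                 {pca_acc:.3f}")
```

Using the same split, component count, and neighbor count for both pipelines keeps the comparison fair. Which method wins depends on the data, so the answer has to come from running it on the assignment's dataset.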

MAKE SURE TO SAVE IT TO COLAB
