
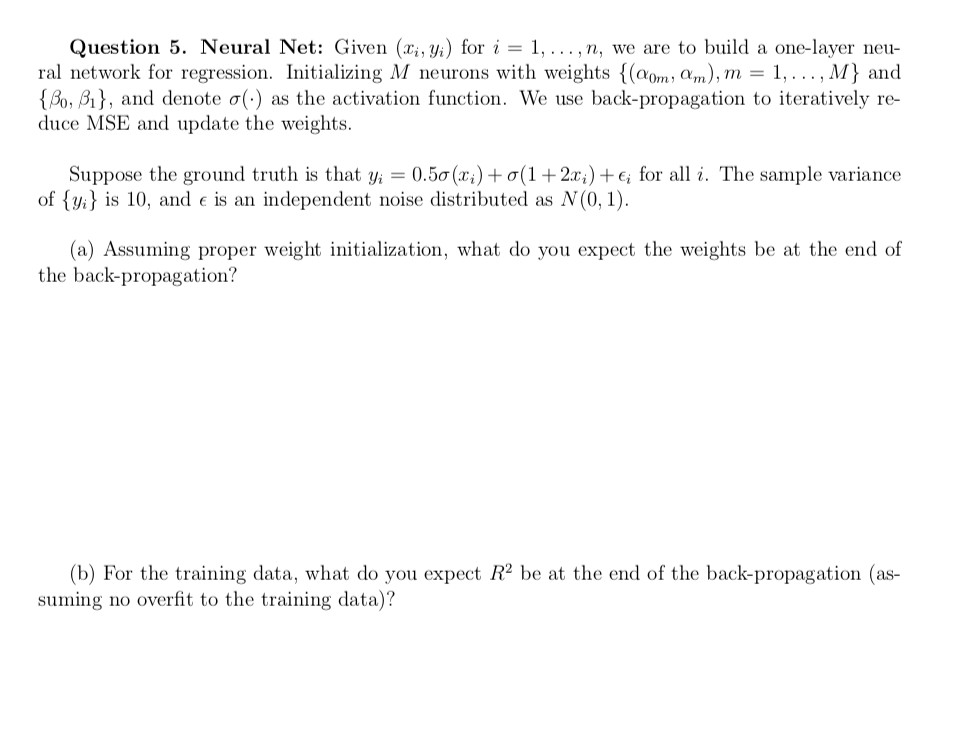

Question 5. Neural Net: Given $(x_i, y_i)$ for $i = 1, \ldots, n$, we are to build a one-layer neural network for regression. We initialize $M$ neurons with weights $\{(\alpha_{0m}, \alpha_m),\ m = 1, \ldots, M\}$ and $\{\beta_0, \beta_1\}$, and denote $\sigma(s)$ as the activation function. We use back-propagation to iteratively reduce the MSE and update the weights. Suppose the ground truth is that $y_i = 0.5\,\sigma(x_i) + \sigma(1 + 2x_i) + \varepsilon_i$ for all $i$. The sample variance of $\{y_i\}$ is 10, and $\varepsilon_i$ is independent noise distributed as $N(0, 1)$.

(a) Assuming proper weight initialization, what do you expect the weights to be at the end of the back-propagation?

(b) For the training data, what do you expect $R^2$ to be at the end of the back-propagation (assuming no overfit to the training data)?
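To make the setup concrete, here is a minimal sketch of the network's forward pass and of the arithmetic behind part (b). Several things here are assumptions not stated in the question: the logistic sigmoid is used for the activation, `M = 2` neurons are used, the output weights are held in a list `beta` with one entry per neuron, and the function names `predict` and `expected_r2` are hypothetical. The $R^2$ estimate also assumes the fitted network recovers the noiseless signal, so the residual variance is roughly the noise variance.

```python
import math


def sigmoid(s: float) -> float:
    """Logistic activation (an assumption; the question only names it sigma)."""
    return 1.0 / (1.0 + math.exp(-s))


def predict(x, alpha0, alpha, beta0, beta):
    """One hidden layer: beta0 + sum_m beta[m] * sigmoid(alpha0[m] + alpha[m] * x)."""
    hidden = [sigmoid(a0 + a * x) for a0, a in zip(alpha0, alpha)]
    return beta0 + sum(b * h for b, h in zip(beta, hidden))


def expected_r2(var_y: float, noise_var: float) -> float:
    """R^2 = 1 - SSE/SST, approximated as 1 - Var(noise) / Var(y)."""
    return 1.0 - noise_var / var_y


# One weight setting (with M = 2) that reproduces the noiseless ground truth
# 0.5 * sigma(x) + sigma(1 + 2x). It is not unique: swapping the two neurons
# gives the same function.
alpha0 = [0.0, 1.0]
alpha = [1.0, 2.0]
beta0 = 0.0
beta = [0.5, 1.0]

signal_at_zero = predict(0.0, alpha0, alpha, beta0, beta)
r2 = expected_r2(var_y=10.0, noise_var=1.0)  # 1 - 1/10
```

Under these assumptions, a network that has learned the signal leaves only the $N(0, 1)$ noise unexplained, which is where the value $1 - 1/10$ comes from. With more than two neurons, the extra ones would be expected to contribute little or nothing to the output.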
