Question 6 (1.5 points)


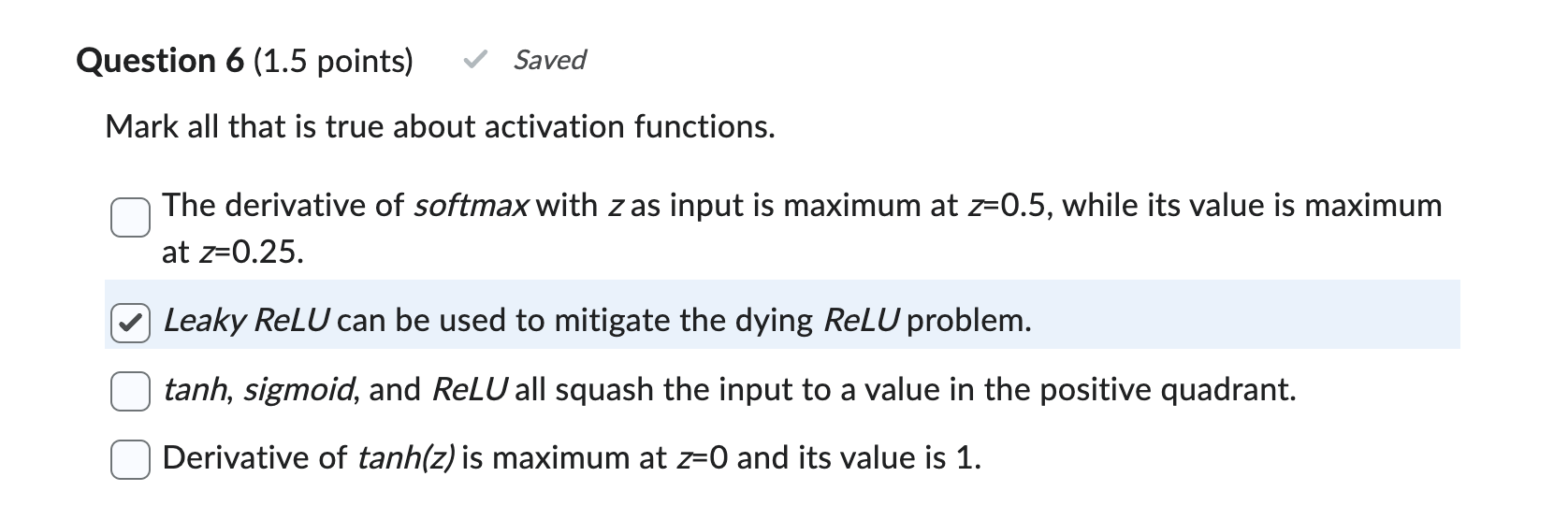

Mark all that is true about activation functions.

The derivative of softmax with z as input is maximum at while its value is maximum at

Leaky ReLU can be used to mitigate the dying ReLU problem.

tanh, sigmoid, and ReLU all squash the input to a value in the positive quadrant.

Derivative of is maximum at and its value is
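
The statements about output ranges and Leaky ReLU can be checked numerically. The following is a minimal sketch in plain Python and is not part of the original question; the helper names and the negative-side slope `alpha=0.01` are illustrative assumptions.

```python
import math

def sigmoid(x):
    # Logistic sigmoid: output lies strictly between 0 and 1.
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    # ReLU: zero for non-positive inputs, identity otherwise.
    return max(0.0, x)

def relu_grad(x):
    # Gradient of ReLU: exactly zero for negative inputs.
    return 1.0 if x > 0 else 0.0

def leaky_relu(x, alpha=0.01):
    # alpha is an assumed small slope for negative inputs.
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    # Gradient stays at alpha (non-zero) for negative inputs.
    return 1.0 if x > 0 else alpha

xs = [-3.0, -1.0, 0.0, 1.0, 3.0]
tanh_vals = [math.tanh(x) for x in xs]
sigmoid_vals = [sigmoid(x) for x in xs]
relu_vals = [relu(x) for x in xs]

print("tanh:   ", tanh_vals)
print("sigmoid:", sigmoid_vals)
print("relu:   ", relu_vals)
print("relu grad at -1:      ", relu_grad(-1.0))
print("leaky relu grad at -1:", leaky_relu_grad(-1.0))
```

tanh returns negative values for negative inputs, so it does not map everything to positive outputs, whereas sigmoid and ReLU never go below zero. For a negative input the ReLU gradient is zero, while the Leaky ReLU gradient stays at `alpha`, which is why it is used against the dying ReLU problem.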
