
Question: Gradient Descent with Square Loss

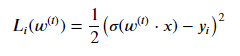

Define square loss as

$$L_t(w) = (w \cdot x_t - y_t)^2$$
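Assume the per-example square loss is $L_t(w) = (w \cdot x_t - y_t)^2$ and that gradient descent minimizes its average $\hat{L}(w)$ over the $m$ training examples (an assumption; the question does not say whether the loss is summed or averaged). The chain rule then gives this gradient and update:

$$\nabla_w L_t(w) = 2\,(w \cdot x_t - y_t)\,x_t$$

$$\nabla_w \hat{L}(w) = \frac{1}{m}\sum_{t=1}^{m} \nabla_w L_t(w) = \frac{2}{m}\,X^\top (Xw - y)$$

$$w_{k+1} = w_k - \eta\,\nabla_w \hat{L}(w_k), \qquad \eta = 0.01,\quad k = 0, \dots, 999$$

Here $X$ stacks the $x_t$ as rows and $y$ collects the labels $y_t$.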

Algorithm 2 is gradient descent with respect to square loss (code this up yourself -- run for 1000 iterations, use step size eta = 0.01).
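A minimal NumPy sketch of Algorithm 2, assuming it is full-batch gradient descent on the *average* square loss, started from w = 0, with η = 0.01 and 1000 iterations. The helper names `square_loss`, `square_loss_grad` and `gradient_descent_square_loss` are hypothetical, and the synthetic data in the usage part only imitates the question's generator.

```python
import numpy as np


def square_loss(w, X, y):
    """Average square loss: (1/m) * sum_t (w . x_t - y_t)^2."""
    residuals = X @ w - y
    return np.mean(residuals ** 2)


def square_loss_grad(w, X, y):
    """Gradient of the average square loss: (2/m) * X^T (X w - y)."""
    m = X.shape[0]
    return (2.0 / m) * (X.T @ (X @ w - y))


def gradient_descent_square_loss(X, y, eta=0.01, n_iters=1000):
    """Full-batch gradient descent starting from w = 0 (assumed starting point).

    Returns the final weights and the loss recorded before each update.
    """
    w = np.zeros(X.shape[1])
    losses = []
    for _ in range(n_iters):
        losses.append(square_loss(w, X, y))
        w = w - eta * square_loss_grad(w, X, y)
    return w, losses


# Usage on synthetic data shaped like the question's dataset (50 samples,
# 10 features, 0/1 labels from a logistic model); illustrative only.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 10))
w_true = rng.normal(size=10)
p = 1 / (1 + np.exp(-X @ (w_true / np.linalg.norm(w_true))))
y = (rng.random(50) < p).astype(float)

w_hat, losses = gradient_descent_square_loss(X, y, eta=0.01, n_iters=1000)
print(f"loss at start: {losses[0]:.4f}, after 1000 steps: {square_loss(w_hat, X, y):.4f}")
```

The average square loss is a convex quadratic, and a step size this small is well inside its stable range for data like this, so the recorded losses should decrease at every iteration. If Algorithm 2 actually sums the per-example losses instead of averaging them, drop the `1/m` factor (equivalently, scale η by m).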

Here is the code for the dataset I have generated:

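```python
import random

import numpy as np
import pandas as pd


def generate_data(m):
    # Random direction in R^10, normalized to unit length.
    w = np.random.normal(0, 1, 10)
    norm = np.linalg.norm(w)
    norm_w = w / norm
    x_i = []
    y_i = []
    a = [0, 1]
    # range(1, m) runs m - 1 times, so generate_data(51) yields 50 samples.
    for i in range(1, m):
        x = np.random.normal(0, 1, 10)
        x_i.append(x)
        b = np.dot(x, norm_w)
        sigma = 1 / (1 + np.exp(-b))  # P(y = 1 | x)
        p_y1 = sigma
        p_y0 = 1 - sigma
        # random.choices returns a one-element list; [0] keeps the label scalar.
        c = random.choices(a, weights=[p_y0, p_y1])[0]
        y_i.append(c)
    df = pd.DataFrame(x_i)
    df['y_i'] = y_i
    return df


dataset = generate_data(51)
```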
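Before running gradient descent, the feature columns and the `y_i` column have to become NumPy arrays. Below is a minimal sketch, assuming the DataFrame layout produced by `generate_data`: 10 integer-named feature columns plus `y_i`. `dataframe_to_arrays` is a hypothetical helper. It also unwraps labels stored as one-element lists, which is what the generator can end up storing if it appends the raw result of `random.choices` without `[0]`.

```python
import numpy as np
import pandas as pd


def dataframe_to_arrays(df, label_col="y_i"):
    """Split a DataFrame laid out like generate_data's output into (X, y).

    Every column except label_col is treated as a feature. Labels stored as
    one-element lists are unwrapped to scalars.
    """
    X = df.drop(columns=[label_col]).to_numpy(dtype=float)
    y = np.array([v[0] if isinstance(v, list) else v for v in df[label_col]], dtype=float)
    return X, y


# Small stand-in with the same layout: 10 feature columns plus 'y_i',
# using list-wrapped labels to exercise the unwrapping branch.
demo = pd.DataFrame(np.arange(30, dtype=float).reshape(3, 10))
demo["y_i"] = pd.Series([[1], [0], [1]])
X, y = dataframe_to_arrays(demo)
print(X.shape, y)  # (3, 10) [1. 0. 1.]
```

For `generate_data(51)`, `X, y = dataframe_to_arrays(dataset)` gives a 50 × 10 feature matrix and a length-50 label vector.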

Please provide the Python code for gradient descent with square loss, as defined by the equation above. :)

