Question: Read about automatic differentiation in "Machine Learning Refined", Appendix B. You can find that online here: https://jermwatt.github.io/machine_learning_refined/notes/3_First_order_methods/3_5_Automatic.html

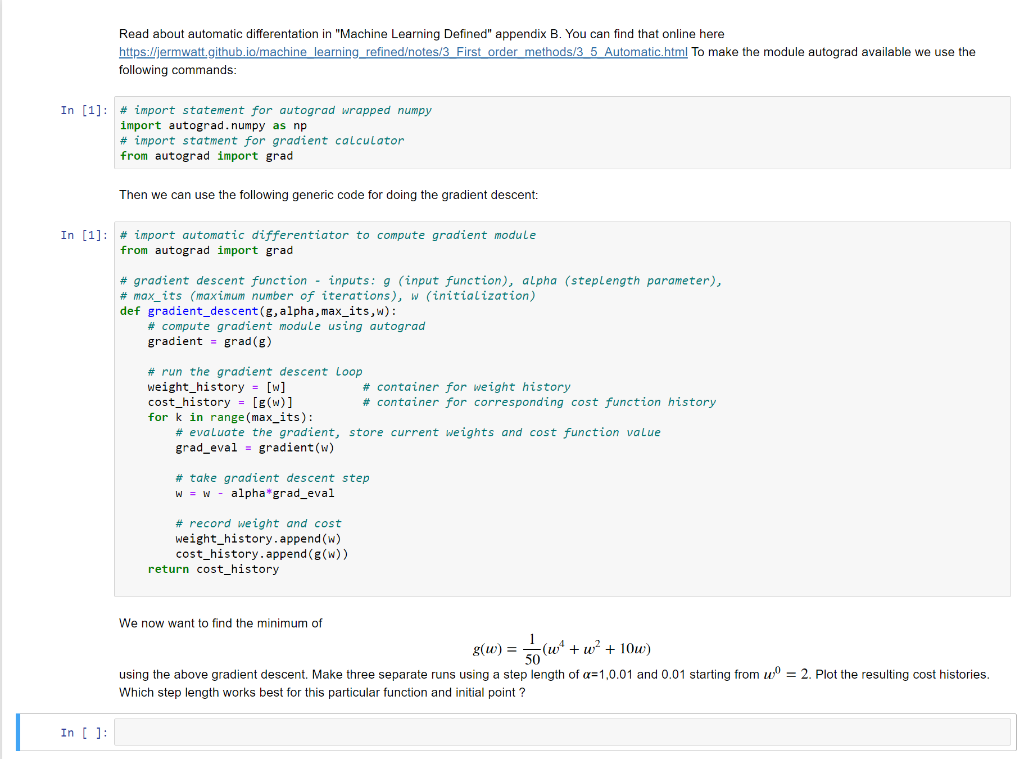

Read about automatic differentiation in "Machine Learning Refined", Appendix B. You can find that online here: https://jermwatt.github.io/machine_learning_refined/notes/3_First_order_methods/3_5_Automatic.html

To make the module autograd available we use the following commands:

    # import statement for autograd wrapped numpy
    import autograd.numpy as np

    # import statement for gradient calculator
    from autograd import grad

Then we can use the following generic code for doing the gradient descent:

    # import automatic differentiator to compute gradient module
    from autograd import grad

    # gradient descent function - inputs: g (input function), alpha (steplength parameter),
    # max_its (maximum number of iterations), w (initialization)
    def gradient_descent(g, alpha, max_its, w):
        # compute gradient module using autograd
        gradient = grad(g)

        # run the gradient descent loop
        weight_history = [w]     # container for weight history
        cost_history = [g(w)]    # container for corresponding cost function history
        for k in range(max_its):
            # evaluate the gradient, store current weights and cost function value
            grad_eval = gradient(w)

            # take gradient descent step
            w = w - alpha*grad_eval

            # record weight and cost
            weight_history.append(w)
            cost_history.append(g(w))
        return cost_history

We now want to find the minimum of g(w) = (w^4 + w^2 + 10w) using the above gradient descent. Make three separate runs using a step length of α = 1, 0.01 and 0.01 starting from w^0 = 2. Plot the resulting cost histories. Which step length works best for this particular function and initial point?
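One way to sanity-check the three runs before plotting is sketched below. It is a rough sketch under several assumptions, not the expected solution: the objective is read as g(w) = w^4 + w^2 + 10w (the exponents 4 and 2 are an assumption), the iteration count of 1000 is an assumption, and because the question lists 0.01 twice, the middle step length 0.1 is an assumption as well. To stay free of extra packages it swaps autograd's `grad(g)` for a hand-derived derivative `dg`; the helper name `gradient_descent_manual` is hypothetical.

```python
import math

def g(w):
    # Assumed objective: the exponents 4 and 2 are a reading of the question, not confirmed.
    return w**4 + w**2 + 10*w

def dg(w):
    # Hand-derived derivative of the assumed g; stands in for autograd's grad(g).
    return 4*w**3 + 2*w + 10

def gradient_descent_manual(g, dg, alpha, max_its, w):
    # Same update rule as the question's gradient_descent, with two changes:
    # the derivative is passed in explicitly, and the loop stops once the
    # cost is no longer finite (the run has diverged).
    cost_history = [g(w)]
    for _ in range(max_its):
        try:
            w = w - alpha * dg(w)   # gradient descent step
            cost = g(w)
        except OverflowError:
            # Python floats raise on overflow in ** instead of returning inf.
            cost = math.inf
        if not math.isfinite(cost):
            cost_history.append(math.inf)  # mark the run as diverged
            break
        cost_history.append(cost)
    return cost_history

# 1.0 and 0.01 come from the question; 0.1 is an assumed third value.
step_lengths = (1.0, 0.1, 0.01)
max_its = 1000   # assumed; the question does not state an iteration count
w0 = 2.0         # initial point from the question, written as a float

histories = {a: gradient_descent_manual(g, dg, a, max_its, w0) for a in step_lengths}

for a, hist in histories.items():
    print(f"alpha={a}: {len(hist) - 1} steps recorded, final cost {hist[-1]}")
```

Under these assumptions only the smallest step length keeps the cost finite; the two larger ones overshoot and blow up within a few iterations. If the original objective carries a constant prefactor, the ranking of step lengths can change, so treat this as a check on the method rather than the answer. Each list in `histories` can be passed straight to a plotting call to produce the requested cost-history plot. When switching back to the autograd version, it is probably safest to pass the starting point as `2.0` rather than `2`, since autograd differentiates with respect to floats.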
