Question: Review the Research Process and Evaluation Research Steps found on pages 316-318. Select something that strikes you as particularly important it all is important and

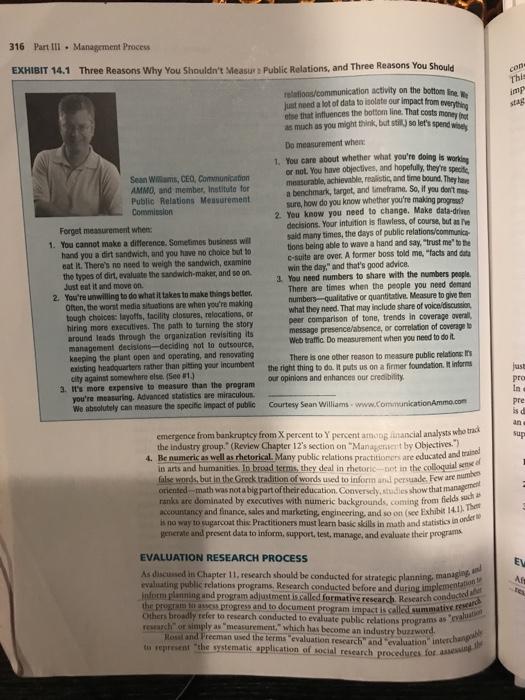

Review the Research Process and Evaluation Research Steps found on pages 316-318. Select something that strikes you as particularly important (it all is important) and describe it. How have you seen this part of the process or step used?

"...emergence from bankruptcy from X percent to Y percent among financial analysts who track the industry group." (Review Chapter 12's section on "Management by Objectives.")

4. Be numeric as well as rhetorical. Many public relations practitioners are educated and trained in arts and humanities. In broad terms, they deal in rhetoric, not in the colloquial sense of [illegible], but in the Greek tradition of words used to inform and persuade. Few are numbers oriented; math was not a big part of their education. Conversely, studies show that management [illegible] accountancy and finance, sales and marketing, engineering, and so on (see Exhibit 14.1). There is no way to sugarcoat this: Practitioners must learn basic skills in math and statistics in order to generate and present data to inform, support, test, manage, and evaluate their programs.

EXHIBIT 14.1 Three Reasons Why You Shouldn't Measure Public Relations, and Three Reasons You Should

Sean Williams, CEO, Communication AMMO, and member, Institute for Public Relations Measurement Commission

Forget measurement when

1. You cannot make a difference. Sometimes business will hand you a dirt sandwich, and you have no choice but to eat it. There's no need to weigh the sandwich, examine the types of dirt, evaluate the sandwich-maker, and so on. Just eat it and move on.
2. You're unwilling to do what it takes to make things better. Often, the worst media situations are when you're making tough choices: layoffs, facility closures, relocations, or hiring more executives. The path to turning the story around leads through the organization revisiting its management decisions: deciding not to outsource, keeping the plant open and operating, and renovating existing headquarters rather than pitting your incumbent city against somewhere else. (See #1.)
3. It's more expensive to measure than the program you're measuring. Advanced statistics are miraculous. We absolutely can measure the specific impact of public relations/communication activity on the bottom line, or on [illegible] that influences the bottom line. That costs money (not as much as you might think, but still), so let's spend wisely.

Do measurement when

1. You care about whether what you're doing is working or not. You have objectives, and hopefully they're specific, measurable, achievable, realistic, and time bound. They have a benchmark, target, and timeframe. So, if you don't measure, how do you know whether you're making progress?
2. You know you need to change. Make data-driven decisions. Your intuition is flawless, of course, but as I've said many times, the days of public relations/communications being able to wave a hand and say "trust me" to the c-suite are over. A former boss told me, "facts and [illegible] win the day," and that's good advice.
3. You need numbers to share with the numbers people. There are times when the people you need demand numbers, qualitative or quantitative. Measure to give them what they need. That may include share of voice/discussion, comparison of tone, trends in coverage overall, message presence/absence, or correlation of coverage with Web traffic.

Do measurement when you need to do it. There is one other reason to measure public relations: it is the right thing to do. It puts us on a firmer foundation. It informs our opinions and enhances our credibility.

Courtesy Sean Williams, www.communicationAmmo.com

EVALUATION RESEARCH PROCESS

As discussed in Chapter 11, research should be conducted for strategic planning, managing, and evaluating public relations programs. Research conducted before and during implementation to inform planning and program adjustment is called formative research. Research conducted to assess program progress and to document program impact is called summative research. Others broadly refer to research conducted to evaluate public relations programs as "evaluation research" or simply as "measurement," which has become an industry buzzword. Rossi and Freeman used the terms evaluation research and evaluation interchangeably for the systematic application of social research procedures for assessing the conceptualization, design, implementation, and utility of social intervention programs.

This definition emphasizes the focus of evaluation on the preparation, implementation, and impact of public relations programs (see pages 240-42). Furthermore, it explains the various stages by outlining the basic questions posed in evaluation:

Program conceptualization and design: What is the extent and distribution of the target problem and/or population? Is the program designed in conformity with intended goals, is there a coherent rationale underlying it, and have chances of successful delivery been maximized? What are projected or existing costs, and what is their relation to benefits and effectiveness?

Monitoring and accountability of program implementation: Is the program reaching the specified target population or target area? Are the intervention efforts being conducted as specified in the program design?

Assessment of program utility (impact and efficiency): Is the program effective in achieving its intended goals? Can the results of the program be explained by some alternative process that does not include the program? Is the program having some effects that were not intended? What are the costs to deliver services and benefits to program participants? Is the program an efficient use of resources, compared with alternative uses of the resources?

Evaluation research should be used to learn what happened and why, not to prove or justify something already done or decided.
For example, one organization set up an evaluation project for the sole purpose of justifying the firing of its senior communication officer. In other cases, public relations and other communication staff do evaluation research with a predetermined objective of supporting their decisions and programs. True evaluation research is done to gather information honestly and objectively, to provide data for decision making with an open mind. "Symbolic evaluation," on the other hand, is conducted to provide managers with supportive data from what can be called "pseudoresearch." Program managers use pseudoresearch for three reasons:

1. Organizational politics: Research is used solely to gain power, justify decisions already made, or serve as a scapegoat.
2. Service promotion: Pseudoresearch is undertaken, often in a slanted way, to promote products or services and impress clients or prospects.
3. Personal satisfaction: Pseudoresearch is done as an ego-bolstering activity to keep up with fads or to demonstrate acquired skills.

In the long haul, these spurious efforts are self-defeating.

EVALUATION RESEARCH STEPS

After preparing themselves to undertake program evaluation and reviewing the evaluation research process, public relations managers must implement the following 10 steps:

1. Establish agreement on the uses and purposes of the evaluation. Without such agreement, research often produces volumes of unused and often useless data. Commit to paper the problem, concern, or question that motivates the research effort. Next, detail how research findings will be used. To avoid buying canned or off-the-shelf services, these written statements are doubly important when hiring outside research specialists.
2. Secure organizational commitment to evaluation and make research basic to the program. Evaluation cannot be tacked on as an afterthought.
Build research into the entire process, with sufficient resources to make it central to the problem definition, planning and programming, implementation, and evaluation steps.
3. Develop consensus on using evaluation research within the department. Even practitioners not eager to trade in their notions of public relations as creative activity dealing with "intangibles" must be part of the effort. They have to accept that research is a necessity to build the strategic foundation, although it will not completely replace their creativity and lessons gained from experience.
4. Write program objectives in observable and measurable terms. Without measurable outcomes specified in the program objectives, evaluation research cannot be designed to evaluate program impact. If an objective outcome cannot be measured, it is not useful. The evaluation imperative forces clarity and precision in the planning process, particularly when writing specific objectives for each of the target publics.
5. Select the most appropriate criteria. Objectives spell out intended outcomes. If increasing awareness of an organization's support of local charities is stated in an objective, for example, then column inches and favorable mentions in the media are not appropriate measures of the knowledge outcome sought. Identify what changes in knowledge, opinions, attitudes, and behaviors are specified in the objectives before gathering evidence. The same applies when the program seeks to maintain existing levels of desired states. (Review the "Writing Program Objectives" section of Chapter 12, pages 270-73.)
6. Determine the best way to gather evidence. Surveys are not always the best way to find out about program impact. Sometimes organizational records contain the evidence needed. In other cases, a field experiment or case study may be the only way to test and evaluate a program. There is no single right way to gather data for evaluations.
The method used depends on (1) the question and purposes motivating the evaluation; (2) the outcome criteria specified in the objectives; and (3) the cost of different research approaches resulting from the program complexity, setting, or both.
7. Keep complete program records. Program strategies and materials are real-world expressions of practitioners' working theories of cause and effect. Complete documentation helps identify what worked and what did not work. Records help reduce the impact of selective perception and personal bias when reconstructing the strategy and tactics that contributed to program success or failure.
8. Use evaluation findings to manage the program. Each cycle of the program process can be more effective than the preceding cycle if the results of evaluation are used to make adjustments. Problem statements and situation analyses should be more detailed and precise with the addition of new evidence from the evaluation. Revised goals and objectives should reflect what was learned. Action and communication strategies can be continued, fine-tuned, or discarded on the basis of knowledge of what did and did not work.
9. Report evaluation results to management. Develop a procedure for regularly reporting to line and staff managers. Documented results and adjustments based on evidence illustrate that public relations is being managed to contribute to achieving organizational goals or the "bottom line."
10. Add to professional knowledge. Scientific management of public relations leads to greater understanding of the process and its effects. Most program evaluation tends to be specific to a particular organization and time, but some findings are cross-situational. For example, findings about how many employees learned about a proposed reorganization from an article may be relevant only to that one article and organization. On the other hand, learning that employees want more information about organizational plans not only provides guidance for future communications but also may apply in other organizations. Sharing with colleagues the knowledge gained from relevant research distinguishes the professional practice from the technical crafts practiced under the public relations rubric.
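
Step 4 and Exhibit 14.1 both ask for objectives that carry a benchmark, a target, and a timeframe. As a minimal sketch of what that could look like in practice, the Python below records one such objective and reports how much of the benchmark-to-target distance has been covered. The `Objective` class, its field names, and the sample numbers are hypothetical illustrations and are not taken from the textbook.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Objective:
    """A program objective stated in measurable terms (hypothetical structure)."""
    outcome: str      # what should change, e.g. awareness among a target public
    benchmark: float  # level measured before the program, in percent
    target: float     # level the program aims to reach, in percent
    deadline: date    # date by which the target should be reached

    def progress(self, measured: float) -> float:
        """Fraction of the benchmark-to-target distance covered so far."""
        span = self.target - self.benchmark
        if span == 0:
            # "Maintain the existing level" objective: full credit only
            # while the measured level stays at or above the target.
            return 1.0 if measured >= self.target else 0.0
        return (measured - self.benchmark) / span

    def is_met(self, measured: float, as_of: date) -> bool:
        """True when the target is reached on or before the deadline."""
        if as_of > self.deadline:
            return False
        if self.target >= self.benchmark:
            return measured >= self.target  # objective is to raise or maintain
        return measured <= self.target      # objective is to reduce


if __name__ == "__main__":
    # Made-up numbers: raise awareness from 40 percent to 60 percent by year end.
    awareness = Objective("awareness of charity support", 40.0, 60.0, date(2025, 12, 31))
    print(awareness.progress(55.0))                      # share of the gap closed
    print(awareness.is_met(55.0, date(2025, 6, 30)))     # target not yet reached
```

Treating a "maintain" objective as met while the measured level stays at or above the target is one possible convention; a program could just as reasonably allow a tolerance band around the benchmark.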