Question: RNN Components (3 points possible, graded)

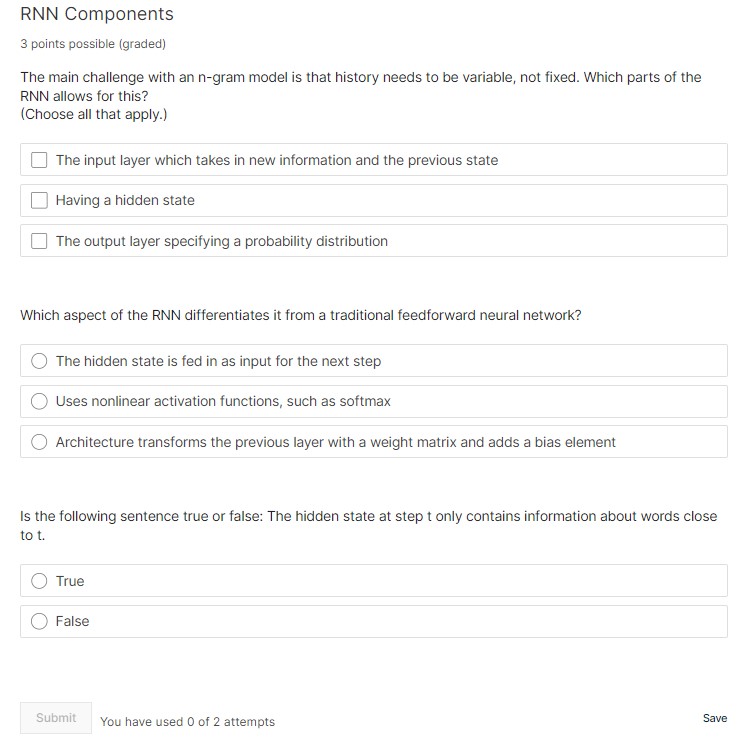

The main challenge with an n-gram model is that history needs to be variable, not fixed. Which parts of the RNN allow for this?

Choose all that apply.

The input layer which takes in new information and the previous state

Having a hidden state

The output layer specifying a probability distribution

Which aspect of the RNN differentiates it from a traditional feedforward neural network?

The hidden state is fed in as input for the next step

Uses nonlinear activation functions, such as softmax

Architecture transforms the previous layer with a weight matrix and adds a bias element

Is the following sentence true or false: The hidden state at step t only contains information about words close to t.

True

False
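
For readers who want something concrete to reason with, the recurrence these questions refer to can be sketched in a few lines. This is a minimal illustration, assuming a simple Elman-style update h_t = tanh(W_x x_t + W_h h_{t-1} + b) with a softmax output layer. The function names (`rnn_step`, `run_rnn`) and the toy weights are hypothetical and do not come from the course material.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One assumed Elman-style update: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""
    h = []
    for i in range(len(b)):
        s = b[i]
        s += sum(W_x[i][j] * x[j] for j in range(len(x)))            # new input
        s += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # previous state
        h.append(math.tanh(s))
    return h

def softmax(z):
    """Turn scores into a probability distribution (numerically stable form)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def run_rnn(inputs, W_x, W_h, b, W_o):
    """Process a sequence of any length, reusing the same weights at every step."""
    h = [0.0] * len(b)  # initial hidden state
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)  # the state is fed back in at the next step
    logits = [sum(W_o[i][k] * h[k] for k in range(len(h))) for i in range(len(W_o))]
    return h, softmax(logits)

# Hypothetical toy weights, chosen only for illustration.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.9, 0.0], [0.2, 0.7]]
b = [0.0, 0.0]
W_o = [[1.0, -1.0], [-1.0, 1.0]]

# Two sequences that differ only in their first element.
seq_a = [[1.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
seq_b = [[0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
h_a, p_a = run_rnn(seq_a, W_x, W_h, b, W_o)
h_b, p_b = run_rnn(seq_b, W_x, W_h, b, W_o)
print(h_a, h_b)
```

In this toy setup the two final hidden states differ even though the sequences are identical after the first step, so the earliest input still influences the state at the last step. The same `run_rnn` call also accepts sequences of any length without changing the weights. How strongly early inputs persist in a trained network depends on the weights and is not something this sketch establishes.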
