Question: Section C - Concepts in Apache Spark and Distributed Computing Please answer in full sentence(s) (max 3), per question, as appropriate. Use the handout to

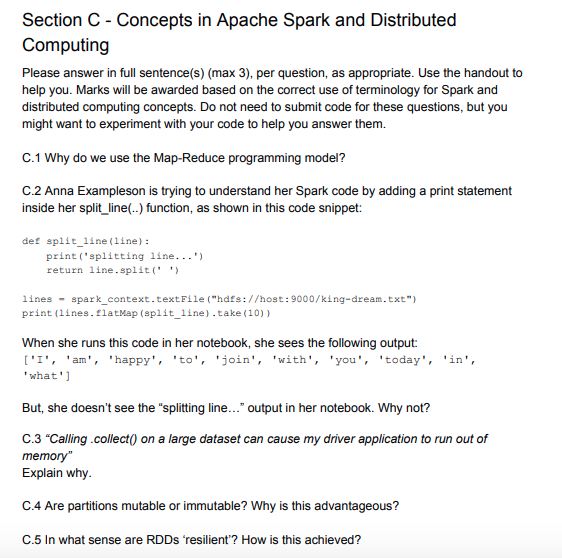

Section C - Concepts in Apache Spark and Distributed Computing Please answer in full sentence(s) (max 3), per question, as appropriate. Use the handout to help you. Marks will be awarded based on the correct use of terminology for Spark and distributed computing concepts. Do not need to submit code for these questions, but you might want to experiment with your code to help you answer them. C.1 Why do we use the Map-Reduce programming model? C.2 Anna Exampleson is trying to understand her Spark code by adding a print statement inside her split_line (..) function, as shown in this code snippet: def split_line(line): print('splitting line...' return line.split("") lines - spark_context.textFile("hdfs://host:9000/king-dream.txt") print(lines. flatMap (split_line).take (10) When she runs this code in her notebook, she sees the following output: ['I', 'am', 'happy', 'to', 'join', 'with', 'you', 'today', 'in', what'] But, she doesn't see the "splitting line..." output in her notebook. Why not? C.3 "Calling .collect() on a large dataset can cause my driver application to run out of memory" Explain why. C.4 Are partitions mutable or immutable? Why is this advantageous? C.5 In what sense are RDDs 'resilient'? How is this achieved

Step by Step Solution

There are 3 Steps involved in it

Get step-by-step solutions from verified subject matter experts