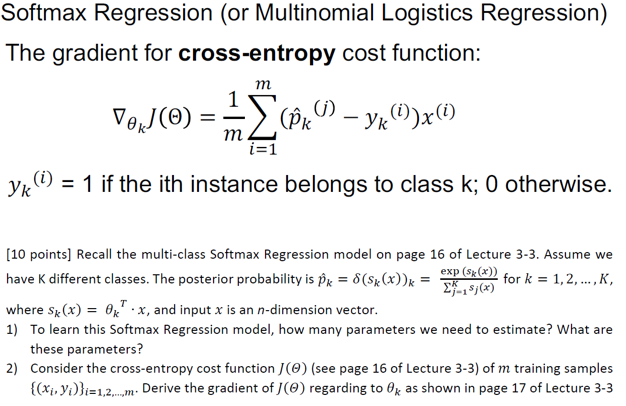

Softmax Regression (or Multinomial Logistic Regression)

The gradient for the cross-entropy cost function:

$$\nabla_{\theta_k} J(\Theta) = \frac{1}{m}\sum_{i=1}^{m}\left(\hat{p}_k^{(i)} - y_k^{(i)}\right)\mathbf{x}^{(i)}$$

where $y_k^{(i)} = 1$ if the $i$-th instance belongs to class $k$, and $0$ otherwise.

[10 points] Recall the multi-class Softmax Regression model on page 16 of Lecture 3-3. Assume we have $K$ different classes. The posterior probability is

$$\hat{p}_k = \sigma\big(\mathbf{s}(\mathbf{x})\big)_k = \frac{\exp\big(s_k(\mathbf{x})\big)}{\sum_{j=1}^{K}\exp\big(s_j(\mathbf{x})\big)}, \qquad k = 1, 2, \ldots, K,$$

where $s_k(\mathbf{x}) = \theta_k \cdot \mathbf{x}$ and the input $\mathbf{x}$ is an $n$-dimensional vector.

1) To learn this Softmax Regression model, how many parameters do we need to estimate? What are these parameters?
2) Consider the cross-entropy cost function $J(\Theta)$ (see page 16 of Lecture 3-3) of $m$ training samples $\{(\mathbf{x}^{(i)}, y^{(i)})\}_{i=1,2,\ldots,m}$. Derive the gradient of $J(\Theta)$ with respect to $\theta_k$, as shown on page 17 of Lecture 3-3.
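A sketch of one standard answer, under stated assumptions: $\mathbf{x}$ is the $n$-dimensional input as given, with no separate bias term; the labels are one-hot, so $\sum_{j} y_j^{(i)} = 1$; and the cost is the usual cross-entropy written below (the question cites page 16 of Lecture 3-3 for it but does not reproduce it).

1) There is one parameter vector $\theta_k \in \mathbb{R}^n$ per class, $k = 1, \ldots, K$, usually stacked as the rows of a matrix $\Theta \in \mathbb{R}^{K \times n}$. That gives $K \cdot n$ scalar parameters. If $\mathbf{x}$ is augmented with a constant $x_0 = 1$ to carry a bias, each $\theta_k$ has $n + 1$ entries and the count becomes $K(n+1)$. (Adding the same vector to every $\theta_k$ leaves every $\hat{p}_k$ unchanged, so only $(K-1)n$ of these are identifiable, although the model is normally written and trained with all $K$ vectors.)

2) Start from the cost and the log of the softmax:

$$J(\Theta) = -\frac{1}{m}\sum_{i=1}^{m}\sum_{j=1}^{K} y_j^{(i)} \log \hat{p}_j^{(i)}, \qquad \log \hat{p}_j^{(i)} = s_j(\mathbf{x}^{(i)}) - \log\sum_{l=1}^{K}\exp\big(s_l(\mathbf{x}^{(i)})\big).$$

Because $s_j(\mathbf{x}) = \theta_j \cdot \mathbf{x}$, we have $\nabla_{\theta_k} s_j(\mathbf{x}^{(i)}) = \delta_{jk}\,\mathbf{x}^{(i)}$ (with $\delta_{jk} = 1$ if $j = k$ and $0$ otherwise), and

$$\nabla_{\theta_k}\log\sum_{l=1}^{K}\exp\big(s_l(\mathbf{x}^{(i)})\big) = \frac{\exp\big(s_k(\mathbf{x}^{(i)})\big)}{\sum_{l=1}^{K}\exp\big(s_l(\mathbf{x}^{(i)})\big)}\,\mathbf{x}^{(i)} = \hat{p}_k^{(i)}\,\mathbf{x}^{(i)}.$$

Hence $\nabla_{\theta_k}\log \hat{p}_j^{(i)} = \big(\delta_{jk} - \hat{p}_k^{(i)}\big)\mathbf{x}^{(i)}$, and

$$\nabla_{\theta_k} J(\Theta) = -\frac{1}{m}\sum_{i=1}^{m}\sum_{j=1}^{K} y_j^{(i)}\big(\delta_{jk} - \hat{p}_k^{(i)}\big)\mathbf{x}^{(i)} = -\frac{1}{m}\sum_{i=1}^{m}\Big(y_k^{(i)} - \hat{p}_k^{(i)}\sum_{j=1}^{K} y_j^{(i)}\Big)\mathbf{x}^{(i)} = \frac{1}{m}\sum_{i=1}^{m}\big(\hat{p}_k^{(i)} - y_k^{(i)}\big)\mathbf{x}^{(i)},$$

where the last step uses $\sum_{j} y_j^{(i)} = 1$. This matches the gradient given in the question.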

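The gradient formula $\nabla_{\theta_k} J(\Theta) = \frac{1}{m}\sum_{i}(\hat{p}_k^{(i)} - y_k^{(i)})\mathbf{x}^{(i)}$ can be sanity-checked numerically. Below is a minimal pure-Python sketch (standard library only, so no NumPy is assumed) that computes the softmax, the cross-entropy cost, and the analytic gradient, then compares that gradient against central finite differences. The function names (`softmax`, `scores`, `cost`, `gradient`, `numerical_gradient`), the toy sizes, and the random data are hypothetical, chosen only for illustration and not taken from Lecture 3-3.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax: p_k = exp(s_k) / sum_j exp(s_j)."""
    shift = max(scores)  # subtracting the max leaves the probabilities unchanged
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scores(theta, x):
    """s_k(x) = theta_k . x for every class k (theta is a K x n list of lists)."""
    return [sum(t * xi for t, xi in zip(theta_k, x)) for theta_k in theta]


def cost(theta, X, Y):
    """Cross-entropy J = -(1/m) sum_i sum_k y_k^(i) log p_k^(i), with one-hot Y."""
    m = len(X)
    total = 0.0
    for x, y in zip(X, Y):
        p = softmax(scores(theta, x))
        total -= sum(yk * math.log(pk) for yk, pk in zip(y, p) if yk > 0)
    return total / m


def gradient(theta, X, Y):
    """Analytic gradient: row k is (1/m) sum_i (p_k^(i) - y_k^(i)) x^(i)."""
    m = len(X)
    K, n = len(theta), len(theta[0])
    grad = [[0.0] * n for _ in range(K)]
    for x, y in zip(X, Y):
        p = softmax(scores(theta, x))
        for k in range(K):
            coef = (p[k] - y[k]) / m
            for j in range(n):
                grad[k][j] += coef * x[j]
    return grad


def numerical_gradient(theta, X, Y, eps=1e-6):
    """Central finite differences, used only to check the analytic formula."""
    K, n = len(theta), len(theta[0])
    grad = [[0.0] * n for _ in range(K)]
    for k in range(K):
        for j in range(n):
            original = theta[k][j]
            theta[k][j] = original + eps
            plus = cost(theta, X, Y)
            theta[k][j] = original - eps
            minus = cost(theta, X, Y)
            theta[k][j] = original  # restore the parameter
            grad[k][j] = (plus - minus) / (2 * eps)
    return grad


# Hypothetical toy problem: K = 3 classes, n = 4 features, m = 5 samples.
random.seed(0)
K, n, m = 3, 4, 5
theta = [[random.gauss(0, 1) for _ in range(n)] for _ in range(K)]
X = [[random.gauss(0, 1) for _ in range(n)] for _ in range(m)]
labels = [random.randrange(K) for _ in range(m)]
Y = [[1.0 if k == c else 0.0 for k in range(K)] for c in labels]

analytic = gradient(theta, X, Y)
numeric = numerical_gradient(theta, X, Y)
max_diff = max(abs(a - b) for ra, rb in zip(analytic, numeric) for a, b in zip(ra, rb))
print(f"parameters to estimate: K * n = {K * n}")
print(f"max |analytic - numeric| = {max_diff:.2e}")
```

The finite-difference check needs $2 \cdot K \cdot n$ cost evaluations, so it is meant for debugging rather than training. A vectorized implementation would compute the whole $K \times n$ gradient at once as $(P - Y)^\top X / m$, where $P$ and $Y$ are the $m \times K$ matrices of predicted probabilities and one-hot labels and $X$ is the $m \times n$ data matrix.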