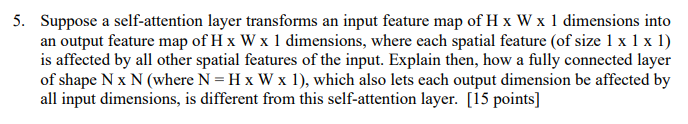

Question: Suppose a self-attention layer transforms an input feature map of dimensions H × W × 1 into an output feature map of dimensions H × W × 1, where each spatial feature of size 1 is affected by all other spatial features of the input. Explain, then, how a fully connected layer of shape […] where […], which also lets each output dimension be affected by all input dimensions, is different from this self-attention layer. ([…] points)
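The contrast the question asks about can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the fully connected layer is taken to act on the flattened map with N = H·W inputs and N outputs (the question's stated shape is not reproduced here), the attention layer is single-head with scalar query/key/value projections `wq`, `wk`, `wv`, and no positional encoding is used. All function and variable names are hypothetical.

```python
import math

def softmax(row):
    # Numerically stable softmax over a list of floats.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, wq, wk, wv):
    # Single-head self-attention over N scalar tokens (channel size 1).
    # Assumption: scalar projections and no positional encoding.
    q = [wq * xi for xi in x]
    k = [wk * xi for xi in x]
    v = [wv * xi for xi in x]
    out = []
    for qi in q:
        # Mixing weights are computed from the input itself.
        mix = softmax([qi * kj for kj in k])
        out.append(sum(m * vj for m, vj in zip(mix, v)))
    return out

def fully_connected(x, weight):
    # Mixing weights are fixed parameters, independent of the input.
    return [sum(w * xj for w, xj in zip(row, x)) for row in weight]

H, W = 2, 3
N = H * W  # assumed: the FC layer maps N flattened inputs to N outputs
x = [0.5, -1.0, 2.0, 0.0, 1.5, -0.5]
weight = [[((i * N + j) % 7 - 3) / 10 for j in range(N)] for i in range(N)]

# Parameter counts: attention does not grow with H*W, the FC layer does.
attn_param_count = 3        # wq, wk, wv
fc_param_count = N * N      # one weight per (output, input) pair

# Permuting the spatial positions permutes the attention output the same way.
perm = [3, 0, 5, 1, 4, 2]
x_perm = [x[p] for p in perm]
attn_out = self_attention(x, 1.0, 1.0, 1.0)
attn_out_perm = self_attention(x_perm, 1.0, 1.0, 1.0)

# The FC layer is linear in its input; attention is not.
x2 = [2 * xi for xi in x]
fc_out = fully_connected(x, weight)
fc_out2 = fully_connected(x2, weight)
attn_out2 = self_attention(x2, 1.0, 1.0, 1.0)

print("attention parameters:", attn_param_count)
print("fully connected parameters:", fc_param_count)
```

In this sketch both layers let every output position depend on every input position, but they differ in how: the fully connected layer stores one learned weight per pair of positions, so its parameter count scales with (H·W)² and is tied to a fixed input size, while the attention layer recomputes its mixing weights from the input on every call, shares its few parameters across positions, and (without positional encoding) treats the positions as an unordered set.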
