Question:

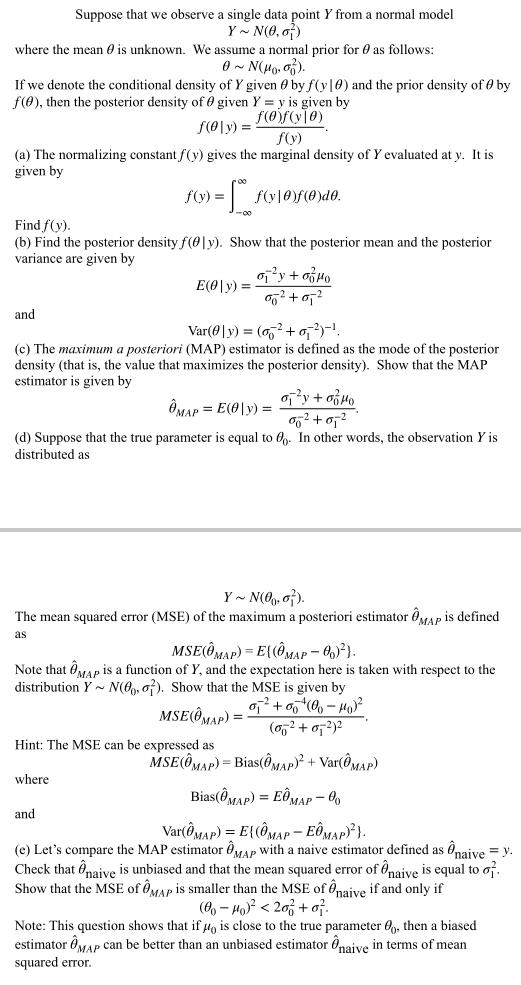

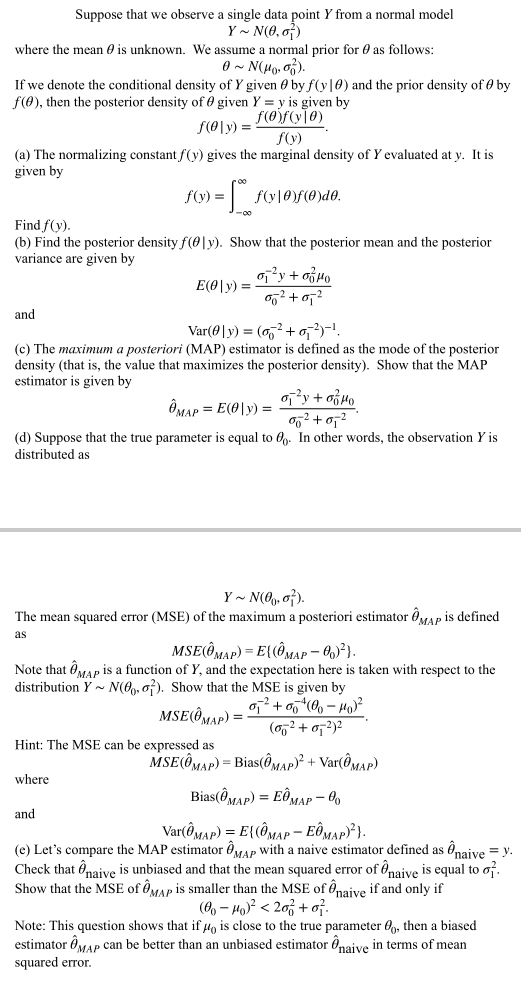

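Suppose that we observe a single data point $Y$ from a normal model $Y \sim N(\theta, \sigma_0^2)$, where the mean $\theta$ is unknown. We assume a normal prior for $\theta$: $\theta \sim N(\mu_0, \sigma_1^2)$. If we denote the conditional density of $Y$ given $\theta$ by $f(y \mid \theta)$ and the prior density of $\theta$ by $f(\theta)$, then the posterior density of $\theta$ given $Y = y$ is

$$f(\theta \mid y) = \frac{f(\theta)\, f(y \mid \theta)}{f(y)}.$$

(a) The normalizing constant $f(y)$ is the marginal density of $Y$ evaluated at $y$:

$$f(y) = \int_{-\infty}^{\infty} f(y \mid \theta)\, f(\theta)\, d\theta.$$

Find $f(y)$.

(b) Find the posterior density $f(\theta \mid y)$. Show that the posterior mean and the posterior variance are

$$E(\theta \mid y) = \frac{\sigma_1^2 y + \sigma_0^2 \mu_0}{\sigma_0^2 + \sigma_1^2}, \qquad \operatorname{Var}(\theta \mid y) = \left(\sigma_0^{-2} + \sigma_1^{-2}\right)^{-1}.$$

(c) The maximum a posteriori (MAP) estimator is defined as the mode of the posterior density, that is, the value of $\theta$ that maximizes $f(\theta \mid y)$. Show that the MAP estimator is

$$\hat\theta_{\text{MAP}} = E(\theta \mid y) = \frac{\sigma_1^2 y + \sigma_0^2 \mu_0}{\sigma_0^2 + \sigma_1^2}.$$

(d) Suppose that the true parameter is equal to $\theta_0$; in other words, the observation $Y$ is distributed as $N(\theta_0, \sigma_0^2)$. The mean squared error (MSE) of the MAP estimator is defined as $\operatorname{MSE}(\hat\theta_{\text{MAP}}) = E\big[(\hat\theta_{\text{MAP}} - \theta_0)^2\big]$. Note that $\hat\theta_{\text{MAP}}$ is a function of $Y$, and the expectation is taken with respect to the distribution $Y \sim N(\theta_0, \sigma_0^2)$. Show that

$$\operatorname{MSE}(\hat\theta_{\text{MAP}}) = \frac{\sigma_0^{-2} + \sigma_1^{-4}(\theta_0 - \mu_0)^2}{\left(\sigma_0^{-2} + \sigma_1^{-2}\right)^2}.$$

Hint: The MSE can be decomposed as $\operatorname{MSE}(\hat\theta_{\text{MAP}}) = \operatorname{Bias}(\hat\theta_{\text{MAP}})^2 + \operatorname{Var}(\hat\theta_{\text{MAP}})$, where $\operatorname{Bias}(\hat\theta_{\text{MAP}}) = E\hat\theta_{\text{MAP}} - \theta_0$ and $\operatorname{Var}(\hat\theta_{\text{MAP}}) = E\big[(\hat\theta_{\text{MAP}} - E\hat\theta_{\text{MAP}})^2\big]$.

(e) Let's compare the MAP estimator $\hat\theta_{\text{MAP}}$ with the naive estimator $\hat\theta_{\text{naive}} = Y$. Check that $\hat\theta_{\text{naive}}$ is unbiased and that its mean squared error equals $\sigma_0^2$. Show that the MSE of $\hat\theta_{\text{MAP}}$ is smaller than the MSE of $\hat\theta_{\text{naive}}$ if and only if $(\theta_0 - \mu_0)$ …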
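One standard route through parts (a) to (e) is sketched below: (a) uses the fact that a sum of independent normal variables is normal, (b) completes the square in $\theta$, and (d) applies the bias-variance decomposition from the hint. The notation is an assumption reconstructed from the garbled symbols in the question ($Y \mid \theta \sim N(\theta, \sigma_0^2)$, prior $\theta \sim N(\mu_0, \sigma_1^2)$, true mean $\theta_0$), and $m$, $v$, $w$ are shorthand introduced only for this sketch. The final inequality in (e) is the condition this algebra yields.

$$
\begin{aligned}
\text{(a)}\quad & Y = \theta + \varepsilon,\ \ \theta \sim N(\mu_0, \sigma_1^2),\ \ \varepsilon \sim N(0, \sigma_0^2) \text{ independent}
\;\Rightarrow\; Y \sim N(\mu_0, \sigma_0^2 + \sigma_1^2), \\
& f(y) = \frac{1}{\sqrt{2\pi(\sigma_0^2 + \sigma_1^2)}} \exp\!\left(-\frac{(y - \mu_0)^2}{2(\sigma_0^2 + \sigma_1^2)}\right). \\[6pt]
\text{(b)}\quad & f(\theta \mid y) \propto \exp\!\left(-\frac{(y - \theta)^2}{2\sigma_0^2} - \frac{(\theta - \mu_0)^2}{2\sigma_1^2}\right)
\propto \exp\!\left(-\tfrac{1}{2}\left(\sigma_0^{-2} + \sigma_1^{-2}\right)\theta^2 + \left(\sigma_0^{-2} y + \sigma_1^{-2}\mu_0\right)\theta\right) \\
& \propto \exp\!\left(-\frac{(\theta - m)^2}{2v}\right), \quad
v = \left(\sigma_0^{-2} + \sigma_1^{-2}\right)^{-1}, \quad
m = v\left(\sigma_0^{-2} y + \sigma_1^{-2}\mu_0\right) = \frac{\sigma_1^2 y + \sigma_0^2 \mu_0}{\sigma_0^2 + \sigma_1^2}, \\
& \text{so } \theta \mid y \sim N(m, v). \\[6pt]
\text{(c)}\quad & \frac{\partial}{\partial\theta}\log f(\theta \mid y) = -\frac{\theta - m}{v} = 0,\ \ \frac{\partial^2}{\partial\theta^2}\log f(\theta \mid y) = -\frac{1}{v} < 0
\;\Rightarrow\; \hat\theta_{\text{MAP}} = m = E(\theta \mid y). \\[6pt]
\text{(d)}\quad & \hat\theta_{\text{MAP}} = wY + (1 - w)\mu_0, \quad w = \frac{\sigma_1^2}{\sigma_0^2 + \sigma_1^2} = \frac{\sigma_0^{-2}}{\sigma_0^{-2} + \sigma_1^{-2}}, \\
& \operatorname{Bias} = (1 - w)(\mu_0 - \theta_0) = \frac{\sigma_1^{-2}(\mu_0 - \theta_0)}{\sigma_0^{-2} + \sigma_1^{-2}}, \qquad
\operatorname{Var} = w^2\sigma_0^2 = \frac{\sigma_0^{-2}}{\left(\sigma_0^{-2} + \sigma_1^{-2}\right)^2}, \\
& \operatorname{MSE}(\hat\theta_{\text{MAP}}) = \operatorname{Bias}^2 + \operatorname{Var} = \frac{\sigma_0^{-2} + \sigma_1^{-4}(\theta_0 - \mu_0)^2}{\left(\sigma_0^{-2} + \sigma_1^{-2}\right)^2}. \\[6pt]
\text{(e)}\quad & E\,\hat\theta_{\text{naive}} = E\,Y = \theta_0, \quad \operatorname{MSE}(\hat\theta_{\text{naive}}) = \operatorname{Var}(Y) = \sigma_0^2, \\
& \operatorname{MSE}(\hat\theta_{\text{MAP}}) < \sigma_0^2
\iff \sigma_0^{-2} + \sigma_1^{-4}(\theta_0 - \mu_0)^2 < \sigma_0^{-2} + 2\sigma_1^{-2} + \sigma_0^2\sigma_1^{-4}
\iff (\theta_0 - \mu_0)^2 < \sigma_0^2 + 2\sigma_1^2.
\end{aligned}
$$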

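A quick numerical sanity check of the MSE formula in part (d) and the comparison in part (e) can be sketched in Python. This assumes the notation $Y \sim N(\theta_0, \sigma_0^2)$ with prior $\theta \sim N(\mu_0, \sigma_1^2)$; the function names (`map_estimate`, `mse_map_closed_form`, `mse_map_monte_carlo`, `map_beats_naive`), the parameter values and the number of draws are hypothetical choices for illustration, not part of the original problem.

```python
import random


def map_estimate(y, mu0, sigma0, sigma1):
    """Posterior mean (= posterior mode) for Y ~ N(theta, sigma0^2), theta ~ N(mu0, sigma1^2)."""
    s0, s1 = sigma0 ** 2, sigma1 ** 2
    return (s1 * y + s0 * mu0) / (s0 + s1)


def mse_map_closed_form(theta0, mu0, sigma0, sigma1):
    """Part (d): [sigma0^-2 + sigma1^-4 (theta0 - mu0)^2] / (sigma0^-2 + sigma1^-2)^2."""
    p0, p1 = sigma0 ** -2, sigma1 ** -2  # precisions of the likelihood and the prior
    return (p0 + p1 ** 2 * (theta0 - mu0) ** 2) / (p0 + p1) ** 2


def mse_map_monte_carlo(theta0, mu0, sigma0, sigma1, n=200_000, seed=0):
    """Average squared error of the MAP estimator over n draws Y ~ N(theta0, sigma0^2)."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    total = 0.0
    for _ in range(n):
        y = rng.gauss(theta0, sigma0)
        total += (map_estimate(y, mu0, sigma0, sigma1) - theta0) ** 2
    return total / n


def map_beats_naive(theta0, mu0, sigma0, sigma1):
    """Part (e): is the closed-form MAP MSE below the naive estimator's MSE, sigma0^2?"""
    return mse_map_closed_form(theta0, mu0, sigma0, sigma1) < sigma0 ** 2


if __name__ == "__main__":
    theta0, mu0, sigma0, sigma1 = 1.0, 0.0, 2.0, 1.5  # hypothetical example values
    exact = mse_map_closed_form(theta0, mu0, sigma0, sigma1)
    approx = mse_map_monte_carlo(theta0, mu0, sigma0, sigma1)
    print(f"closed form: {exact:.4f}, Monte Carlo: {approx:.4f}")
    # The threshold (theta0 - mu0)^2 < sigma0^2 + 2 sigma1^2 should agree with map_beats_naive.
    threshold = (theta0 - mu0) ** 2 < sigma0 ** 2 + 2 * sigma1 ** 2
    print(map_beats_naive(theta0, mu0, sigma0, sigma1), threshold)
```

For these example values the closed form evaluates to 0.928, and the Monte Carlo estimate should land close to it. Only the standard-library `random` module is used, so the check runs without extra dependencies.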