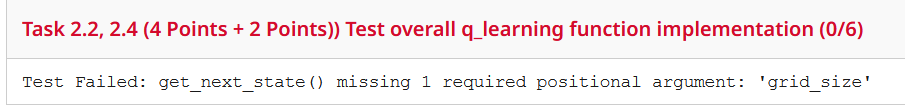

Question: Tasks 2.2, 2.4 (4 Points + 2 Points): Test overall q_learning function implementation (0

Tasks 2.2, 2.4 (4 Points + 2 Points): Test overall q_learning function implementation

Test Failed: get_next_state() missing 1 required positional argument: 'grid_size'
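Python raises this exact message when a function is called with fewer positional arguments than its definition declares. The loop below passes three arguments to `get_next_state`, so one possibility is that the definition actually being called declares a fourth parameter. The `get_next_state` definition is not shown, so this is an assumption; the hypothetical signature here only reproduces the message:

```python
# Hypothetical signature with an extra leading parameter; NOT the assignment's code.
def get_next_state(state_index, current_state_pos, action, grid_size):
    return current_state_pos


def call_with_three_args():
    """Call get_next_state the way the q_learning loop does: three positional args."""
    try:
        get_next_state((0, 0), 1, 4)
    except TypeError as exc:
        return str(exc)
    return "no error"


print(call_with_three_args())
# get_next_state() missing 1 required positional argument: 'grid_size'
```

Comparing the parameter list of the `get_next_state` in use against the call site is a quick way to confirm or rule this out.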

```python
# TASKS 2.2 and 2.4: Q-learning algorithm following an epsilon-greedy policy
#
# Inputs:
#   q_table, r_table: initialized by calling the initialize_q_r_tables function inside the main function
#   start_pos, goal_pos: given by the get_random_start_goal function based on student_id and grid_size
#   num_episodes: taken from the global constant EPISODES; you need to determine the number of episodes needed to train the agent
#   grid_size: to try different grid sizes, change the GRID_SIZE global constant
#   alpha, gamma, epsilon: DO NOT CHANGE
#
# Outputs:
#   q_table: the final q_table after training

# The signature is cut off after "epsil" in the source, and the default
# values of alpha, gamma and epsilon were not preserved.
def q_learning(start_pos, goal_pos, q_table=q_table_g, r_table=r_table_g,
               num_episodes=EPISODES, alpha=..., gamma=..., epsil...

    for episode in range(num_episodes):
        # Initialize the state index corresponding to the starting position
        current_state_index = start_pos[0] * grid_size + start_pos[1]  # Ensure this results in an integer
        current_state_pos = start_pos  # current (row, column) position of the agent
        done = False

        while not done:
            # Task: get an action using the epsilon-greedy policy
            action = get_action(q_table, epsilon, current_state_index)

            # Task: get the next state based on the chosen action
            next_state_pos = get_next_state(current_state_pos, action, grid_size)  # Pass grid_size
            next_state_index = next_state_pos[0] * grid_size + next_state_pos[1]  # Row-major index of the next state

            # Task: update the Q-table using the Q-learning formula
            q_table = update_q_table(q_table, r_table, current_state_index, action,
                                     next_state_index, alpha, gamma)

            # Move to the next state
            current_state_pos = next_state_pos
            current_state_index = next_state_index

            # Task: end the episode if the goal is reached
            # (both must be the same type: (1, 2) == [1, 2] is False)
            if current_state_pos == goal_pos:
                done = True  # Episode ends when the goal is reached

    q_table_g = q_table  # DO NOT CHANGE THIS LINE
```
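The loop relies on `get_action`, whose body is not shown. Below is a minimal epsilon-greedy sketch that keeps the argument order of the call above. It assumes `q_table` is a NumPy array of shape `(grid_size * grid_size, num_actions)` and draws randomness from a NumPy `Generator` passed as a hypothetical `rng` parameter. Both are assumptions, not the assignment's required design:

```python
import numpy as np

# Hypothetical module-level generator; seeded only to make runs repeatable.
rng = np.random.default_rng(0)


def get_action(q_table, epsilon, current_state_index, rng=rng):
    """Epsilon-greedy action selection over one row of the Q-table."""
    num_actions = q_table.shape[1]
    if rng.random() < epsilon:
        # Explore: pick any action uniformly at random.
        return int(rng.integers(num_actions))
    # Exploit: pick the action with the highest Q-value for this state.
    return int(np.argmax(q_table[current_state_index]))


q = np.zeros((16, 4))   # 4x4 grid, 4 actions
q[5, 2] = 1.0
print(get_action(q, 0.0, 5))
# 2
```

With `epsilon=0.0` the sketch always returns the greedy action, which is a quick way to check exploitation separately from exploration.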

Task … (… Points): Test get_next_state function implementation

Test Failed: unsupported operand type(s) for divmod(): 'tuple' and 'int'

Task … (… Points): Test get_action implementation

Tasks 2.2, 2.4 (4 Points + 2 Points): Test overall q_learning function implementation

Test Failed: get_next_state() missing 1 required positional argument: 'grid_size'

Task … (… Points): Test update_q_table function

Test Failed: unsupported operand type(s) for divmod(): 'tuple' and 'int'

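```python
import numpy as np

# TASK
# Complete the update_q_table function.
# This function will be called from the q_learning function.
#
# Inputs:
#   q_table, r_table, current_state_index, action, next_state_index, alpha, gamma
#
# Outputs:
#   q_table: with updated Q-values

def update_q_table(q_table, r_table, current_state_index, action, next_state_index, alpha, gamma):
    # Greedy action in the next state (the max over a' in the Q-learning target)
    best_next_action = np.argmax(q_table[next_state_index])
    # TD target: immediate reward plus the discounted best next Q-value
    td_target = r_table[current_state_index][action] + gamma * q_table[next_state_index][best_next_action]
    # TD error: how far the current estimate is from the target
    td_error = td_target - q_table[current_state_index][action]
    # Move the estimate toward the target by a step of size alpha
    q_table[current_state_index][action] += alpha * td_error
    return q_table
```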
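The function applies the standard Q-learning update Q(s, a) ← Q(s, a) + α·(r + γ·max_a' Q(s', a') − Q(s, a)), with `np.argmax` selecting the maximizing a'. Below is a small numeric check, assuming both tables are 2-D NumPy arrays. The 2-state, 2-action values are made up for illustration:

```python
import numpy as np


def update_q_table(q_table, r_table, current_state_index, action, next_state_index, alpha, gamma):
    # Same logic as above, written with NumPy 2-D indexing.
    best_next_action = np.argmax(q_table[next_state_index])
    td_target = r_table[current_state_index, action] + gamma * q_table[next_state_index, best_next_action]
    td_error = td_target - q_table[current_state_index, action]
    q_table[current_state_index, action] += alpha * td_error
    return q_table


q = np.array([[0.0, 0.0], [0.5, 2.0]])
r = np.array([[1.0, 0.0], [0.0, 0.0]])
# target = 1.0 + 0.5 * 2.0 = 2.0, error = 2.0, new Q = 0.0 + 0.5 * 2.0
q = update_q_table(q, r, current_state_index=0, action=0, next_state_index=1, alpha=0.5, gamma=0.5)
print(q[0, 0])
# 1.0
```

Only the entry for the (state, action) pair that was taken changes. Every other Q-value is left untouched.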
