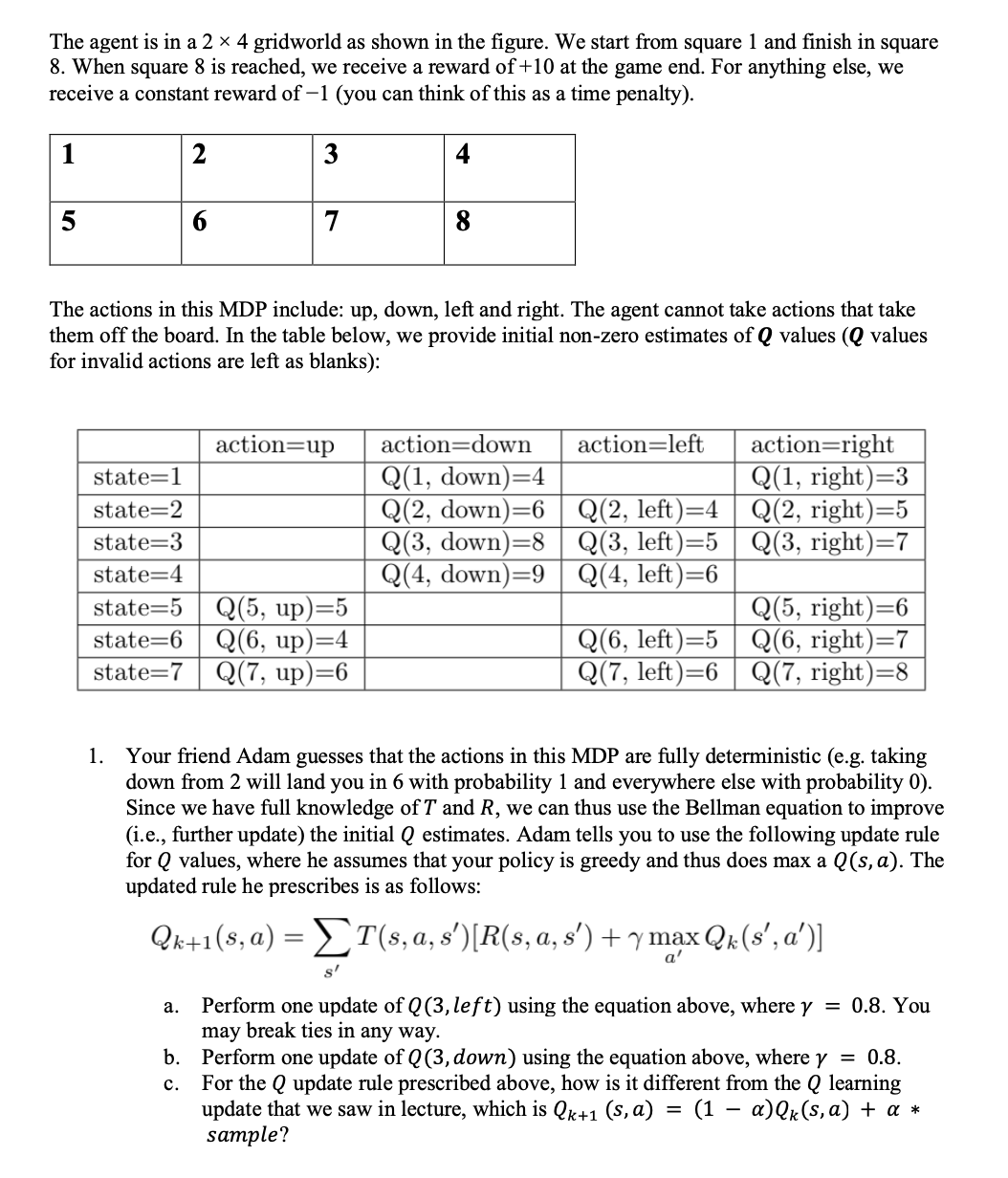

Question: The agent is in a 2 4 gridworld as shown in the figure. We start from square 1 and finish in square When square 8

The agent is in a gridworld as shown in the figure. We start from square and finish in square

When square is reached, we receive a reward of at the game end. For anything else, we

receive a constant reward of you can think of this as a time penalty

The actions in this MDP include: up down, left and right. The agent cannot take actions that take

them off the board. In the table below, we provide initial nonzero estimates of values values

for invalid actions are left as blanks:

Your friend Adam guesses that the actions in this MDP are fully deterministic eg taking

down from will land you in with probability and everywhere else with probability

Since we have full knowledge of and we can thus use the Bellman equation to improve

ie further update the initial estimates. Adam tells you to use the following update rule

for values, where he assumes that your policy is greedy and thus does max a The

updated rule he prescribes is as follows:

a Perform one update of left

Step by Step Solution

There are 3 Steps involved in it

1 Expert Approved Answer

Step: 1 Unlock

Question Has Been Solved by an Expert!

Get step-by-step solutions from verified subject matter experts

Step: 2 Unlock

Step: 3 Unlock