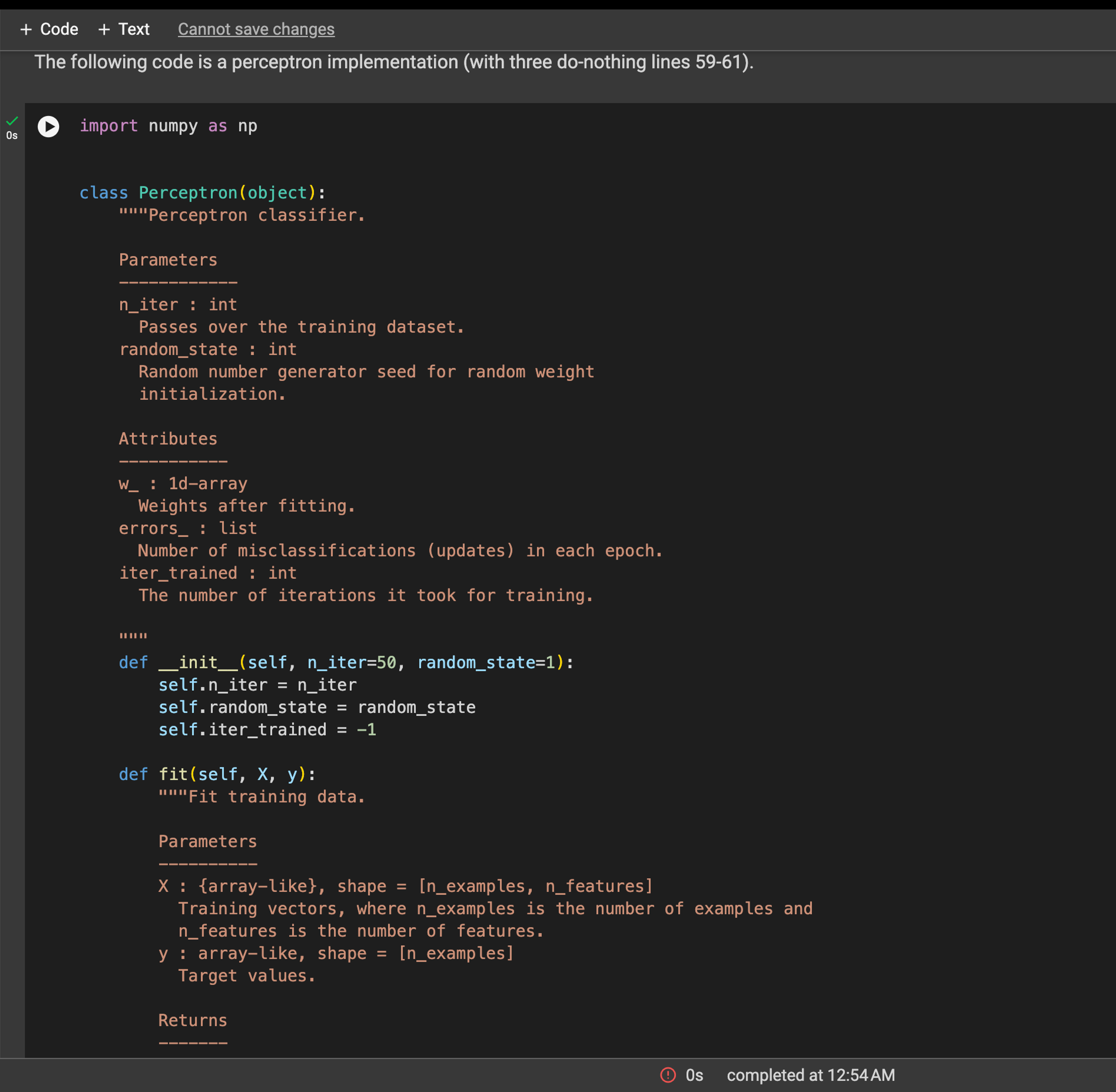

Question: The following code is a perceptron implementation (with three do-nothing lines, 59-61).

    import numpy as np

    class Perceptron(object):
        """Perceptron classifier.

        Parameters
        ------------
        n_iter : int
          Passes over the training dataset.
        random_state : int
          Random number generator seed for random weight
          initialization.

        Attributes
        -----------
        w_ : 1d-array
          Weights after fitting.
        errors_ : list
          Number of misclassifications (updates) in each epoch.
        iter_trained : int
          The number of iterations it took for training.

        """
        def __init__(self, n_iter, random_state):
            self.n_iter = n_iter
            self.random_state = random_state
            self.iter_trained = 0

        def fit(self, X, y):
            """Fit training data.

            Parameters
            ----------
            X : {array-like}, shape = [n_examples, n_features]
              Training vectors, where n_examples is the number of examples and
              n_features is the number of features.
            y : array-like, shape = [n_examples]
              Target values.

            Returns
            -------
            self : object

            """
            rgen = np.random.RandomState(self.random_state)
            self.w_ = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
            self.errors_ = []

            for _ in range(self.n_iter):
                errors = 0
                for xi, target in zip(X, y):
                    update = self.predict(xi) - target
                    self.w_[1:] += update * xi
                    self.w_[0] += update
                    errors += int(update != 0.0)
                self.errors_.append(errors)

            ####### New code for doing nothing. MEH
            this_code_does_nothing = True
            return self

        def net_input(self, X):
            """Calculate net input"""
            return np.dot(X, self.w_[1:]) + self.w_[0]

        def predict(self, X):
            """Return class label after unit step"""
            return np.where(self.net_input(X) >= 0.0, 1, -1)

There are significant errors and omissions in the above perceptron implementation. Work on the above cell and modify the code so that:

(i) The lines containing errors are commented out, and new lines with the corrected code are added.

(ii) The omissions are corrected.

(iii) The fit function stops when no more iterations are necessary and stores the number of iterations required for training.

(iv) The perceptron maintains a history of its weights, i.e., the set of weights after each point is processed.
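A minimal sketch of one way to meet requirements (i) to (iv) follows. It assumes the usual perceptron rule, update = η · (target − prediction), applied to the bias w_[0] and the weights w_[1:]. The defaults (eta=0.01, n_iter=50, random_state=1), the attribute name w_history_, and the choice to count the final update-free epoch in iter_trained are illustrative assumptions, not part of the original cell. For brevity it shows only the corrected lines, marked with ####### comments, rather than keeping the commented-out originals beside them.

```python
import numpy as np


class Perceptron(object):
    """Perceptron classifier (sketch): early stopping plus a weight history."""

    # Assumption: these defaults are illustrative, not from the original cell.
    def __init__(self, eta=0.01, n_iter=50, random_state=1):
        ####### Omission fixed: store the learning rate eta
        self.eta = eta
        #######
        self.n_iter = n_iter
        self.random_state = random_state
        self.iter_trained = 0

    def fit(self, X, y):
        """Fit training data; stop after the first epoch with no updates."""
        rgen = np.random.RandomState(self.random_state)
        self.w_ = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
        self.errors_ = []
        ####### New: weight history (hypothetical name w_history_),
        ####### initial weights plus one copy after each processed point
        self.w_history_ = [self.w_.copy()]
        self.iter_trained = 0
        #######

        for _ in range(self.n_iter):
            errors = 0
            for xi, target in zip(X, y):
                ####### Fixed: update = eta * (target - prediction)
                update = self.eta * (target - self.predict(xi))
                #######
                self.w_[1:] += update * xi
                self.w_[0] += update
                errors += int(update != 0.0)
                ####### New: record the weights after this point
                self.w_history_.append(self.w_.copy())
                #######
            self.errors_.append(errors)
            ####### New: count epochs; stop once an epoch needs no updates
            self.iter_trained += 1
            if errors == 0:
                break
            #######
        return self

    def net_input(self, X):
        """Calculate net input"""
        return np.dot(X, self.w_[1:]) + self.w_[0]

    def predict(self, X):
        """Return class label after unit step"""
        return np.where(self.net_input(X) >= 0.0, 1, -1)
```

For example, after Perceptron(eta=0.1, n_iter=10, random_state=1).fit(X, y), iter_trained holds the number of epochs used (at most n_iter), and len(w_history_) equals 1 + iter_trained * len(X).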

At each place where you have modified the code, please add clear comments surrounding it, similar to the "do-nothing" code. Make sure you evaluate the cell again, so that the following cells use the modified perceptron.

Question: Experimenting with hyperparameters

    import matplotlib.pyplot as plt

    ppn = Perceptron(eta=..., n_iter=..., random_state=...)
    ppn.fit(X, y)

    plt.plot(range(1, len(ppn.errors_) + 1), ppn.errors_, marker='o')
    plt.xticks(range(...))  # Set integer x-axis labels
    plt.xlabel('Epochs')
    plt.ylabel('Number of updates')
    plt.show()


Running the above code, you can verify whether your modification in the previous question works correctly. The point of this question is to experiment with the hyperparameter η (eta), the learning rate. Here are some specific questions:

(i) Find values of η for which the process requires … and … iterations to converge.

(ii) Is it always the case that raising η leads to a reduced or equal number of iterations? Explain with examples.

(iii) Find two different settings for the random state that give different convergence patterns for the same value of η.

(iv) Based on your experience in parts (i) to (iii), would binary search be an appropriate strategy for determining values of η for which the perceptron converges within a desired number of iterations?

Please give your answers in the cell below.
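Questions (ii) to (iv) can be explored empirically with a sweep like the one below. It is a sketch under stated assumptions. epochs_to_converge is a hypothetical helper that re-implements the standard perceptron rule so the block runs on its own. The toy data are synthetic stand-ins for the notebook's X and y. "Converged" means the first epoch without any update. Binary search over η is only reliable if the epoch count is monotone in η, so a seed whose count rises as η grows is evidence against it.

```python
import numpy as np


def epochs_to_converge(X, y, eta, random_state=1, max_epochs=100):
    """Hypothetical helper: epochs until an epoch makes no updates.

    Standard perceptron rule with small random initial weights and
    update = eta * (target - prediction). Returns None if the perceptron
    has not converged after max_epochs epochs.
    """
    rgen = np.random.RandomState(random_state)
    w = rgen.normal(loc=0.0, scale=0.01, size=1 + X.shape[1])
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for xi, target in zip(X, y):
            prediction = 1 if np.dot(xi, w[1:]) + w[0] >= 0.0 else -1
            update = eta * (target - prediction)
            w[1:] += update * xi
            w[0] += update
            errors += int(update != 0.0)
        if errors == 0:
            return epoch
    return None


# Synthetic stand-in for the notebook's X, y: two well-separated clusters.
rng = np.random.RandomState(0)
X = np.vstack([rng.normal(2.0, 0.5, size=(20, 2)),
               rng.normal(-2.0, 0.5, size=(20, 2))])
y = np.array([1] * 20 + [-1] * 20)

# Epoch counts per eta for two seeds. If a seed's count ever rises as eta
# increases, "larger eta never needs more epochs" fails, and so does the
# monotonicity that a binary search over eta would rely on.
for eta in [0.0001, 0.001, 0.01, 0.1, 1.0]:
    counts = [epochs_to_converge(X, y, eta, random_state=s) for s in (1, 2)]
    print(f"eta={eta}: epochs (seed 1, seed 2) = {counts}")
```

The random initial weights matter here. If the weights started at zero, η would only rescale every weight vector, leaving all predictions, and therefore the epoch count, unchanged. With small random initial weights, the outcome depends on η relative to that initial scale, which is why η and the random state interact.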
