Question: The programmer involved in question 1 realizes that the program needs debugging and modifies it so that 1 % of the statements now print a

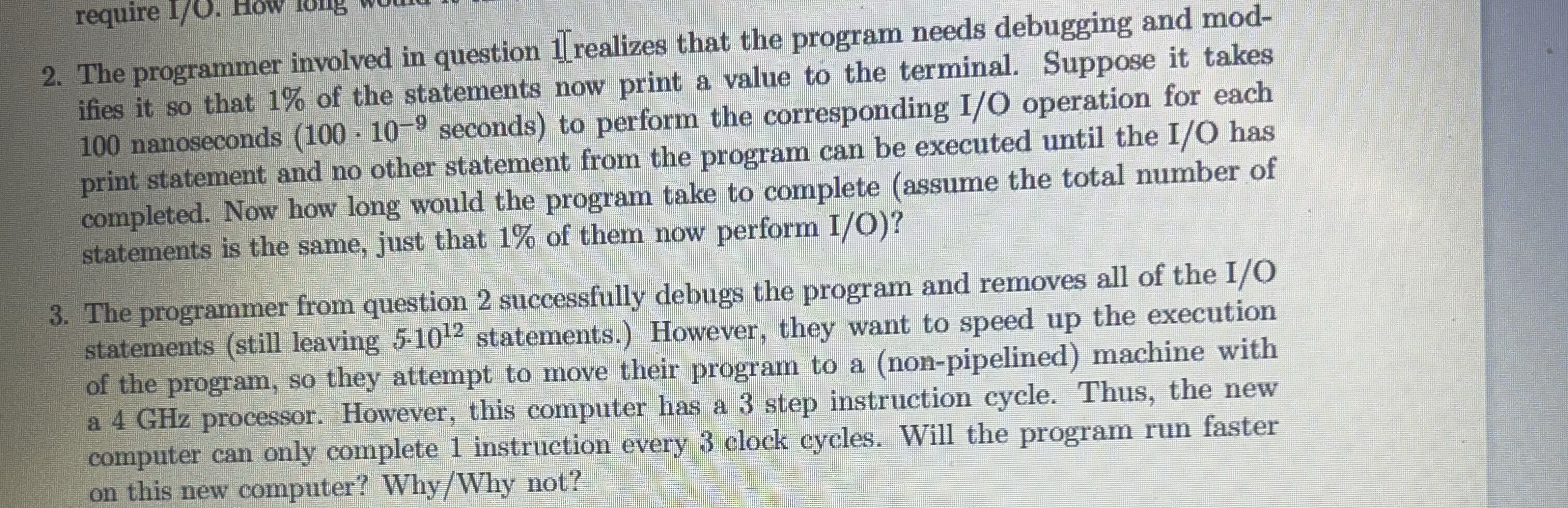

The programmer involved in question realizes that the program needs debugging and modifies it so that of the statements now print a value to the terminal. Suppose it takes nanoseconds seconds to perform the corresponding IO operation for each print statement and no other statement from the program can be executed until the IO has completed. Now how long would the program take to complete assume the total number of statements is the same, just that of them now perform IO
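Because the IO is blocking, its cost adds serially to the normal execution time: total time = (statements × time per statement) + (print statements × IO time per print). The sketch below assumes that each print statement still costs its normal execution time plus the IO wait. The statement count and per-statement time from question 1 are not reproduced here, so the function name `runtime_with_io` and all example values are hypothetical placeholders, not the question's data.

```python
def runtime_with_io(total_statements: int, seconds_per_statement: float,
                    io_fraction: float, io_seconds_per_print: float) -> float:
    """Blocking-IO model (assumption): every statement costs its normal
    execution time, and each print statement additionally stalls the
    program for the full IO duration before anything else can run."""
    io_statements = round(total_statements * io_fraction)   # e.g. 1% of statements
    compute_time = total_statements * seconds_per_statement # original run time
    io_time = io_statements * io_seconds_per_print          # serialized IO stalls
    return compute_time + io_time


# Hypothetical placeholder values: 10**9 statements at 1 ns each,
# 1% of them printing, 1 ms of IO per print.
total = runtime_with_io(10**9, 1e-9, 0.01, 1e-3)
print(f"{total:.1f} s")
```

With these placeholder numbers the IO term dominates: about 10,000 s of IO stalls versus about 1 s of computation. Even a small fraction of blocking IO statements can swamp the compute time when each IO is many orders of magnitude slower than a statement.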

The programmer from the previous question successfully debugs the program and removes all of the print statements, still leaving … statements. However, they want to speed up the program's execution, so they attempt to move it to a nonpipelined machine with a … GHz processor. This computer, however, has a …-step instruction cycle, so it can complete only one instruction every … clock cycles. Will the program run faster on this new computer? Why or why not?
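On a nonpipelined machine the effective instruction rate is the clock rate divided by the cycles per instruction, so a faster clock can be cancelled out by a multi-cycle instruction cycle. The sketch below assumes, hypothetically, that each statement corresponds to exactly one instruction; the function names and example numbers are illustrative placeholders, since the question's actual clock rate, step count, and original run time are not reproduced here.

```python
def nonpipelined_runtime(instructions: int, clock_hz: float,
                         cycles_per_instruction: int) -> float:
    """Run time on a nonpipelined CPU that finishes one instruction every
    `cycles_per_instruction` clock cycles (no overlap between instructions)."""
    return instructions * cycles_per_instruction / clock_hz


def runs_faster(old_seconds: float, new_seconds: float) -> bool:
    """The move only helps if the new run time is strictly smaller."""
    return new_seconds < old_seconds


# Hypothetical placeholders: 10**9 instructions, 2 GHz clock, 5-step cycle,
# compared against an assumed original run time of 1.0 s.
new_time = nonpipelined_runtime(10**9, 2e9, 5)
print(new_time, runs_faster(1.0, new_time))
```

Here the new machine needs 2.5 s, so despite its faster clock it would be slower than an original run time of 1.0 s. The answer to the question therefore hinges on comparing clock rate / cycles per instruction on the new machine against the rate at which the original machine executed statements.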
