Question: The system parameters are d , D , S m a x , , 1 , 2 , 3 , and k . Some of

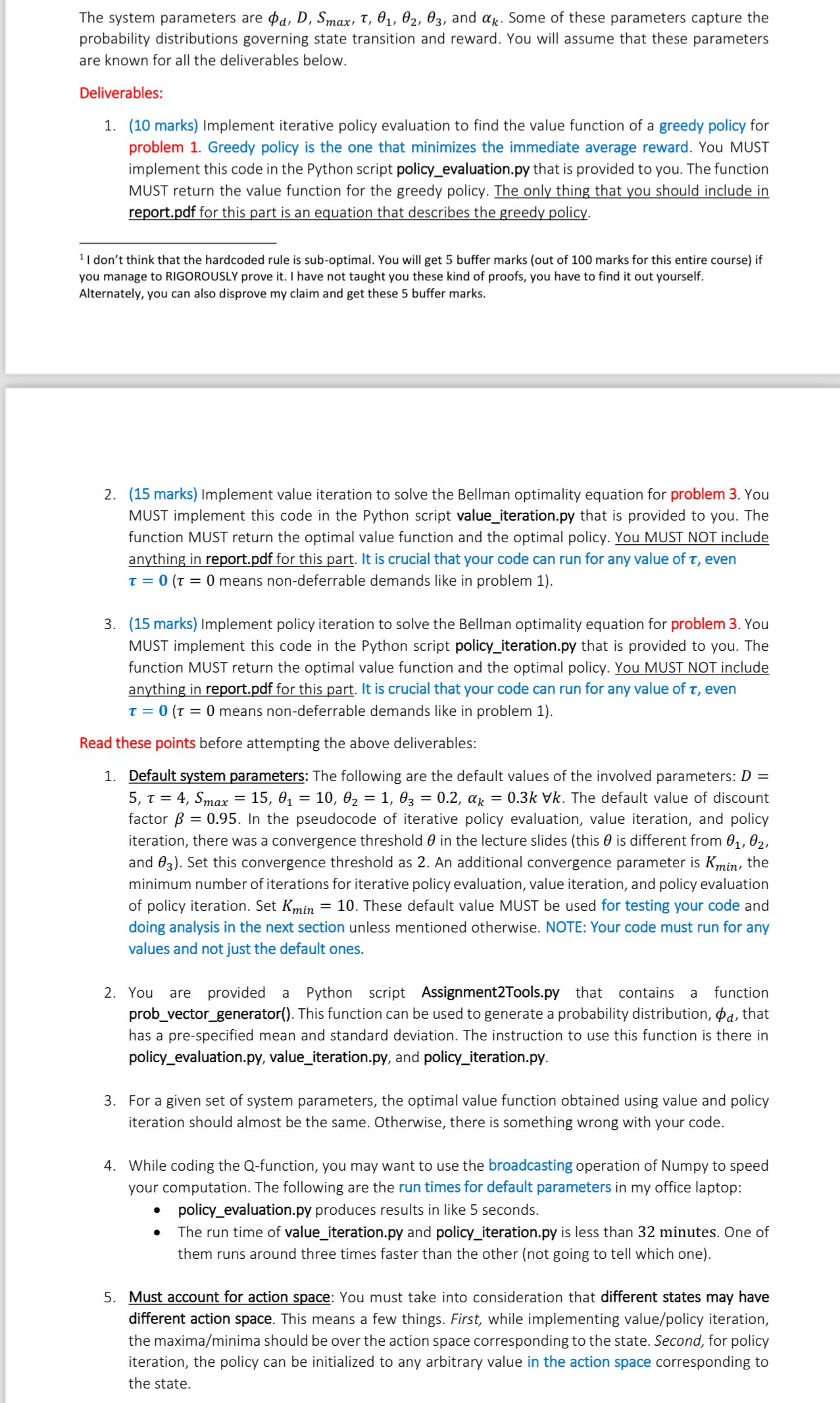

The system parameters are and Some of these parameters capture the probability distributions governing state transition and reward. You will assume that these parameters are known for all the deliverables below.

Deliverables:

Implement iterative policy evaluation to find the value function of the greedy policy for this problem. The greedy policy is the one that minimizes the immediate average reward. You MUST implement this code in the Python script policyevaluation.py that is provided to you. The function MUST return the value function for the greedy policy. The only thing that you should include in report.pdf for this part is an equation that describes the greedy policy.
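As an illustration of this deliverable, here is a minimal sketch of iterative policy evaluation on a toy two-state MDP. The array layout (P[s, a, s2] for transitions, R[s, a] for the expected immediate reward), the names gamma, theta, and min_iters, and all numbers are assumptions made for the example, not the assignment's parameters. The greedy rule used here is pi(s) = argmin over a of R[s, a], and the sketch assumes every action is valid in every state.

```python
import numpy as np

def greedy_policy(R):
    """In each state, pick the action with the smallest expected immediate reward."""
    return np.argmin(R, axis=1)

def policy_evaluation(P, R, policy, gamma, theta, min_iters):
    """Iteratively evaluate a deterministic policy.

    P[s, a, s2] : transition probabilities (hypothetical layout)
    R[s, a]     : expected immediate reward
    policy[s]   : action index chosen in state s
    """
    n_states = R.shape[0]
    idx = np.arange(n_states)
    P_pi = P[idx, policy]          # (S, S) transitions under the policy
    R_pi = R[idx, policy]          # (S,) immediate rewards under the policy
    V = np.zeros(n_states)
    it = 0
    while True:
        V_new = R_pi + gamma * P_pi @ V
        delta = np.max(np.abs(V_new - V))
        V = V_new
        it += 1
        # Stop only once both the threshold and the minimum sweep count are met.
        if delta < theta and it >= min_iters:
            return V

# Toy data, chosen only for illustration.
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.5, 0.5], [0.0, 1.0]]])
R = np.array([[1.0, 2.0],
              [3.0, 0.5]])
pi = greedy_policy(R)
V = policy_evaluation(P, R, pi, gamma=0.9, theta=1e-8, min_iters=10)
```

For a fixed policy the value function also solves the linear system (I - gamma * P_pi) V = R_pi, which gives a handy cross-check on small problems.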

I don't think that the hardcoded rule is suboptimal. You will get buffer marks for this entire course if you manage to RIGOROUSLY prove it. I have not taught you these kinds of proofs; you have to figure it out yourself. Alternatively, you can disprove my claim and get these buffer marks.

Implement value iteration to solve the Bellman optimality equation for this problem. You MUST implement this code in the Python script valueiteration.py that is provided to you. The function MUST return the optimal value function and the optimal policy. You MUST NOT include anything in report.pdf for this part. It is crucial that your code can run for any parameter value, including the value that corresponds to non-deferrable demands.
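A minimal sketch of value iteration for a cost-minimizing MDP may help make the update concrete. It assumes the same hypothetical layout as a generic tabular MDP (P[s, a, s2], R[s, a]), treats every action as valid in every state, and uses illustrative values for gamma, theta, and min_iters rather than the assignment defaults.

```python
import numpy as np

def value_iteration(P, R, gamma, theta, min_iters):
    """Return (V, policy) for a tabular MDP where smaller reward is better."""
    V = np.zeros(R.shape[0])
    it = 0
    while True:
        # P @ V contracts the last axis: (S, A, S) @ (S,) -> (S, A)
        Q = R + gamma * P @ V
        V_new = Q.min(axis=1)
        delta = np.max(np.abs(V_new - V))
        V = V_new
        it += 1
        if delta < theta and it >= min_iters:
            break
    # Extract a policy that is greedy with respect to the final values.
    policy = (R + gamma * P @ V).argmin(axis=1)
    return V, policy

# Toy data, chosen only for illustration.
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.5, 0.5], [0.0, 1.0]]])
R = np.array([[1.0, 2.0],
              [3.0, 0.5]])
V_opt, pi_opt = value_iteration(P, R, gamma=0.9, theta=1e-8, min_iters=10)
```

If the problem is posed as maximization instead, min and argmin become max and argmax; nothing else in the loop changes.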

Implement policy iteration to solve the Bellman optimality equation for this problem. You MUST implement this code in the Python script policyiteration.py that is provided to you. The function MUST return the optimal value function and the optimal policy. You MUST NOT include anything in report.pdf for this part. It is crucial that your code can run for any parameter value, including the value that corresponds to non-deferrable demands.
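Policy iteration alternates an evaluation step with a greedy improvement step until the policy stops changing. The sketch below is one possible arrangement under assumed names and layout (P[s, a, s2], R[s, a], gamma, theta, min_iters are hypothetical), with cost minimization and all actions valid in all states.

```python
import numpy as np

def evaluate(P, R, policy, gamma, theta, min_iters):
    """Iterative policy evaluation for a fixed deterministic policy."""
    idx = np.arange(R.shape[0])
    P_pi, R_pi = P[idx, policy], R[idx, policy]
    V = np.zeros(R.shape[0])
    it = 0
    while True:
        V_new = R_pi + gamma * P_pi @ V
        delta = np.max(np.abs(V_new - V))
        V, it = V_new, it + 1
        if delta < theta and it >= min_iters:
            return V

def policy_iteration(P, R, gamma, theta, min_iters):
    """Return (V, policy); stops when improvement leaves the policy unchanged."""
    policy = np.zeros(R.shape[0], dtype=int)   # arbitrary initial policy
    while True:
        V = evaluate(P, R, policy, gamma, theta, min_iters)
        new_policy = (R + gamma * P @ V).argmin(axis=1)
        if np.array_equal(new_policy, policy):
            return V, policy
        policy = new_policy

# Toy data, chosen only for illustration.
P = np.array([[[0.9, 0.1], [0.2, 0.8]],
              [[0.5, 0.5], [0.0, 1.0]]])
R = np.array([[1.0, 2.0],
              [3.0, 0.5]])
V_pi, pi_star = policy_iteration(P, R, gamma=0.9, theta=1e-8, min_iters=10)
```

Because evaluation is only approximate, near-ties between actions could in principle make the policy flip back and forth; a cap on the number of improvement rounds is a reasonable safeguard in a real implementation.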

Read these points before attempting the above deliverables:

Default system parameters: Each of the involved parameters, including the discount factor, has a default value. The pseudocode of iterative policy evaluation, value iteration, and policy iteration in the lecture slides uses a convergence threshold, which is distinct from the other parameters; set it to its default value as well. An additional convergence parameter is the minimum number of iterations for iterative policy evaluation, value iteration, and the policy evaluation step of policy iteration. These default values MUST be used for testing your code and for the analysis in the next section unless mentioned otherwise. NOTE: Your code must run for any parameter values, not just the default ones.

You are provided a Python script AssignmentTools.py that contains a function probvectorgenerator. This function can be used to generate a probability distribution that has a prespecified mean and standard deviation. The instructions for using this function are in policyevaluation.py, valueiteration.py, and policyiteration.py.
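When working with a generated probability vector, it can be useful to confirm that it sums to one and has the intended mean and standard deviation. The helper below is a hypothetical check written for this purpose; it does not call probvectorgenerator, whose signature is not shown here, and it assumes the support is 0, 1, 2, ... unless one is passed in.

```python
import numpy as np

def pmf_mean_std(p, support=None):
    """Return (mean, std) of a discrete distribution given as a probability vector."""
    p = np.asarray(p, dtype=float)
    if support is None:
        support = np.arange(len(p))       # assumed support: 0, 1, ..., len(p) - 1
    support = np.asarray(support, dtype=float)
    mean = np.sum(support * p)
    std = np.sqrt(np.sum((support - mean) ** 2 * p))
    return mean, std

# Illustrative vector, not produced by the assignment's generator.
p_example = np.array([0.25, 0.5, 0.25])
total = p_example.sum()
mean, std = pmf_mean_std(p_example)
```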

For a given set of system parameters, the optimal value functions obtained using value iteration and policy iteration should be almost the same. Otherwise, there is something wrong with your code.

While coding the Q function, you may want to use NumPy's broadcasting to speed up your computation. The following are the run times for the default parameters on my office laptop:

policyevaluation.py produces results within seconds.

The run time of valueiteration.py and policyiteration.py is within minutes. One of them runs around three times faster than the other (not going to tell which one).
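The broadcasting suggestion above can be sketched as follows: the Q function is computed once with explicit loops and once with a single broadcast expression, and the two agree. The array layout (P[s, a, s2], R[s, a]) and the random data are assumptions made for the example.

```python
import numpy as np

def q_loop(P, R, V, gamma):
    """Reference implementation with explicit Python loops."""
    n_states, n_actions = R.shape
    Q = np.zeros((n_states, n_actions))
    for s in range(n_states):
        for a in range(n_actions):
            Q[s, a] = R[s, a] + gamma * np.dot(P[s, a], V)
    return Q

def q_broadcast(P, R, V, gamma):
    """Same computation in one expression.

    V[None, None, :] has shape (1, 1, S) and is broadcast against P with
    shape (S, A, S); summing over the last axis gives the expected next value.
    """
    return R + gamma * (P * V[None, None, :]).sum(axis=2)

# Random illustrative data with rows of P normalized to sum to one.
rng = np.random.default_rng(0)
P = rng.random((4, 3, 4))
P /= P.sum(axis=2, keepdims=True)
R = rng.random((4, 3))
V = rng.random(4)

Q_slow = q_loop(P, R, V, gamma=0.9)
Q_fast = q_broadcast(P, R, V, gamma=0.9)
```

The vectorized version avoids the Python-level double loop, which is where most of the run time goes as the state and action spaces grow.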

Must account for action space: You must take into consideration that different states may have different action spaces. This means a few things. First, while implementing value/policy iteration, the maxima/minima should be taken over the action space corresponding to the state. Second, for policy iteration, the policy can be initialized to any arbitrary value in the action space corresponding to the state.
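One way to respect state-dependent action spaces in array code is a boolean mask. The sketch below assumes a hypothetical mask valid[s, a] that is True when action a is allowed in state s (with at least one valid action per state); it takes the minimum only over valid actions and draws an arbitrary valid initial policy.

```python
import numpy as np

def masked_min(Q, valid):
    """Minimize Q[s, a] over the valid actions of each state."""
    Q_masked = np.where(valid, Q, np.inf)   # invalid actions can never win the min
    return Q_masked.min(axis=1), Q_masked.argmin(axis=1)

def random_valid_policy(valid, rng):
    """Pick one valid action index per state, uniformly at random."""
    return np.array([rng.choice(np.flatnonzero(row)) for row in valid])

# Illustrative Q values and mask.
Q = np.array([[3.0, 1.0, 2.0],
              [4.0, 0.0, 5.0]])
valid = np.array([[True, False, True],
                  [True, True, False]])

best_value, best_action = masked_min(Q, valid)
init_policy = random_valid_policy(valid, np.random.default_rng(0))
```

For a maximization problem the same idea applies with -np.inf in place of np.inf and max/argmax in place of min/argmin.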
