Question: Thx!!! Consider a classification problem: there is a feature vector $x \in \mathbb{R}^{N \times 1}$ with $N$ features, and the class label $y$ can be 1 or -1.

Thx!!!

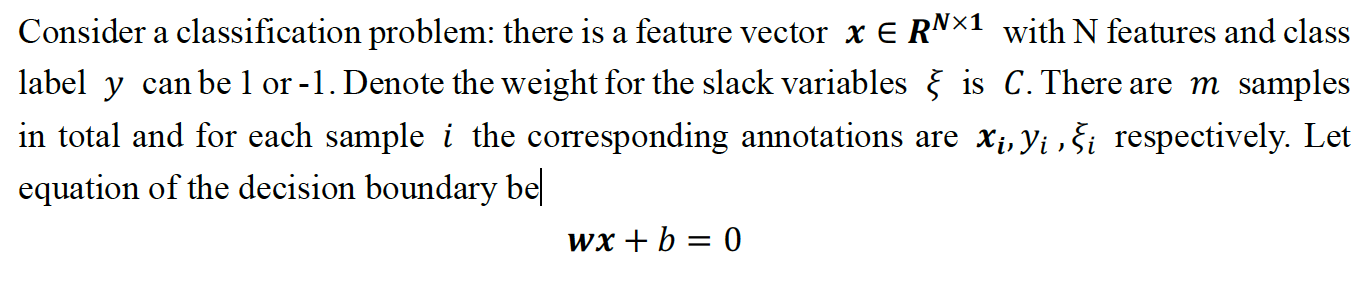

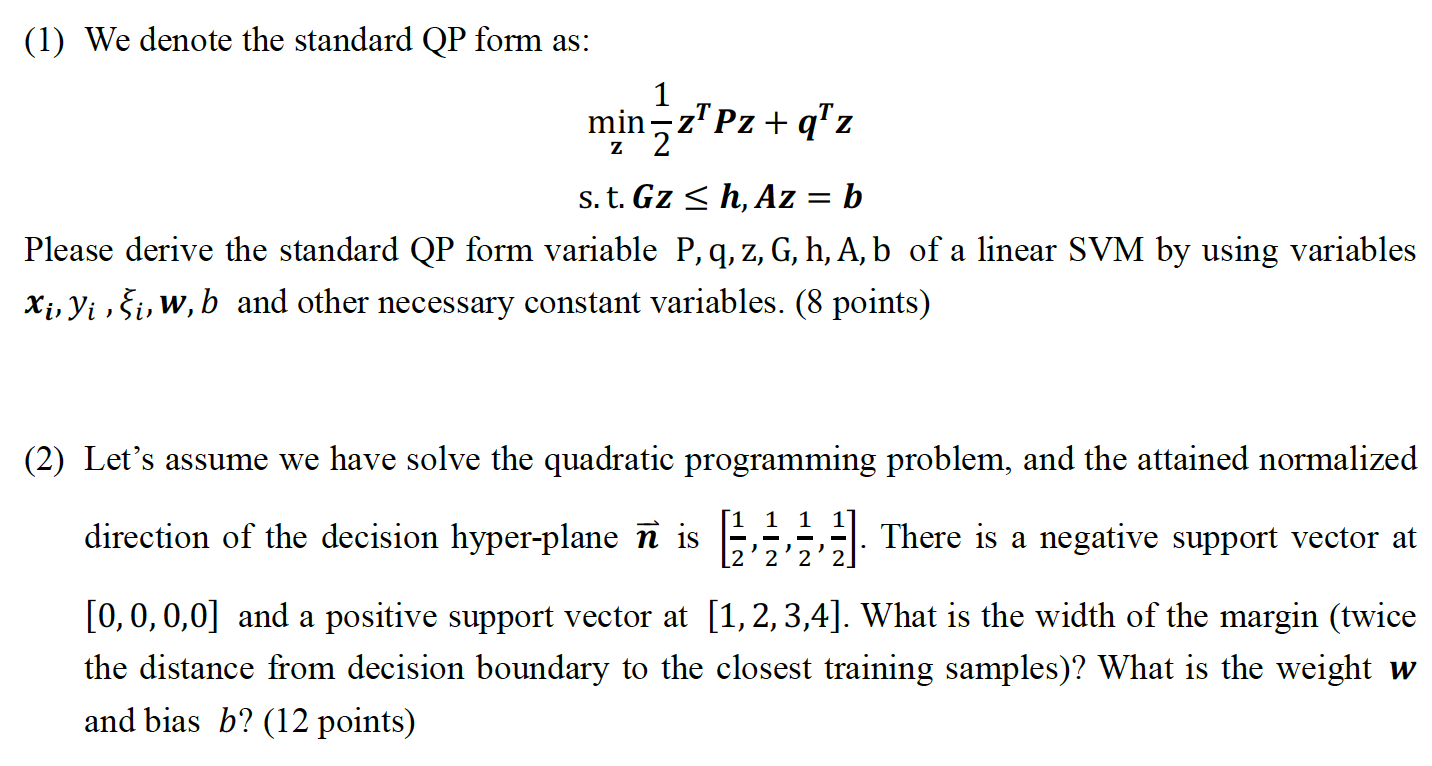

Consider a classification problem: there is a feature vector $x \in \mathbb{R}^{N \times 1}$ with $N$ features, and the class label $y$ can be 1 or -1. Denote the weight for the slack variables $\xi$ as $C$. There are $m$ samples in total, and for each sample $i$ the corresponding annotations are $x_i$, $y_i$, $\xi_i$ respectively. Let the equation of the decision boundary be

$$w^T x + b = 0$$

(1) We denote the standard QP form as:

$$\min_{z} \; \frac{1}{2} z^T P z + q^T z \quad \text{s.t.} \quad Gz \le h, \quad Az = b$$

Please derive the standard QP form variables $P$, $q$, $z$, $G$, $h$, $A$, $b$ of a linear SVM by using the variables $x_i$, $y_i$, $\xi_i$, $w$, $b$ and other necessary constant variables. (8 points)

(2) Let's assume we have solved the quadratic programming problem, and the attained normalized direction of the decision hyperplane is [garbled in source: "122 )"]. There is a negative support vector at [0,0,0,0] and a positive support vector at [1,2,3,4]. What is the width of the margin (twice the distance from the decision boundary to the closest training samples)? What are the weight $w$ and bias $b$? (12 points)
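One possible way to approach both parts is sketched below; it is not the page's official solution. It assumes the usual soft-margin primal, $\min \frac{1}{2}\|w\|^2 + C\sum_i \xi_i$ subject to $y_i(w^T x_i + b) \ge 1 - \xi_i$ and $\xi_i \ge 0$, and it assumes the inequality in the standard form reads $Gz \le h$. With the stacked variable $z = [w; b; \xi]$, $P$ is an identity block on the $w$ entries and zero elsewhere, $q$ puts $C$ on the slack entries, and $G$, $h$ encode the two groups of inequalities. There are no equality constraints, so $A$ and the QP's $b$ (not the bias) are empty. For part (2), the normalized direction is unreadable in the question text, so the value `u` in the usage lines is a purely hypothetical unit vector. The function names `svm_qp_matrices` and `margin_from_support_vectors` are illustrative, not from any library.

```python
def svm_qp_matrices(X, y, C):
    """Build P, q, G, h for the soft-margin linear SVM, with z = [w, b, xi].

    X is a list of m samples (each a list of n features), y a list of +1/-1.
    """
    m, n = len(X), len(X[0])
    d = n + 1 + m  # length of z: n weights, 1 bias, m slacks

    # Objective 0.5 * ||w||^2 + C * sum(xi): identity block on w only.
    P = [[1.0 if (r == c and r < n) else 0.0 for c in range(d)] for r in range(d)]
    q = [0.0] * (n + 1) + [float(C)] * m

    G, h = [], []
    # Margin constraints, rewritten as -y_i * (w . x_i + b) - xi_i <= -1.
    for i in range(m):
        row = [-y[i] * v for v in X[i]] + [-float(y[i])] + [0.0] * m
        row[n + 1 + i] = -1.0
        G.append(row)
        h.append(-1.0)
    # Slack non-negativity, rewritten as -xi_i <= 0.
    for i in range(m):
        row = [0.0] * d
        row[n + 1 + i] = -1.0
        G.append(row)
        h.append(0.0)
    return P, q, G, h


def margin_from_support_vectors(u, x_neg, x_pos):
    """Recover margin width, w and bias from a unit normal u and one
    support vector per class (assumes u points toward the positive class)."""
    # Width = projection of (x_pos - x_neg) onto the unit normal.
    width = sum(ui * (p - q_) for ui, p, q_ in zip(u, x_pos, x_neg))
    # The margin planes are w . x + b = +/-1, so ||w|| = 2 / width.
    scale = 2.0 / width
    w = [scale * ui for ui in u]
    # The negative support vector lies on w . x + b = -1.
    b = -1.0 - sum(wi * xi for wi, xi in zip(w, x_neg))
    return width, w, b


if __name__ == "__main__":
    X = [[0, 0, 0, 0], [1, 2, 3, 4]]
    y = [-1, 1]
    P, q, G, h = svm_qp_matrices(X, y, C=1.0)
    # HYPOTHETICAL direction: the actual vector is garbled in the question.
    u = [0.5, 0.5, 0.5, 0.5]
    print(margin_from_support_vectors(u, X[0], X[1]))
```

Because the negative support vector sits at the origin, the condition $w^T x_- + b = -1$ gives $b = -1$ whatever the direction turns out to be; only the width and the scale of $w$ depend on the missing vector.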
