Question: Topic: Decision Tree. Please show step by step how you got your answer.

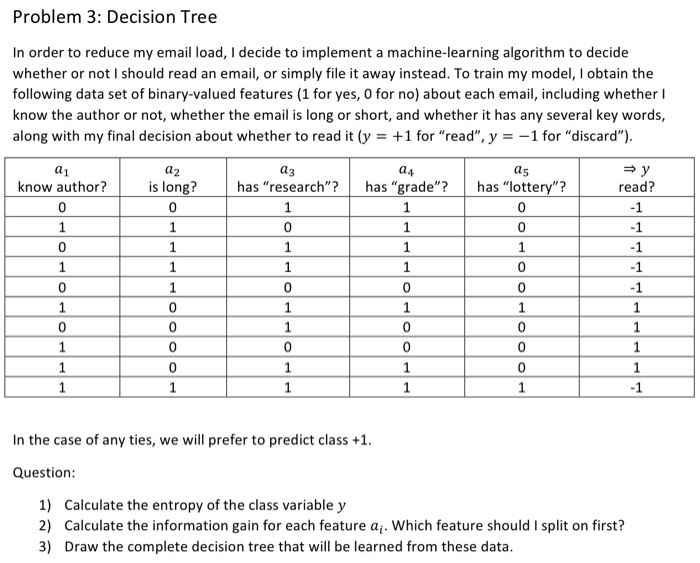

Problem 3: Decision Tree

In order to reduce my email load, I decide to implement a machine-learning algorithm to decide whether I should read an email or simply file it away instead. To train my model, I obtain the following data set of binary-valued features (1 for yes, 0 for no) about each email: whether I know the author, whether the email is long or short, and whether it contains any of several key words, along with my final decision about whether to read it (y = +1 for "read", y = -1 for "discard").

Columns of the data set:

- a1: know author?
- a2: is long?
- a3: has "research"?
- a4: has "grade"?
- a5: has "lottery"?
- y: read?

In the case of any ties, we will prefer to predict class +1.

Questions:

1) Calculate the entropy of the class variable y.
2) Calculate the information gain for each feature a_i. Which feature should I split on first?
3) Draw the complete decision tree that will be learned from these data.
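For reference, these are the standard definitions behind questions 1 and 2, using base-2 logarithms so entropy is measured in bits. Here $p_{+}$ and $p_{-}$ are the fractions of emails with $y = +1$ and $y = -1$, $N$ is the total number of emails, and $N_v$ is the number of emails with $a_i = v$:

$$
H(y) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-}
$$

$$
IG(a_i) = H(y) - \sum_{v \in \{0,1\}} \frac{N_v}{N}\, H(y \mid a_i = v)
$$

Here $H(y \mid a_i = v)$ is the entropy of $y$ computed only over the emails with $a_i = v$, and $0 \log_2 0$ is taken to be 0. The first split is on the feature with the largest $IG(a_i)$; the same rule is then applied again within each branch.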

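The three steps can be checked mechanically with a minimal Python sketch. It assumes each email is encoded as a tuple `(a1, a2, a3, a4, a5)` of 0/1 values with labels +1 / -1. The names `entropy`, `information_gain`, `majority`, `build_tree`, `show` and `FEATURE_NAMES` are hypothetical helpers, not part of the problem. The tree builder is a greedy ID3-style splitter that keeps splitting until a node is pure or no feature can split it further. Class-count ties go to +1 as the problem states; breaking information-gain ties toward the lowest-indexed feature is an added assumption. The data rows from the table are not reproduced in the text, so the dataset in the `__main__` block is made up purely to show the calls.

```python
import math
from collections import Counter

# Assumed encoding (not from the problem): each email is a tuple (a1, a2, a3, a4, a5)
# of 0/1 values, and each label is +1 ("read") or -1 ("discard").
FEATURE_NAMES = ["a1 know author?", "a2 is long?", "a3 has 'research'?",
                 "a4 has 'grade'?", "a5 has 'lottery'?"]


def entropy(labels):
    """Shannon entropy of a list of class labels, in bits (log base 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, feature):
    """H(y) minus the weighted entropy of y within the branches feature = 0 and = 1."""
    n = len(labels)
    remainder = 0.0
    for value in (0, 1):
        branch = [y for x, y in zip(rows, labels) if x[feature] == value]
        remainder += len(branch) / n * entropy(branch)
    return entropy(labels) - remainder


def majority(labels):
    """Most common class; a tie is broken in favor of +1, as the problem specifies."""
    positives = sum(1 for y in labels if y == +1)
    return +1 if positives >= len(labels) - positives else -1


def build_tree(rows, labels, features):
    """Greedy ID3-style tree.

    Returns either a leaf (+1 or -1) or a pair (feature, {0: subtree, 1: subtree}).
    max() keeps the first maximum, so gain ties go to the lowest-indexed feature
    when `features` is given in increasing order (an assumption, not from the problem).
    """
    # Only features that take both values at this node can actually split it.
    splittable = [f for f in features if len({x[f] for x in rows}) == 2]
    if len(set(labels)) == 1 or not splittable:
        return majority(labels)
    best = max(splittable, key=lambda f: information_gain(rows, labels, f))
    remaining = [f for f in features if f != best]
    children = {}
    for value in (0, 1):
        idx = [i for i, x in enumerate(rows) if x[best] == value]
        children[value] = build_tree([rows[i] for i in idx],
                                     [labels[i] for i in idx], remaining)
    return (best, children)


def show(tree, indent=""):
    """Print the tree as nested if-rules, one branch per feature value."""
    if isinstance(tree, int):
        print(f"{indent}predict {tree:+d}")
        return
    feature, children = tree
    for value in (0, 1):
        print(f"{indent}if {FEATURE_NAMES[feature]} = {value}:")
        show(children[value], indent + "    ")


if __name__ == "__main__":
    # Hypothetical toy data for illustration only; NOT the data set from the problem.
    rows = [(1, 0, 0, 0, 0), (1, 1, 1, 0, 0), (0, 0, 1, 0, 0), (0, 1, 0, 0, 1)]
    labels = [+1, +1, -1, -1]
    print("H(y) =", entropy(labels))
    for f in range(5):
        print(f"IG({FEATURE_NAMES[f]}) = {information_gain(rows, labels, f):.3f}")
    show(build_tree(rows, labels, list(range(5))))
```

To answer the actual questions, replace `rows` and `labels` with the ten-or-so emails from the table. Skipping features that take only one value at a node is a design choice: such a split has zero gain and would only add an empty branch, so the sketch returns a majority-vote leaf instead.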