
Use C programming.

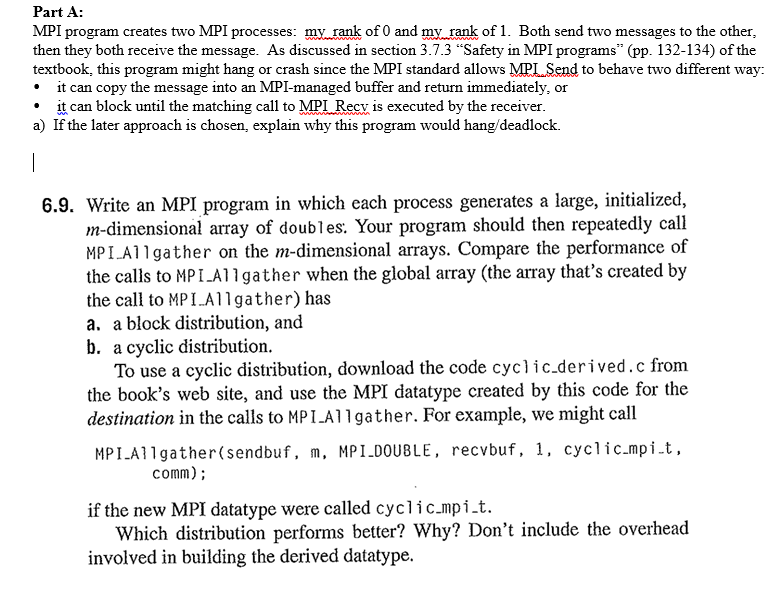

Part A: An MPI program creates two MPI processes, one with `my_rank` 0 and one with `my_rank` 1. Each process sends two messages to the other, and then both processes receive the messages. As discussed in Section 3.7.3, "Safety in MPI programs" (pp. 132-134) of the textbook, this program might hang or crash, because the MPI standard allows `MPI_Send` to behave in two different ways: it can copy the message into an MPI-managed buffer and return immediately, or it can block until the matching call to `MPI_Recv` is executed by the receiver.

a) If the latter approach is chosen, explain why this program would hang (deadlock).
6.9. Write an MPI program in which each process generates a large, initialized, m-dimensional array of doubles. Your program should then repeatedly call `MPI_Allgather` on the m-dimensional arrays. Compare the performance of the calls to `MPI_Allgather` when the global array (the array that's created by the call to `MPI_Allgather`) has (a) a block distribution and (b) a cyclic distribution. To use a cyclic distribution, download the code `cyclic_derived.c` from the book's web site, and use the MPI datatype created by this code for the destination in the calls to `MPI_Allgather`. For example, we might call `MPI_Allgather(sendbuf, m, MPI_DOUBLE, recvbuf, 1, cyclic_mpi_t, comm);` if the new MPI datatype were called `cyclic_mpi_t`. Which distribution performs better? Why? Don't include the overhead involved in building the derived datatype.
