Question:

Use the following starter code (type it in so you get more practice setting up programs):

```cpp
// ==========================================
// Created: August 23, 2018
// @author
//
// Description: Counts unique words in a file and
// outputs the top N most common words
// ==========================================

#include <iostream>
#include <fstream>
#include <sstream>
#include <string>
#include <vector>
#include <iomanip>

using namespace std;

// struct to store word + count combinations
struct wordItem
{
    string word;
    int count;
};

/*
 * Function name: getStopWords
 * Purpose: read stop word list from file and store into vector
 * @param ignoreWordFileName - filename (the file storing ignore/stop words)
 * @param _vecIgnoreWords - store ignore words from the file (pass by reference)
 * @return - none
 * Note: The number of words is fixed to 50
 */
void getStopWords(const char *ignoreWordFileName, vector<string> &_vecIgnoreWords);

/*
 * Function name: isStopWord
 * Purpose: to see if a word is a stop word
 * @param word - a word (which you want to check if it is a stop word)
 * @param _vecIgnoreWords - the vector type of string storing ignore/stop words
 * @return - true (if word is a stop word), or false (otherwise)
 */
bool isStopWord(string word, vector<string> &_vecIgnoreWords);

/*
 * Function name: getTotalNumberNonStopWords
 * Purpose: compute the total number of words saved in the words array (including repeated words)
 * @param list - an array of wordItems containing non-stopwords
 * @param length - the length of the words array
 * @return - the total number of words in the words array (including repeated words multiple times)
 */
int getTotalNumberNonStopWords(wordItem list[], int length);

/*
 * Function name: arraySort
 * Purpose: sort an array of wordItems, decreasing, by their count fields
 * @param list - an array of wordItems to be sorted
 * @param length - the length of the words array
 */
void arraySort(wordItem list[], int length);

/**
 * Function name: printTopN
 * Purpose: to print the top N high frequency words
 * @param wordItemList - a pointer that points to a *sorted* array of wordItems
 * @param topN - the number of top frequency words to print
 * @return none
 */
void printTopN(wordItem wordItemList[], int topN);

const int STOPWORD_LIST_SIZE = 50;
const int INITIAL_ARRAY_SIZE = 100;

// ./a.out 10 HW2-HungerGames_edit.txt HW2-ignoreWords.txt
int main(int argc, char *argv[])
{
    vector<string> vecIgnoreWords(STOPWORD_LIST_SIZE);

    // verify we have the correct # of parameters, else throw error msg & return
    if (argc != 4)
    {
        cout << "Usage: ";
        cout << argv[0] << " <number of words> <inputfilename.txt> <ignoreWordsfilename.txt>";
        cout << endl;
        return 0;
    }

    /* **********************************
    1. Implement your code here.
       Read words from the file passed in on the command line, store and
       count the words.
    2. Implement each of the functions declared above; their stubs
       follow main().
    ********************************** */

    return 0;
}

void getStopWords(const char *ignoreWordFileName, vector<string> &_vecIgnoreWords)
{
}

bool isStopWord(string word, vector<string> &_vecIgnoreWords)
{
    return true; // placeholder so the starter code compiles
}

int getTotalNumberNonStopWords(wordItem list[], int length)
{
    return 0; // placeholder so the starter code compiles
}

void arraySort(wordItem list[], int length)
{
}

void printTopN(wordItem wordItemList[], int topN)
{
}
```
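One possible way to fill in the five stubs is sketched below. It is a minimal sketch under stated assumptions, not a reference solution: it assumes the stop-word file holds whitespace-separated words, uses insertion sort for `arraySort` (any sort that orders by `count`, largest first, would satisfy the comment), and prints each entry in printTopN as `<count> - <word>`, a line format that is assumed here because the text does not specify the required one.

```cpp
#include <cassert>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

using namespace std;

// Same struct as in the starter code, repeated so this sketch compiles alone.
struct wordItem
{
    string word;
    int count;
};

// Reads every whitespace-separated token in the stop-word file into
// _vecIgnoreWords. The vector is cleared first, so this works whether the
// caller passes an empty vector or one pre-sized to STOPWORD_LIST_SIZE.
void getStopWords(const char *ignoreWordFileName, vector<string> &_vecIgnoreWords)
{
    _vecIgnoreWords.clear();
    ifstream in(ignoreWordFileName);
    if (!in)
    {
        cerr << "Failed to open " << ignoreWordFileName << endl;
        return;
    }
    string word;
    while (in >> word)
        _vecIgnoreWords.push_back(word);
}

// Linear scan over the stop-word list (about 50 entries, so this is cheap).
bool isStopWord(string word, vector<string> &_vecIgnoreWords)
{
    for (const string &stopWord : _vecIgnoreWords)
    {
        if (stopWord == word)
            return true;
    }
    return false;
}

// Sums the count fields, so a repeated word contributes once per occurrence.
int getTotalNumberNonStopWords(wordItem list[], int length)
{
    int total = 0;
    for (int i = 0; i < length; i++)
        total += list[i].count;
    return total;
}

// Insertion sort by count, largest first. Only strictly smaller counts are
// shifted right, so words with equal counts keep their original order.
void arraySort(wordItem list[], int length)
{
    for (int i = 1; i < length; i++)
    {
        wordItem current = list[i];
        int j = i - 1;
        while (j >= 0 && list[j].count < current.count)
        {
            list[j + 1] = list[j];
            j--;
        }
        list[j + 1] = current;
    }
}

// Prints the first topN entries of an array already sorted by arraySort.
// The caller must ensure topN does not exceed the number of stored words.
// Assumed output format: "<count> - <word>", one entry per line.
void printTopN(wordItem wordItemList[], int topN)
{
    assert(topN >= 0);
    for (int i = 0; i < topN; i++)
        cout << wordItemList[i].count << " - " << wordItemList[i].word << endl;
}
```

Because `printTopN` receives no array length, clamping `n` to the number of unique words before calling it avoids reading past the end of the array when a file has fewer than `n` distinct non-stop words.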

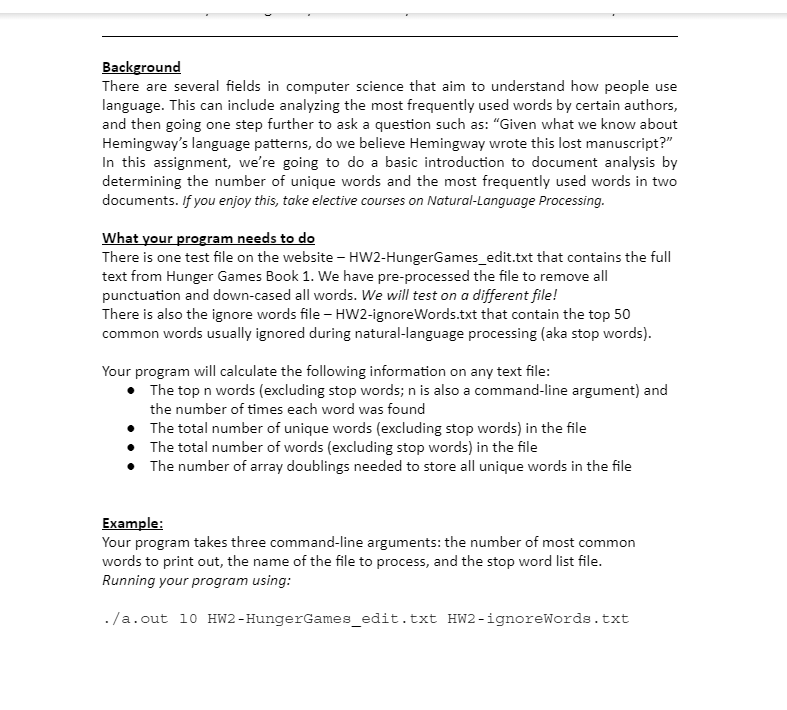

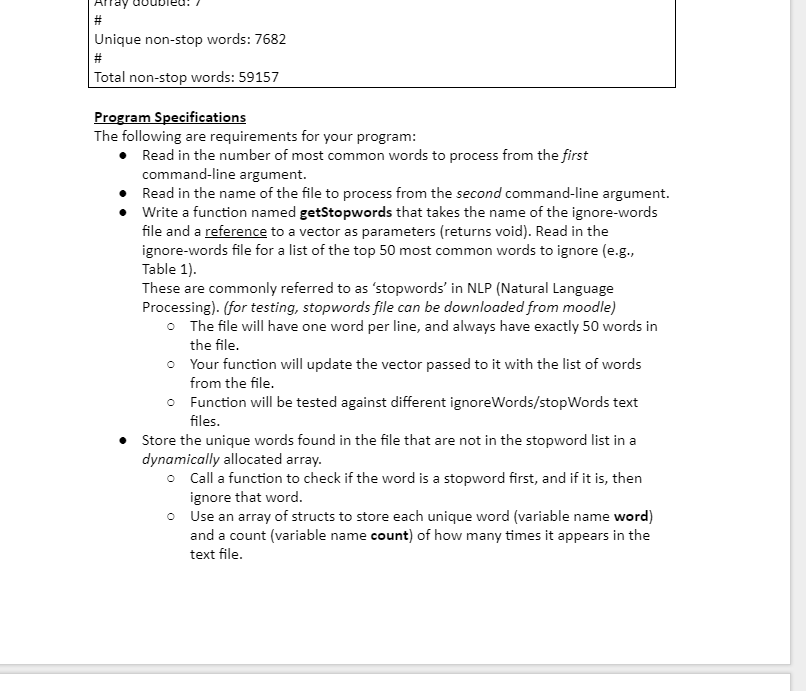

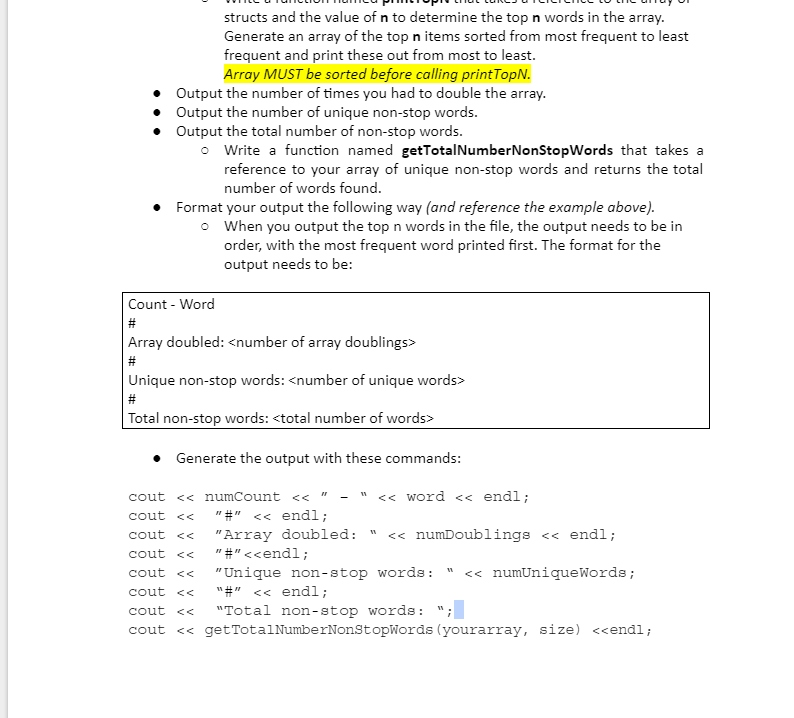

Background

There are several fields in computer science that aim to understand how people use language. This can include analyzing the most frequently used words by certain authors and then going one step further to ask a question such as: "Given what we know about Hemingway's language patterns, do we believe Hemingway wrote this lost manuscript?" In this assignment, we're going to do a basic introduction to document analysis by determining the number of unique words and the most frequently used words in two documents. If you enjoy this, take elective courses on Natural-Language Processing.

What your program needs to do

There is one test file on the website, HW2-HungerGames_edit.txt, that contains the full text from Hunger Games Book 1. We have pre-processed the file to remove all punctuation and down-cased all words. We will test on a different file! There is also the ignore words file, HW2-ignoreWords.txt, that contains the top 50 common words usually ignored during natural-language processing (aka stop words).

Your program will calculate the following information on any text file:

- The top n words (excluding stop words; n is also a command-line argument) and the number of times each word was found
- The total number of unique words (excluding stop words) in the file
- The total number of words (excluding stop words) in the file
- The number of array doublings needed to store all unique words in the file

Your program takes three command-line arguments: the number of most common words to print out, the name of the file to process, and the stop word list file.

Example: run your program using `./a.out 10 HW2-HungerGames_edit.txt HW2-ignoreWords.txt`.
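The last requirement implies that the unique words are kept in a dynamically allocated array that starts at `INITIAL_ARRAY_SIZE` entries and doubles whenever it fills. A minimal sketch of that bookkeeping is below, assuming a hand-managed `new[]`/`delete[]` array of `wordItem`; `doubleArray` and `recordWord` are hypothetical helper names, not part of the starter code.

```cpp
#include <cassert>
#include <string>

using namespace std;

// Same struct as in the starter code, repeated so this sketch compiles alone.
struct wordItem
{
    string word;
    int count;
};

// Hypothetical helper (not in the starter code): allocates an array twice as
// large, copies the first `size` entries, frees the old array, and updates
// `capacity`. Returns the new array.
wordItem *doubleArray(wordItem *oldArray, int size, int &capacity)
{
    assert(size <= capacity);
    int newCapacity = capacity * 2;
    wordItem *newArray = new wordItem[newCapacity];
    for (int i = 0; i < size; i++)
        newArray[i] = oldArray[i];
    delete[] oldArray;
    capacity = newCapacity;
    return newArray;
}

// Hypothetical helper: records one non-stop word. If the word is already
// stored, its count goes up; otherwise it is appended with count 1, doubling
// the array first (and incrementing `doublings`) when the array is full.
void recordWord(const string &word, wordItem *&items, int &uniqueCount,
                int &capacity, int &doublings)
{
    for (int i = 0; i < uniqueCount; i++)
    {
        if (items[i].word == word)
        {
            items[i].count++;
            return;
        }
    }
    if (uniqueCount == capacity)
    {
        items = doubleArray(items, uniqueCount, capacity);
        doublings++;
    }
    items[uniqueCount].word = word;
    items[uniqueCount].count = 1;
    uniqueCount++;
}
```

In `main`, one would start with `capacity = INITIAL_ARRAY_SIZE` and `items = new wordItem[capacity]`, read the input file word by word with `>>`, skip any word for which `isStopWord` returns true, and pass the rest to `recordWord`. Afterwards `uniqueCount` and `doublings` give two of the required numbers, `getTotalNumberNonStopWords(items, uniqueCount)` gives the total, and `arraySort` followed by `printTopN` gives the top n list; `delete[] items` releases the array. The linear search makes each lookup proportional to the number of unique words so far; a hash map would be faster, but the requirement to report doublings suggests a manually grown array is what the assignment expects.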
