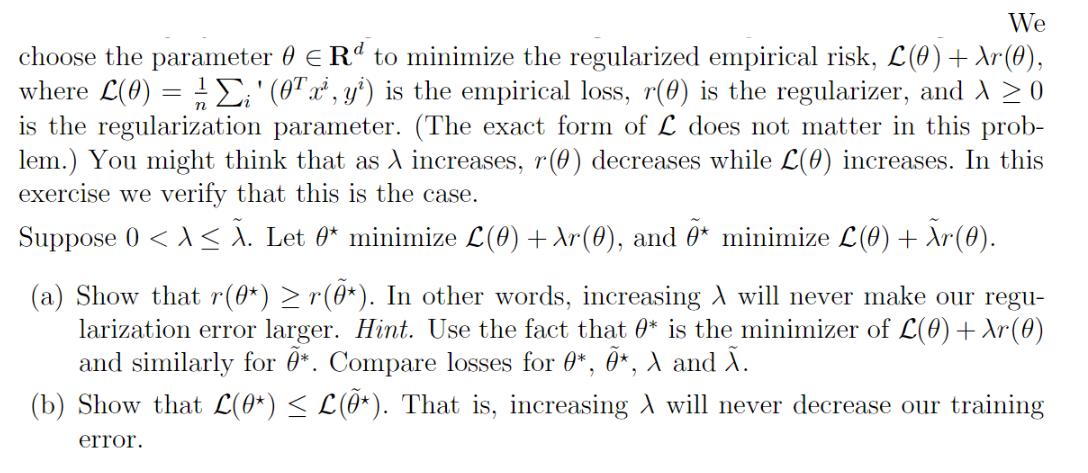

Question: We choose the parameter $\theta \in \mathbb{R}^d$ to minimize the regularized empirical risk $L(\theta) + \lambda r(\theta)$, where $L(\theta)$ is the empirical loss on the training pairs $(x, y)$, $r(\theta)$ is the regularizer, and $\lambda > 0$ is the regularization parameter. (The exact form of $L$ does not matter in this problem.) You might think that as $\lambda$ increases, $r(\theta)$ decreases while $L(\theta)$ increases. In this exercise we verify that this is the case. Suppose $0 < \lambda < \mu$. Let $\theta^*$ minimize $L(\theta) + \lambda r(\theta)$, and let $\theta^\sharp$ minimize $L(\theta) + \mu r(\theta)$.

(a) Show that $r(\theta^\sharp) \le r(\theta^*)$. In other words, increasing $\lambda$ will never make our regularization error larger. Hint: use the fact that $\theta^*$ is the minimizer of $L(\theta) + \lambda r(\theta)$, and similarly for $\theta^\sharp$. Compare the objective values for $\theta^*$, $\theta^\sharp$, $\lambda$, and $\mu$.

(b) Show that $L(\theta^\sharp) \ge L(\theta^*)$. That is, increasing $\lambda$ will never decrease our training error.
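One standard argument, sketched here, uses nothing beyond the two minimality conditions, which is why the form of $L$ (and of $r$) does not matter. In this sketch $\mu$ denotes the larger regularization parameter and $\theta^\sharp$ a minimizer of $L(\theta) + \mu r(\theta)$ (labels chosen for this sketch), and both minimizers are assumed to exist with finite objective values, as the problem states.

$$
\begin{aligned}
&L(\theta^*) + \lambda r(\theta^*) \le L(\theta^\sharp) + \lambda r(\theta^\sharp) && \text{($\theta^*$ minimizes the $\lambda$-objective)} \\
&L(\theta^\sharp) + \mu r(\theta^\sharp) \le L(\theta^*) + \mu r(\theta^*) && \text{($\theta^\sharp$ minimizes the $\mu$-objective)}
\end{aligned}
$$

Adding the two inequalities cancels $L(\theta^*) + L(\theta^\sharp)$ from both sides and leaves

$$
\lambda r(\theta^*) + \mu r(\theta^\sharp) \le \lambda r(\theta^\sharp) + \mu r(\theta^*)
\;\Longleftrightarrow\;
(\mu - \lambda)\,\bigl(r(\theta^\sharp) - r(\theta^*)\bigr) \le 0 .
$$

Since $\mu - \lambda > 0$, this gives $r(\theta^\sharp) \le r(\theta^*)$, which is part (a). For part (b), rearrange the first inequality alone:

$$
L(\theta^*) - L(\theta^\sharp) \le \lambda\,\bigl(r(\theta^\sharp) - r(\theta^*)\bigr) \le 0,
$$

where the last step uses part (a) and $\lambda > 0$. Hence $L(\theta^\sharp) \ge L(\theta^*)$.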

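A quick numerical sanity check of both parts, as a sketch under stated assumptions: the loss `empirical_loss`, the regularizer `regularizer`, the candidate grid, and the list of `lambdas` below are hypothetical choices made only for illustration (a deliberately nonconvex loss on $\theta \in \mathbb{R}^2$ and an $\ell_1$ regularizer). Exhaustive search over a finite grid returns exact minimizers over that grid, and the argument for (a) and (b) relies only on minimality, so the monotonicity should show up here as well.

```python
import itertools
import math

# Hypothetical empirical loss L(theta) on theta in R^2. It is nonconvex on purpose,
# since the exercise says the exact form of L does not matter.
def empirical_loss(theta):
    a, b = theta
    return (a - 2.0) ** 2 + (b + 1.0) ** 2 + math.sin(3.0 * a) * math.cos(2.0 * b)

# Hypothetical regularizer r(theta): the L1 norm.
def regularizer(theta):
    return abs(theta[0]) + abs(theta[1])

# Finite candidate set: a grid on [-3, 3]^2 with spacing 0.05.
GRID = [i * 0.05 for i in range(-60, 61)]
CANDIDATES = list(itertools.product(GRID, GRID))

def minimizer(lam):
    """Exhaustive search: an exact minimizer of L + lam * r over CANDIDATES."""
    return min(CANDIDATES, key=lambda th: empirical_loss(th) + lam * regularizer(th))

lambdas = [0.1, 0.5, 1.0, 2.0, 4.0, 8.0]  # increasing, all > 0
path = [minimizer(lam) for lam in lambdas]
regs = [regularizer(th) for th in path]
losses = [empirical_loss(th) for th in path]

tol = 1e-9  # absorbs floating-point rounding in near-ties
# Part (a): r(theta) never increases as lambda grows.
print(all(nxt <= cur + tol for cur, nxt in zip(regs, regs[1:])))      # expected: True
# Part (b): L(theta) never decreases as lambda grows.
print(all(nxt >= cur - tol for cur, nxt in zip(losses, losses[1:])))  # expected: True
```

Both lines should print `True`. Swapping in a different loss, regularizer, or candidate set should not change that outcome, because the proof never uses convexity or any particular form of $L$ or $r$, only the fact that each parameter is an exact minimizer of its own objective.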